Head of the InClow research group: https://inclow-lm.github.io/

Program: www.rug.nl/masters/natu...

Applications (2026/2027) are open! Come and study with us (you will also learn why we have a 🐮 in our logo)

Paper: arxiv.org/abs/2503.03044

Slides/video/poster: underline.io/lecture/1315...

Come check our poster TODAY (Fri, Nov 7, 12:30 - 13:30) #EMNLP!

We’ve built an NN-agent framework to simulate how people choose the best word in a given communication context (i.e. pragmatic naming behavior).

With @yuqing0304.bsky.social, @ecesuurker.bsky.social, Tessa Verhoef, @gboleda.bsky.social

Come to our poster TODAY (Fr 7 Nov 10.30-12.00) #EMNLP to meet TurBLiMP, a new benchmark for Turkish, revealing how LLMs deal with free-word-order, morphologically rich languages

Pre-print: arxiv.org/abs/2506.13487

Fruit of an almost year-long project by amazing MS student @ezgibasar.bsky.social in collab w/ @frap98.bsky.social and @jumelet.bsky.social

Come check it out if you're interested in multilingual linguistic evaluation of LLMs (there will be parse trees on the slides! There's still use for syntactic structure!)

arxiv.org/abs/2504.02768

Come to our poster TODAY (Fr 7 Nov, 10.30-12.00) #EMNLP!

I’m happy to share that the preprint of my first PhD project is now online!

🎊 Paper: arxiv.org/abs/2505.23689

Interested in Interpretable, Cognitively inspired, Low-resource LMs? Don't miss our posters & talks #EMNLP2025!

Large reasoning models (LRMs) are strong in English — but how well do they reason in your language?

Our latest work uncovers their limitations and a clear trade-off:

Controlling Thinking Trace Language Comes at the Cost of Accuracy

📄Link: arxiv.org/abs/2505.22888

Here’s the proof! 𝐁𝐚𝐛𝐲𝐁𝐚𝐛𝐞𝐥𝐋𝐌 is the first Multilingual Benchmark of Developmentally Plausible Training Data available for 45 languages to the NLP community 🎉

arxiv.org/abs/2510.10159

MME focuses on resources, metrics & methodologies for evaluating multilingual systems! multilingual-multicultural-evaluation.github.io

📅 Workshop Mar 24–29, 2026

🗓️ Submit by Dec 19, 2025

Can't wait to discuss our work at #EMNLP2025 in Suzhou this November!

We (@arianna-bis.bsky.social, Raquel Fernández and I) answered this question in our new paper: "Reading Between the Prompts: How Stereotypes Shape LLM's Implicit Personalization".

🧵

arxiv.org/abs/2505.16467

New paper w/ @gsarti.com

@zouharvi.bsky.social @malvinanissim.bsky.social

We compare uncertainty- and interpretability-based word-level quality estimation (WQE) metrics across 12 translation directions, with some surprising findings!

🧵 1/

New preprint led by @jiruiqi.bsky.social and @shan23chen.bsky.social!

@veraneplenbroek.bsky.social’s analysis shows LLMs behave differently according to your gender, race & more. Implicit personalization is always at work & is strongly based on your conversation topics.

Great collab w/ Raquel Fernández ⤵️

W/ @danielsc4.it, @gsarti.com, Elisabetta Fersini, @malvinanissim.bsky.social

Joint work w/ @jiruiqi.bsky.social & Raquel Fernández

See thread! ⤵️

[1/] Retrieving passages from many languages can boost retrieval augmented generation (RAG) performance, but how good are LLMs at dealing with multilingual contexts in the prompt?

📄 Check it out: arxiv.org/abs/2504.00597

(w/ @arianna-bis.bsky.social & Raquel Fernández)

#NLProc

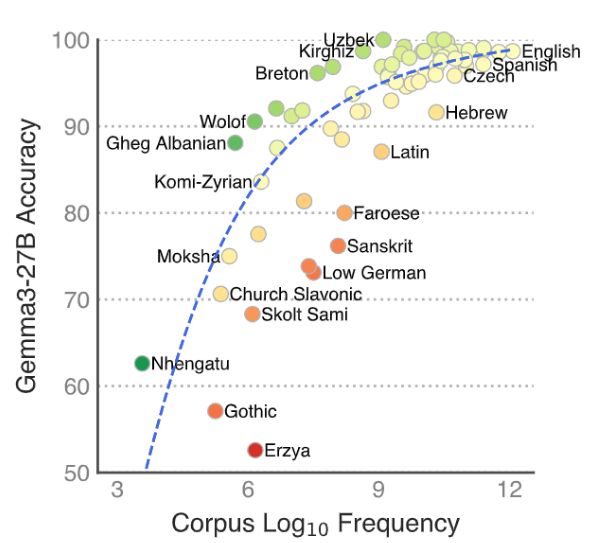

Multilinguality claims are often based on downstream tasks like QA & MT, while *formal* linguistic competence remains hard to gauge in lots of languages

Meet MultiBLiMP!

(joint work w/ @jumelet.bsky.social & @weissweiler.bsky.social)

Introducing 🌍MultiBLiMP 1.0: A Massively Multilingual Benchmark of Minimal Pairs for Subject-Verb Agreement, covering 101 languages!

We present over 125,000 minimal pairs and evaluate 17 LLMs, finding that support is still lacking for many languages.

🧵⬇️

correlation-machine.com/CHIELD/varia...

Submit your work by March 14th, 11:59 PM (AoE, UTC-12)

Don't miss out! ⏳

🔗 Submission links: conll.org

#CoNLL2025 #NLP #CoNLL

Come work with Annemarie van Dooren, @yevgenm.bsky.social and myself on a new project bridging Computational Linguistics methods and Language Acquisition questions, with a focus on the learning of modal verbs.
