antonleicht.me

• More separation between eval technical work and policy advocacy

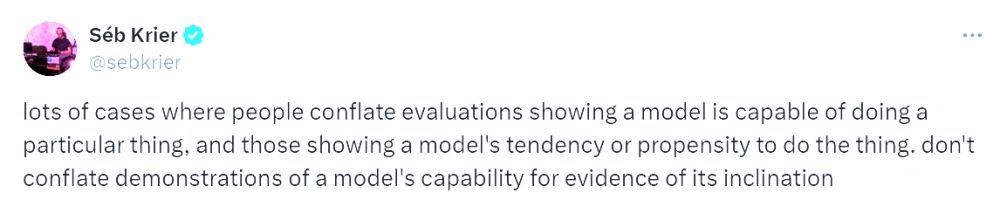

• Focus on propensity over capability

• Clearer articulation of implications for deployment

• Greater focus on frameworks, fewer evals for evals' sake

(11/N)

As long as concerning evals just get people used to inaction, more evals are not that politically helpful. (10/N)

If current evals don't warrant deployment delays, then it should be made clear that only the trajectory is concerning. (9/N)

Is this evidence for impending doom, or evidence that someone asked a robot to pretend to be evil? Turns out, both. (7/N)

This already started to show in the SB-1047 discussion: (4/N)

antonleicht.me/writing/evals

It's a little disheartening to see usually cooler heads and elsewhere take the bait. (10/N)

Lots of jurisdictions are considering AI regulation at the moment. Delays foster the impression that regulating AI leads to frustration and constraints on business.

That's fair - but it means OpenAI likely isn't only focused on making Sora available in Europe ASAP. (9/N)

First, it focuses latent frustration on uncomfortable policy, e.g. on AIA implementation through the Code of Practice and national laws.

Through delays, OpenAI is leveraging public & business pressure to weaken this implementation. (8/N)

I just don't think there is good evidence for that takeaway. If major models actually skip Europe, I'll be very concerned.

But currently, statements like the one below have better alternative explanations. (7/N)

As competition around new model classes heats up, that effect will carry over - and delays will become rarer. (6/N)

Compounding costs from further delays are very concerning. I think they're a little less likely than reported: OpenAI has delayed Sora, but not o1, a similarly regulation-relevant model (see the scorecard). (5.5/N)

This seems unlikely to matter at the scale that the doom-saying implies. The first couple of months of OpenAI releases often see growing pains anyway, and economic integration of video generation will take some time. (4.5/N)

Three options: Sora delay is costly, future delays will be costly, future skipped releases will be costly. (4/N)

But that could change: Many other markets (e.g. US states - map below from June 2024) might also regulate soon. Once they reach critical mass, local delays wouldn't be realistic anymore. (3/N)

But Europe falling on its sword to make US AI safer wouldn't be sound political strategy. I instead focus on the economic side: (2/N)

No official info, but it's unlikely that this is (only) about the EU AI Act: It's also delayed in the UK, which has no AIA. More likely to be about the ~similar EU Digital Markets Act and UK Online Safety Act.

The Guardian has a similar interpretation: (1/N)

But as they get associated with unpopular policy, they’re at risk, too - like with Sen. Cruz's attacks on NIST. (5/N)
