presenting at the morning poster session on thursday

excited to catch up with friends and collaborators, old and new

let's chat

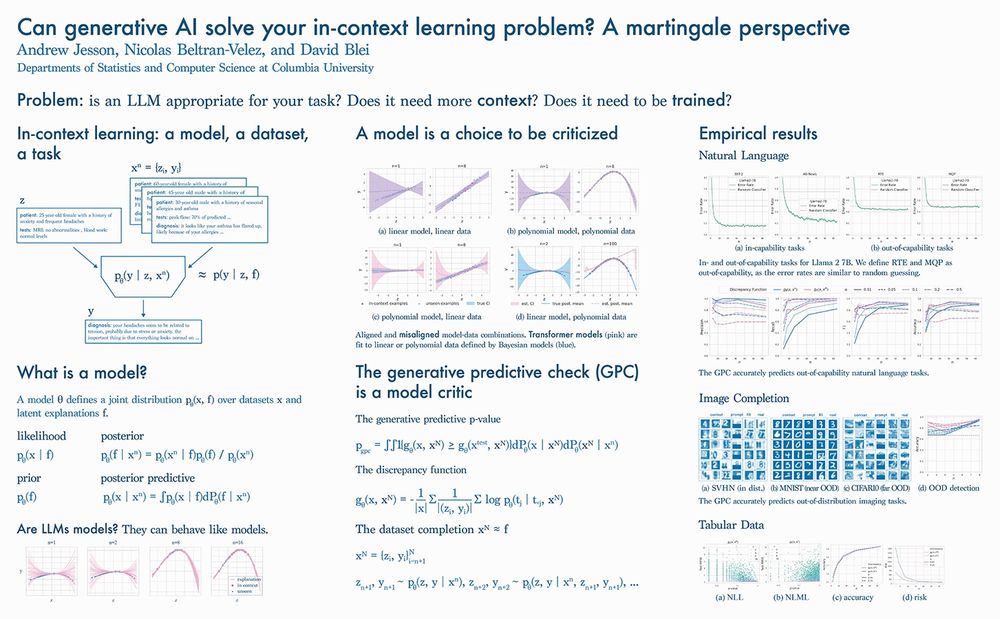

the NLL gives p-values that are informative of whether there are enough in-context examples

this can reduce risk in safety-critical settings

pre-selecting a significance level α to threshold the p-value gives us a predictor of model capacity: the generative predictive check (GPC)
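
a minimal sketch of the thresholding step, not the paper's implementation. it assumes a stand-in model that exposes only a sampler and a log-prob (here a toy unit Gaussian). the names `gpc_p_value`, `gpc`, `sample_fn`, and `nll_fn` are hypothetical, and the one-sided p-value with a +1 correction is an assumed convention:

```python
import math
import random


def gaussian_nll(xs, mu=0.0, sigma=1.0):
    """Negative log likelihood of xs under a Gaussian (toy stand-in for model log probs)."""
    const = 0.5 * math.log(2 * math.pi * sigma ** 2)
    return sum(const + (x - mu) ** 2 / (2 * sigma ** 2) for x in xs)


def gaussian_sample(rng, n, mu=0.0, sigma=1.0):
    """Draw n points from the same Gaussian (toy stand-in for sampling from the model)."""
    return [rng.gauss(mu, sigma) for _ in range(n)]


def gpc_p_value(observed, sample_fn, nll_fn, num_samples=1000, seed=0):
    """Estimate how often model-generated data is at least as surprising as the observed data."""
    rng = random.Random(seed)
    observed_nll = nll_fn(observed)
    # Replicated datasets come from the model itself, so only sampling and log probs are needed.
    replicated_nlls = [
        nll_fn(sample_fn(rng, len(observed))) for _ in range(num_samples)
    ]
    exceed = sum(r >= observed_nll for r in replicated_nlls)
    # +1 correction keeps the Monte Carlo estimate strictly above zero (assumed convention).
    return (exceed + 1) / (num_samples + 1)


def gpc(observed, sample_fn, nll_fn, alpha=0.05, **kwargs):
    """Return (p_value, passed); passed is True when the p-value is at least alpha."""
    p_value = gpc_p_value(observed, sample_fn, nll_fn, **kwargs)
    return p_value, p_value >= alpha


# Data that looks like the model's own samples versus data far from them.
typical = [0.1, -0.3, 0.5, -0.2, 0.0]
shifted = [5.0, 5.2, 4.8, 5.1, 4.9]
p_typical, ok_typical = gpc(typical, gaussian_sample, gaussian_nll)
p_shifted, ok_shifted = gpc(shifted, gaussian_sample, gaussian_nll)
```

with α fixed in advance, the check passes on data the model finds typical and fails on data it finds far more surprising than its own samples. for an LLM, `sample_fn` and `nll_fn` would wrap generation and token log probs conditioned on the in-context examples.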

posterior predictive checks form a family of model criticism techniques

but for discrepancy functions like the negative log likelihood, PPCs require the likelihood and posterior

but such inferences are made by any model, even misaligned ones

if a model is too flexible, more examples may be needed to specify the task

if it is too specialized, the inferences may be unreliable

the joint comprises the likelihood over datasets and the prior over explanations

the posterior is a distribution over explanations given a dataset

the posterior predictive gives the model a voice
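
in symbols, as a sketch with assumed notation (f for an explanation, D for a dataset, y for a new response, none of which are fixed by this thread), and assuming y is independent of D given f:

$$
p(f, D) = p(D \mid f)\, p(f), \qquad
p(f \mid D) = \frac{p(D \mid f)\, p(f)}{\int p(D \mid f')\, p(f')\, df'}, \qquad
p(y \mid D) = \int p(y \mid f)\, p(f \mid D)\, df
$$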

knowing when an LLM provides reliable responses is challenging in this setting

there may not be enough in-context examples to specify the task

or the model may just not have the capability to do it

we develop a predictor that only requires sampling and log probs

we show it works for tabular, natural language, and imaging problems

come chat at the safe generative ai workshop at NeurIPS

📄 arxiv.org/abs/2412.06033
