🚀 New paper out: ManagerBench: Evaluating the Safety-Pragmatism Trade-off in Autonomous LLMs🚀🧵

We're planning to keep this a living benchmark; come by and share your ideas/hot takes!

Consider submitting your work to the MIB Shared Task, part of #BlackboxNLP at @emnlpmeeting.bsky.social 2025!

The goal: benchmark existing MI methods and identify promising directions to precisely and concisely recover causal pathways in LMs >>

📆 Review period: May 24-June 7

If you're passionate about making interpretability useful and want to help shape the conversation, we'd love your input.

💡🔍 Self-nominate here:

docs.google.com/forms/d/e/1F...

Only 5 days left ⏰!

Got a paper accepted to ICML that fits our theme?

Submit it to our conference track!

👉 @actinterp.bsky.social

The Actionable Interpretability Workshop at #ICML2025 has moved its submission deadline to May 19th. More time to submit your work 🔍🧠✨ Don’t miss out!

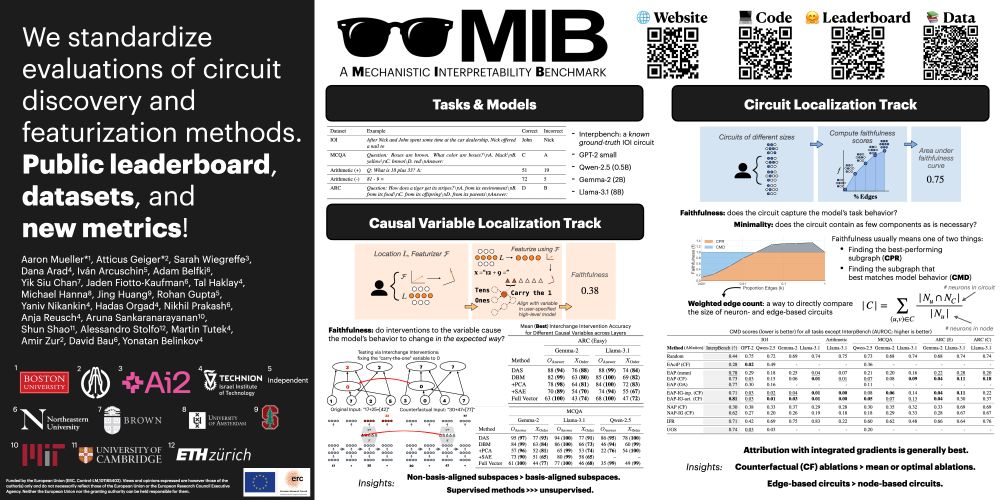

We propose 😎 𝗠𝗜𝗕: a 𝗠echanistic 𝗜nterpretability 𝗕enchmark!

The First Workshop on 𝐀𝐜𝐭𝐢𝐨𝐧𝐚𝐛𝐥𝐞 𝐈𝐧𝐭𝐞𝐫𝐩𝐫𝐞𝐭𝐚𝐛𝐢𝐥𝐢𝐭𝐲 will be held at ICML 2025 in Vancouver!

📅 Submission Deadline: May 9

Follow us >> @ActInterp

🧠Topics of interest include: 👇

Website: actionable-interpretability.github.io

Deadline: May 9

> Follow @actinterp.bsky.social

> Website actionable-interpretability.github.io

@talhaklay.bsky.social @anja.re @mariusmosbach.bsky.social @sarah-nlp.bsky.social @iftenney.bsky.social

Paper submission deadline: May 9th!

Led by Yaniv Nikankin: arxiv.org/abs/2410.21272

We propose a method to automatically find position-aware circuits, improving faithfulness while keeping circuits compact. 🧵👇

LLMs can hallucinate - but did you know they can do so with high certainty even when they know the correct answer? 🤯

We find those hallucinations in our latest work with @itay-itzhak.bsky.social, @fbarez.bsky.social, @gabistanovsky.bsky.social and Yonatan Belinkov

Ever wonder whether verbalized CoTs correspond to the internal reasoning process of the model?

We propose a novel parametric faithfulness approach, which erases information contained in CoT steps from the model parameters to assess CoT faithfulness.

arxiv.org/abs/2502.14829

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, longer context, and more useful. 🧵
