Anirbit

@anirbit.bsky.social

Assistant Professor/Lecturer in ML @ The University of Manchester | https://anirbit-ai.github.io/ | working on the theory of neural nets and how they solve differential equations. #AI4SCIENCE

Some luck to be hosted by a Godel Prize winner, Prof. Sebastien Pokutta, and to present our work in their group 💥 Sebastien heads this "Zuse Institute Berlin (#ZIB) " which is an amazing oasis of applied mathematics bringing together experts from different institutes in Berlin.

August 7, 2025 at 4:34 PM

Some luck to be hosted by a Godel Prize winner, Prof. Sebastien Pokutta, and to present our work in their group 💥 Sebastien heads this "Zuse Institute Berlin (#ZIB) " which is an amazing oasis of applied mathematics bringing together experts from different institutes in Berlin.

Hello #FAU. Thanks for the quick plan to host me and letting me present our exciting mathematics of ML in infinite-dimensions, #operatorlearning. #sciML Their "Pattern Recognition Laboratory" is completing 50 years! @andreasmaier.bsky.social 💥

August 2, 2025 at 6:31 PM

Hello #FAU. Thanks for the quick plan to host me and letting me present our exciting mathematics of ML in infinite-dimensions, #operatorlearning. #sciML Their "Pattern Recognition Laboratory" is completing 50 years! @andreasmaier.bsky.social 💥

Our insight is to introduce an intermediate form of gradient clipping that can leverage the PL* inequality of wide nets - something not known for standard clipping. Given our algorithm works for transformers maybe that points to some yet unkown algebraic property of them. #TMLR

June 29, 2025 at 10:38 PM

Our insight is to introduce an intermediate form of gradient clipping that can leverage the PL* inequality of wide nets - something not known for standard clipping. Given our algorithm works for transformers maybe that points to some yet unkown algebraic property of them. #TMLR

Now our research group has a logo to succinctly convey what we do - prove theorems about using ML to solve PDEs, leaning towards operator learning. Thanks to #ChatGPT4o for converting my sketches into a digital image 🔥 #AI4Science #SciML

June 7, 2025 at 7:03 PM

Now our research group has a logo to succinctly convey what we do - prove theorems about using ML to solve PDEs, leaning towards operator learning. Thanks to #ChatGPT4o for converting my sketches into a digital image 🔥 #AI4Science #SciML

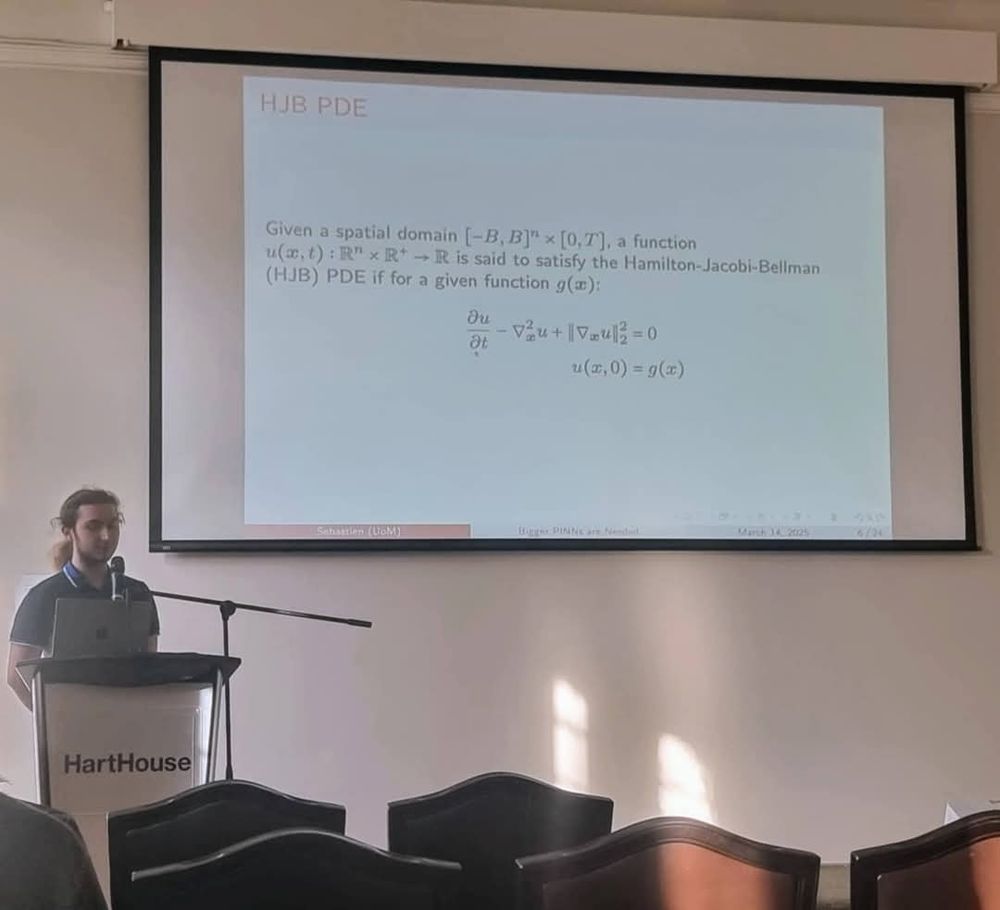

My first year PhD student Sébastien André-sloan presents at a #INFORMS conference in Toronto. Its a joint work with Matthew Colbrook at DAMTP, Cambridge. We prove a first-of-its-kind size requirement on neural nets for solving PDEs in the super-resolution setup - the natural setup for #PINNs.

March 15, 2025 at 7:10 PM

second time speaking @ the holy land of statistics, the Indian Statistical Institute (ISI) 🙂

In 2022 I was @ the Bangalore campus. Same topic : theory of operator learning

- the story continues 🔥 Both journal papers were published at #TMLR in 2024.

In 2022 I was @ the Bangalore campus. Same topic : theory of operator learning

- the story continues 🔥 Both journal papers were published at #TMLR in 2024.

January 10, 2025 at 5:00 AM

second time speaking @ the holy land of statistics, the Indian Statistical Institute (ISI) 🙂

In 2022 I was @ the Bangalore campus. Same topic : theory of operator learning

- the story continues 🔥 Both journal papers were published at #TMLR in 2024.

In 2022 I was @ the Bangalore campus. Same topic : theory of operator learning

- the story continues 🔥 Both journal papers were published at #TMLR in 2024.

This plot is from our 3rd #TMLR journal paper of the year - lnkd.in/e9NWcPcK - on generalization bounds for #DeepOperatorNet methods of PDE solving. #AI4Science Our prediction from theory is this: *use Huber loss to solve PDEs* - and here's a demonstrative comparison on Heat PDE

December 7, 2024 at 9:33 PM

This plot is from our 3rd #TMLR journal paper of the year - lnkd.in/e9NWcPcK - on generalization bounds for #DeepOperatorNet methods of PDE solving. #AI4Science Our prediction from theory is this: *use Huber loss to solve PDEs* - and here's a demonstrative comparison on Heat PDE

If you are at the 2nd UK AI Conference do stop by my student, Dibyakanti's poster 😊 Its one of his upcoming works - about why and when does Langevin Monte-Carlo train nets! At its core its an observation about neural losses satisfying the right isoperimetry inequalities as needed for LMC to work.

November 22, 2024 at 12:09 PM

If you are at the 2nd UK AI Conference do stop by my student, Dibyakanti's poster 😊 Its one of his upcoming works - about why and when does Langevin Monte-Carlo train nets! At its core its an observation about neural losses satisfying the right isoperimetry inequalities as needed for LMC to work.