@vladmnih.bsky.social, and Tim Genewein!

All details and many more results in arxiv.org/abs/2412.01441

N/N

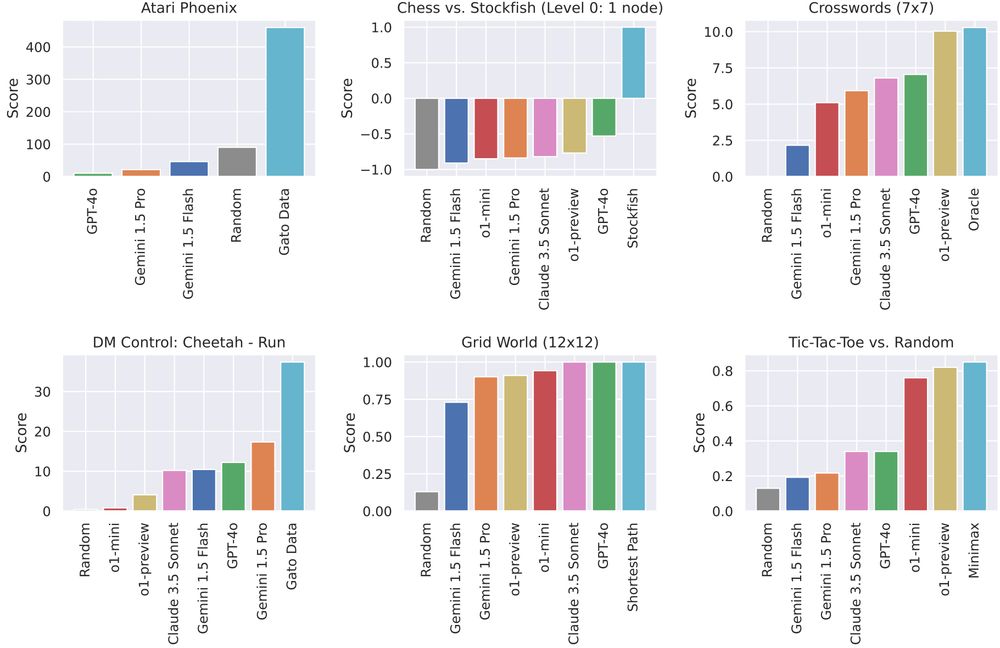

Most models perform well, with the exception of o1-mini, which fails across most tasks.

5/N

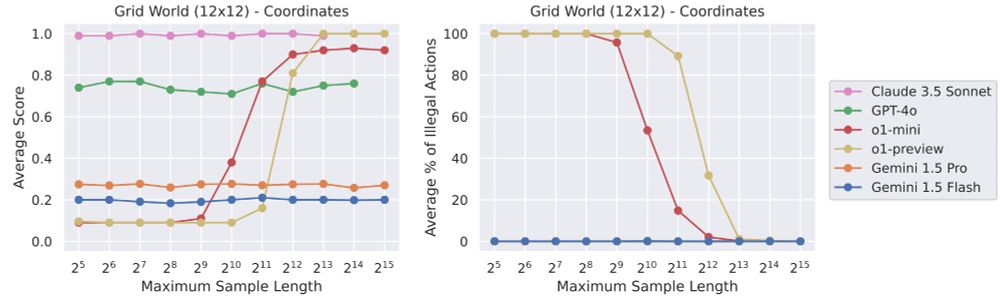

For o1-mini/o1-preview, performance crucially depends on having many (at least 8192) output tokens, even in simple decision-making tasks.

4/N

On some tasks, certain models show strong in-context imitation learning (e.g., Gemini 1.5 below). On other tasks, performance does not depend on the expert demonstration episodes.

3/N

- Phoenix (Atari)

- chess vs weakest version of Stockfish

- crosswords

- cheetah run (DM Control)

- grid world navigation

- tic-tac-toe vs random actions

We compare against a random baseline and an expert policy and use up to 512 expert demonstration episodes:

2/N
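
One way to read a comparison against a random baseline and an expert policy is to rescale each score so that 0 means random-level and 1 means expert-level. The thread does not say that scores are normalized this way, so treat the sketch below as an illustrative assumption; the function name `normalized_score` and the example numbers are hypothetical.

```python
def normalized_score(agent: float, random_baseline: float, expert: float) -> float:
    """Rescale a raw score so that 0.0 is random-level and 1.0 is expert-level.

    Assumption: this normalization is illustrative only and is not taken
    from the thread or the paper.
    """
    span = expert - random_baseline
    if span == 0:
        raise ValueError("expert and random baseline scores must differ")
    return (agent - random_baseline) / span


# Hypothetical numbers, chosen only to show the scale.
halfway = normalized_score(agent=5.0, random_baseline=0.0, expert=10.0)
```

A value below 0 would mean worse than random, and a value above 1 would mean better than the expert.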