Medical experts analyzed clinical dataset abstractions, uncovering issues like overuse of unspecified diagnoses.

This mirrors real-world updates to medical abstractions — showing how models can help us rethink human knowledge.

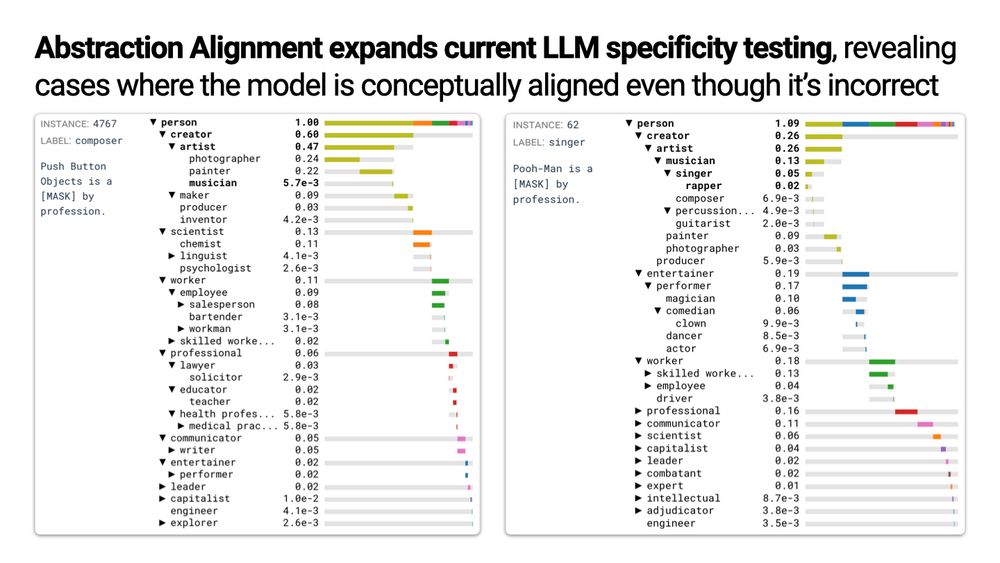

But Abstraction Alignment reveals that the concepts an LM considers are often abstraction-aligned, even when it’s wrong.

This helps separate surface-level errors from deeper conceptual misalignment.

🔗 https://vis.mit.edu/abstraction-alignment/

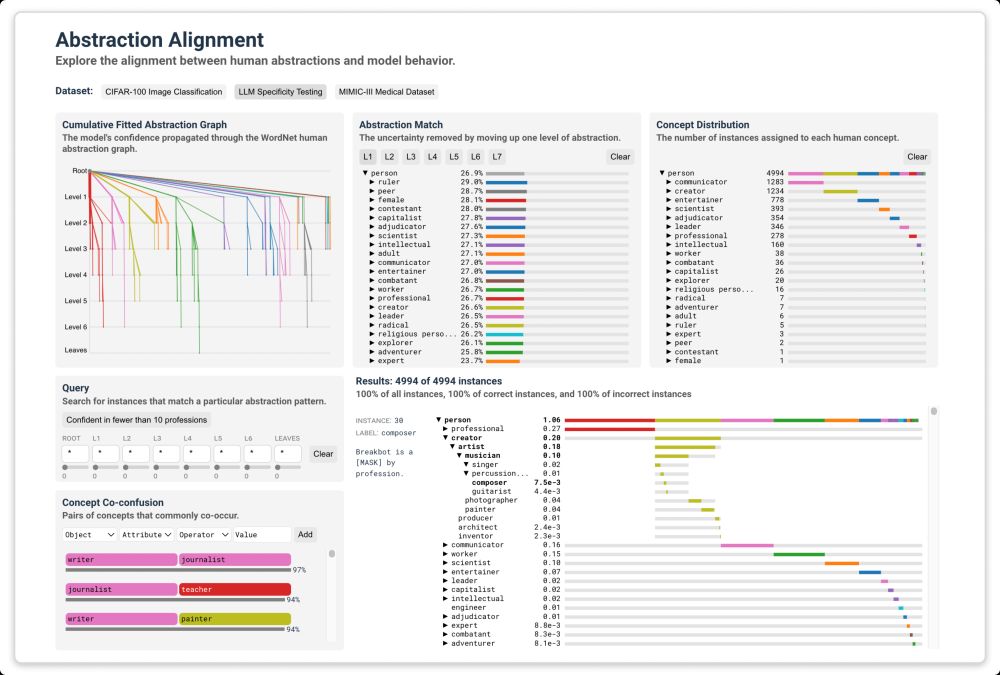

By propagating the model's uncertainty through an abstraction graph, we can see how well it aligns with human knowledge.

E.g., confusing oaks🌳 with palms🌴 is more aligned than confusing oaks🌳 with sharks🦈.
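
A minimal sketch of that propagation idea, not the paper's implementation: the toy graph, the `PARENT` mapping, and the `propagate` helper are all hypothetical names made up for illustration. It sums each leaf's probability into its ancestors, so a confusion between siblings concentrates at a shared parent while a confusion across branches stays split.

```python
from collections import defaultdict

# Hypothetical toy abstraction graph: each concept maps to its parent.
PARENT = {
    "oak": "tree",
    "palm": "tree",
    "shark": "fish",
    "tree": "entity",
    "fish": "entity",
}

def propagate(leaf_probs, parent=PARENT):
    """Add each leaf's probability to the leaf itself and all its ancestors."""
    mass = defaultdict(float)
    for leaf, p in leaf_probs.items():
        node = leaf
        while node is not None:
            mass[node] += p
            node = parent.get(node)  # None once we pass the root
    return dict(mass)

# Assumed model outputs: uncertainty split evenly between two classes.
oak_vs_palm = propagate({"oak": 0.5, "palm": 0.5})
oak_vs_shark = propagate({"oak": 0.5, "shark": 0.5})

print(oak_vs_palm["tree"])   # 1.0: the confusion resolves one level up
print(oak_vs_shark["tree"])  # 0.5: mass stays split between tree and fish
```

In this toy setup the oak/palm confusion puts all of its mass on "tree", while the oak/shark confusion only agrees at the root, which is one way to read "more aligned".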

But human reasoning is built on abstractions — relationships between concepts that help us generalize (wheels 🛞→ car 🚗).

To measure alignment, we must test if models learn human-like concepts AND abstractions.

Models can learn the right concepts but still be wrong in how they relate them.

✨Abstraction Alignment✨ evaluates whether models learn human-aligned conceptual relationships.

It reveals misalignments in LLMs💬 and medical datasets🏥.

🔗 arxiv.org/abs/2407.12543
