We compared human RTs to DeepSeek-R1’s CoT length across seven tasks: arithmetic (numeric & verbal), logic (syllogisms & ALE), relational reasoning, intuitive reasoning, and ARC (3/6)

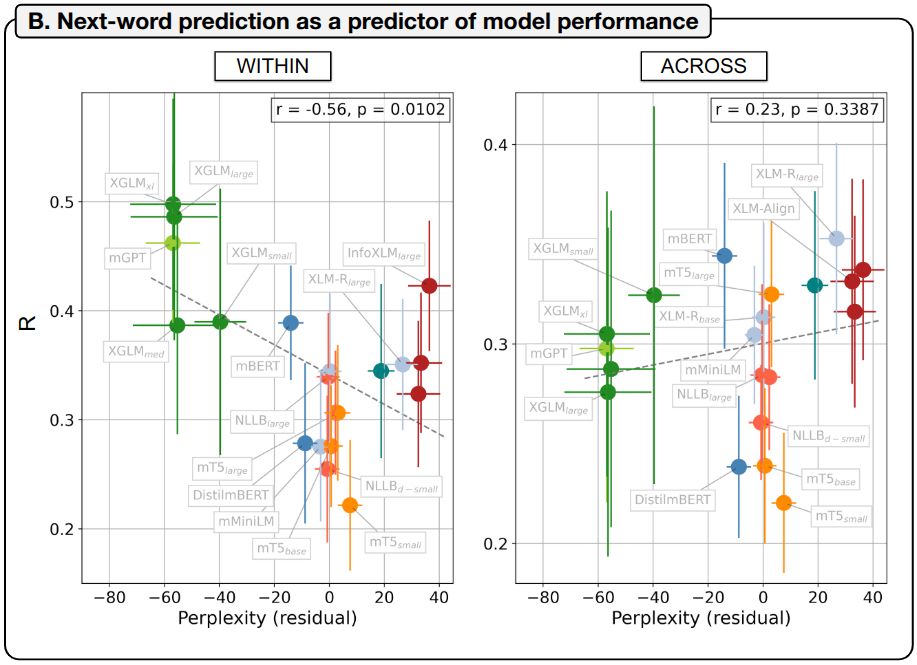

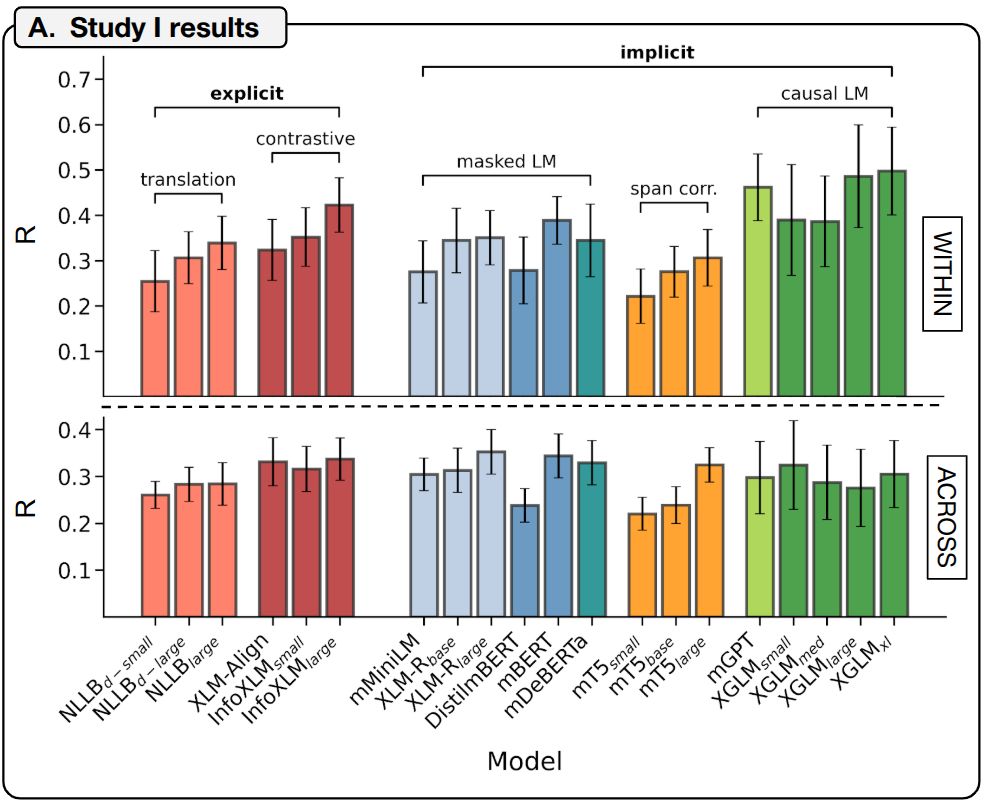

In the “within” condition, encoding performance is highest for models with good next-word prediction abilities (8/)

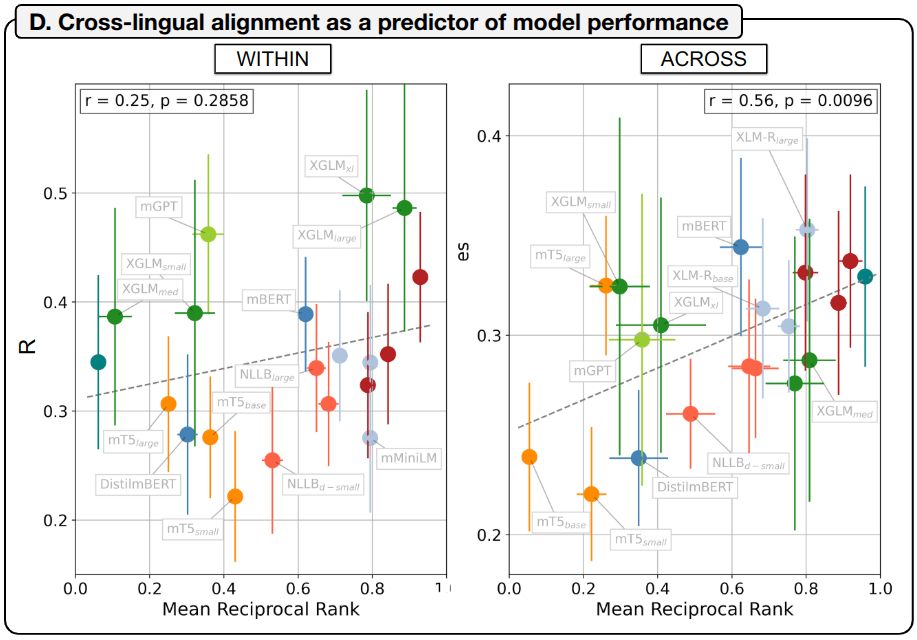

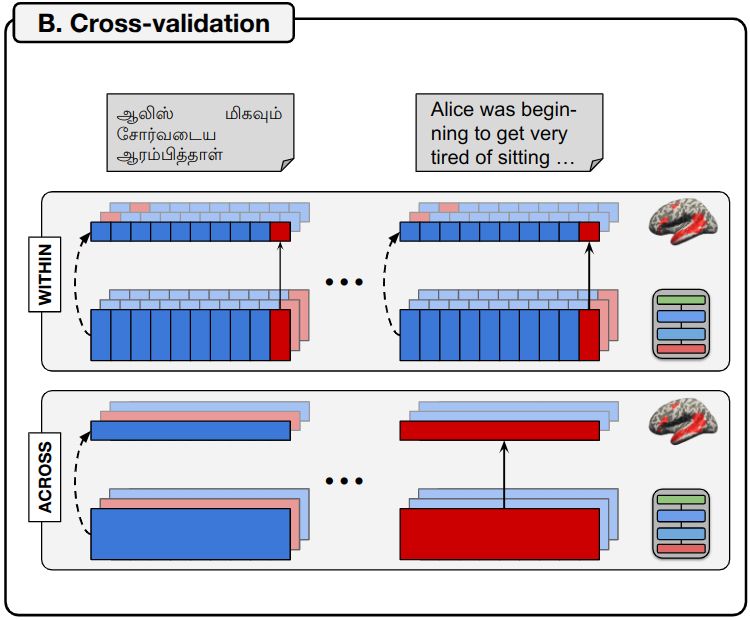

1️⃣ “within” encoding models, training and testing on data from a single language with cross-validation

2️⃣ “across” encoding models, training on N-1 languages and testing on the left-out language (5/)
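
The two evaluation schemes can be sketched as train/test splits. This is a minimal illustration, not the authors' code: the function names `within_splits` and `across_splits`, the language codes, the item count, and the number of folds are all assumptions made for the example.

```python
def within_splits(n_items, n_folds=5):
    """Contiguous k-fold splits over the items of a single language."""
    indices = list(range(n_items))
    for k in range(n_folds):
        # Fold boundaries computed with integer arithmetic so every item
        # lands in exactly one test fold, even if n_items % n_folds != 0.
        start = k * n_items // n_folds
        stop = (k + 1) * n_items // n_folds
        test = indices[start:stop]
        train = indices[:start] + indices[stop:]
        yield train, test


def across_splits(languages):
    """Leave-one-language-out: train on N-1 languages, test on the other."""
    for held_out in languages:
        train = [lang for lang in languages if lang != held_out]
        yield train, held_out


# Hypothetical language codes and item count, for illustration only.
languages = ["en", "fr", "zh"]
within = list(within_splits(10, n_folds=5))
across = list(across_splits(languages))
```

In the “within” case the encoding model only ever sees one language, so a good score shows that the embeddings predict responses for that language. In the “across” case the held-out language contributes no training data, so a good score requires the embedding-to-brain mapping to transfer between languages.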

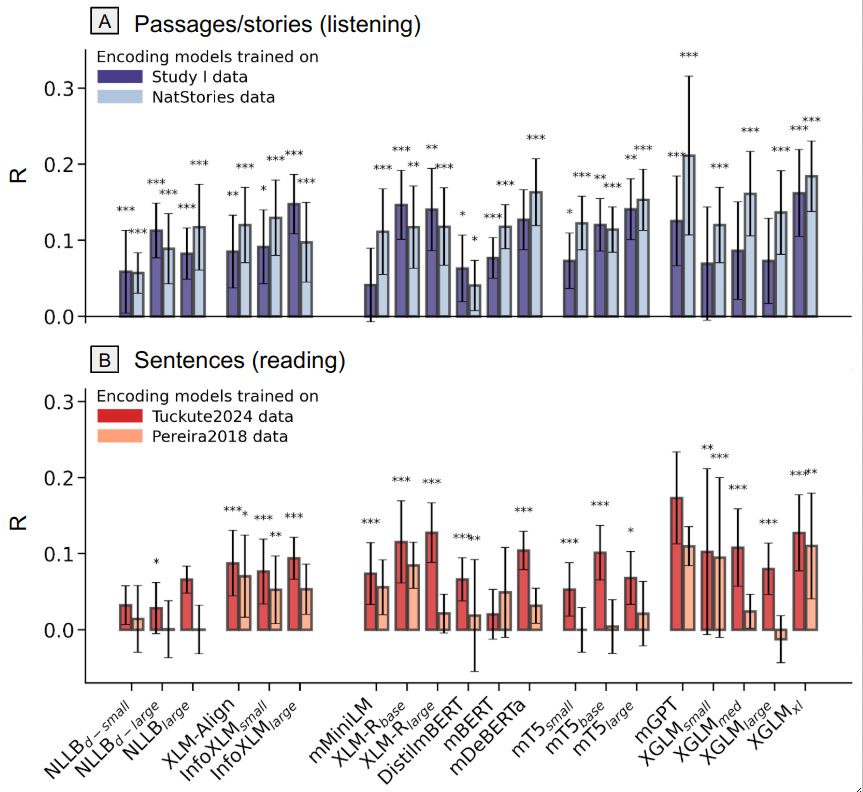

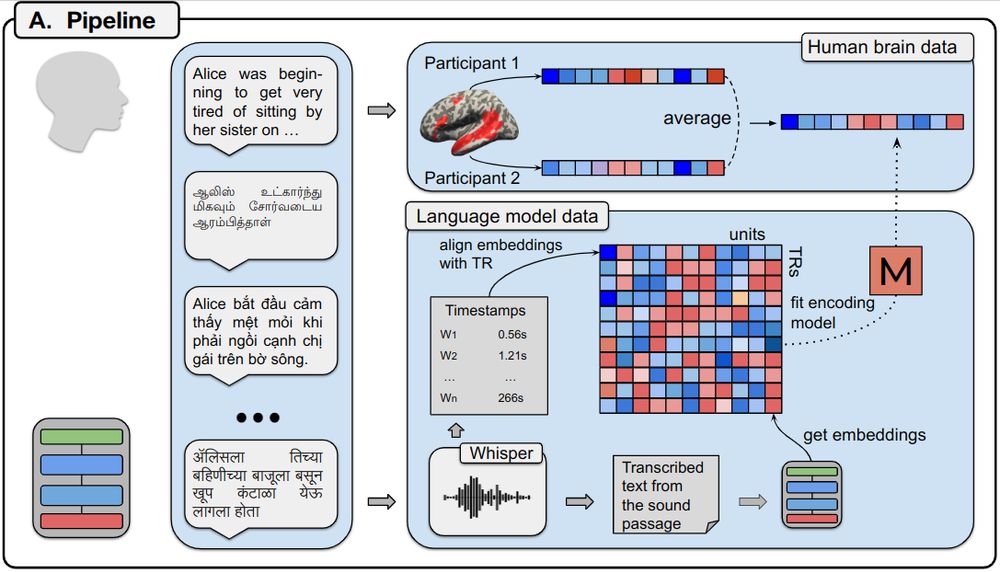

1️⃣ Present participants with auditory passages and record their brain responses in the language network

2️⃣ Extract contextualized word embeddings from multilingual LMs

3️⃣ Fit encoding models predicting brain activity from the embeddings (4/)
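
Step 3️⃣ can be sketched on synthetic data. The thread does not say which regression or score was used, so this sketch assumes ridge regression scored by the Pearson correlation between predicted and held-out responses. The "embeddings" and "brain responses" below are random numbers, and every name (`ridge_fit`, `predict`, `pearson`, `alpha`) is a placeholder for illustration.

```python
import random


def ridge_fit(X, y, alpha=1.0):
    """Solve (X^T X + alpha*I) w = X^T y by Gauss-Jordan elimination."""
    n_feat = len(X[0])
    # Augmented matrix [X^T X + alpha*I | X^T y].
    A = [
        [sum(row[i] * row[j] for row in X) + (alpha if i == j else 0.0)
         for j in range(n_feat)]
        + [sum(row[i] * target for row, target in zip(X, y))]
        for i in range(n_feat)
    ]
    for col in range(n_feat):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, n_feat), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n_feat):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][n_feat] / A[i][i] for i in range(n_feat)]


def predict(X, w):
    return [sum(x * wi for x, wi in zip(row, w)) for row in X]


def pearson(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


# Synthetic stand-ins: 200 "words" with 3-dimensional "embeddings" and one
# simulated response channel that is a noisy linear function of them.
rng = random.Random(0)
true_w = [0.5, -1.0, 2.0]
X = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(200)]
y = [sum(x * w for x, w in zip(row, true_w)) + rng.gauss(0, 0.1) for row in X]

# Fit on the first 150 items, score on the held-out 50.
w_hat = ridge_fit(X[:150], y[:150], alpha=1.0)
score = pearson(predict(X[150:], w_hat), y[150:])
```

With real data, `X` would hold the contextualized embeddings from step 2️⃣ and `y` the recorded responses from step 1️⃣, typically with one model fit per response channel and a regularization strength chosen by cross-validation.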
