By @eijkelboomfloor.bsky.social, @zmheiko.bsky.social, @sharvaree.bsky.social, @erikjbekkers.bsky.social, @wellingmax.bsky.social, @canaesseth.bsky.social*, @jwvdm.bsky.social*

📜 As above - paper TBA soon 😉

🧵8 / 8

By Andrés Guzmán-Cordero*, @eijkelboomfloor.bsky.social*, @jwvdm.bsky.social

📜 This paper will be shared soon - keep your eyes open! 🤩

🧵7 / 8

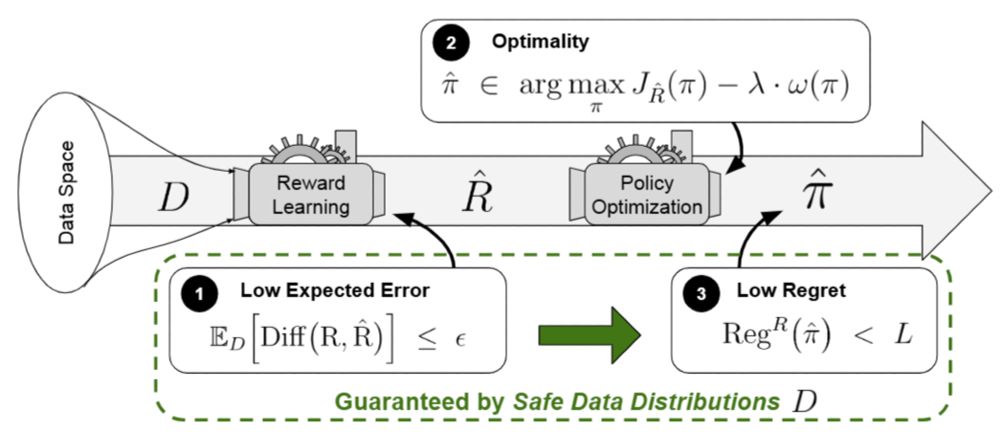

By Lukas Fluri*, @leon-lang.bsky.social*, Alessandro Abate, Patrick Forré, David Krueger, Joar Skalse

📜 arxiv.org/abs/2406.15753

🧵6 / 8

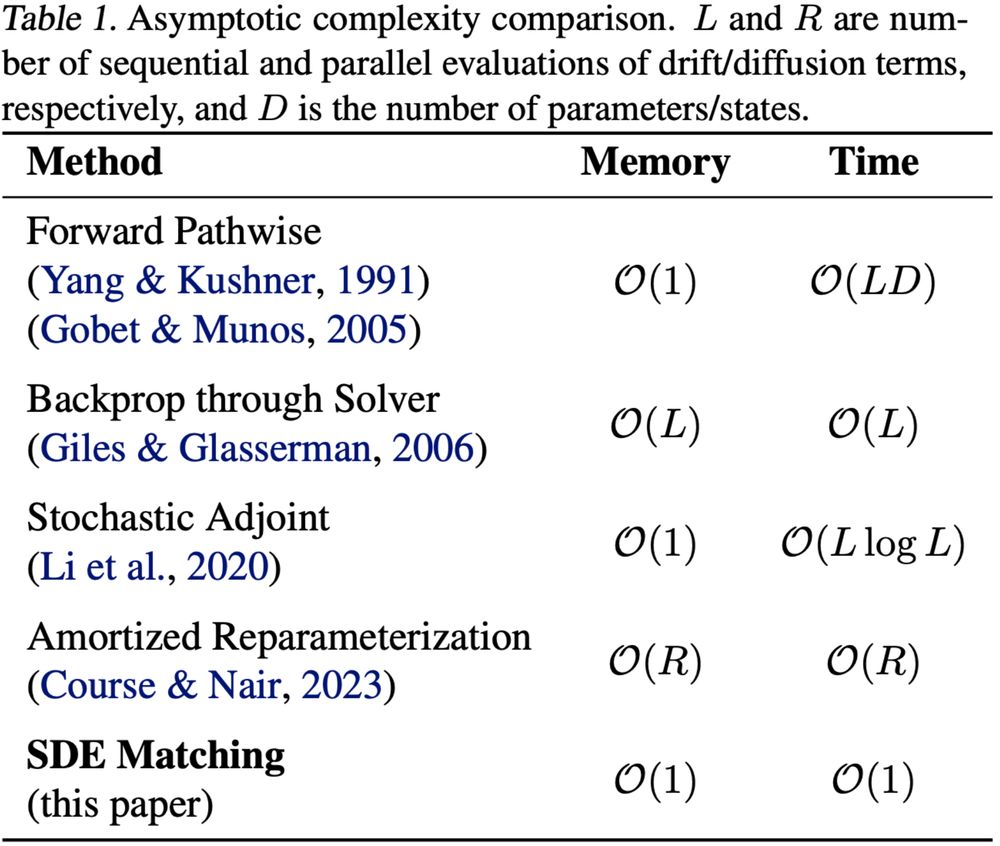

By @gbarto.bsky.social, Dmitry Vetrov, @canaesseth.bsky.social

📜 arxiv.org/abs/2502.02472

🧵5 / 8

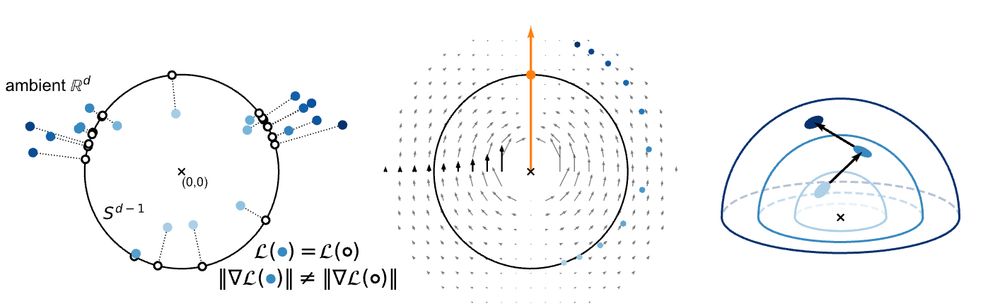

By Andrew Draganov, @sharvaree.bsky.social, Sebastian Damrich, Jan Niklas Böhm, Lucas Maes, Dmitry Kobak, @erikjbekkers.bsky.social

📜 arxiv.org/abs/2502.09252

🧵4 / 8

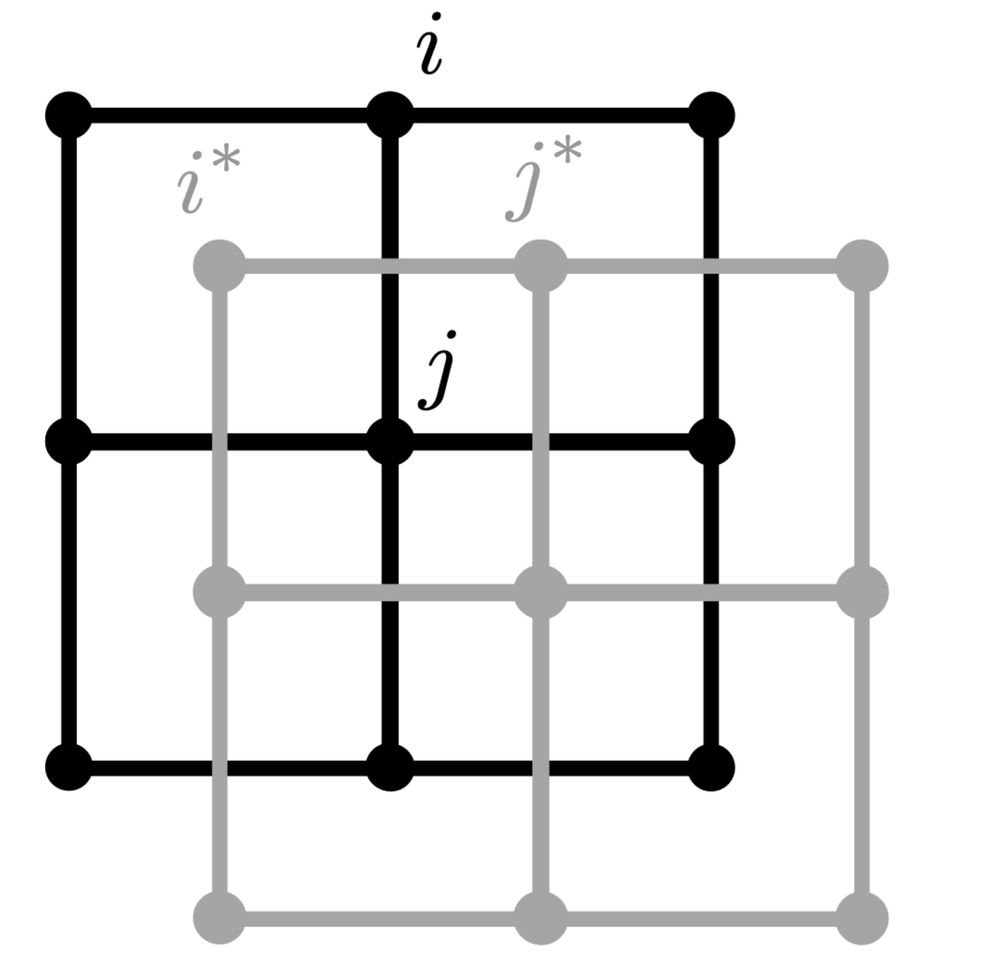

By Prateek Gupta, Andrea Ferrari, @nabiliqbal.bsky.social

📜 arxiv.org/abs/2411.04838

🧵3 / 8

By @maxxxzdn.bsky.social, @jwvdm.bsky.social, @wellingmax.bsky.social

📜 arxiv.org/abs/2502.17019

🧵2 / 8

Adam: A Method for Stochastic Optimization

Diederik P. Kingma, Jimmy Ba

Adam revolutionized neural network training, enabling significantly faster convergence and more stable training across a wide variety of architectures and tasks.

@matyasch.bsky.social @roelhulsman.bsky.social @rmassidda.it @danruxu.bsky.social 🔥
