• Larger, open datasets capturing linguistic, clinical, and demographic variability in SCZ to test generalization and modern ML architectures (e.g., LLMs, multimodal models).

• Focusing on fine-grained, clinically relevant features to enhance clinical applicability.

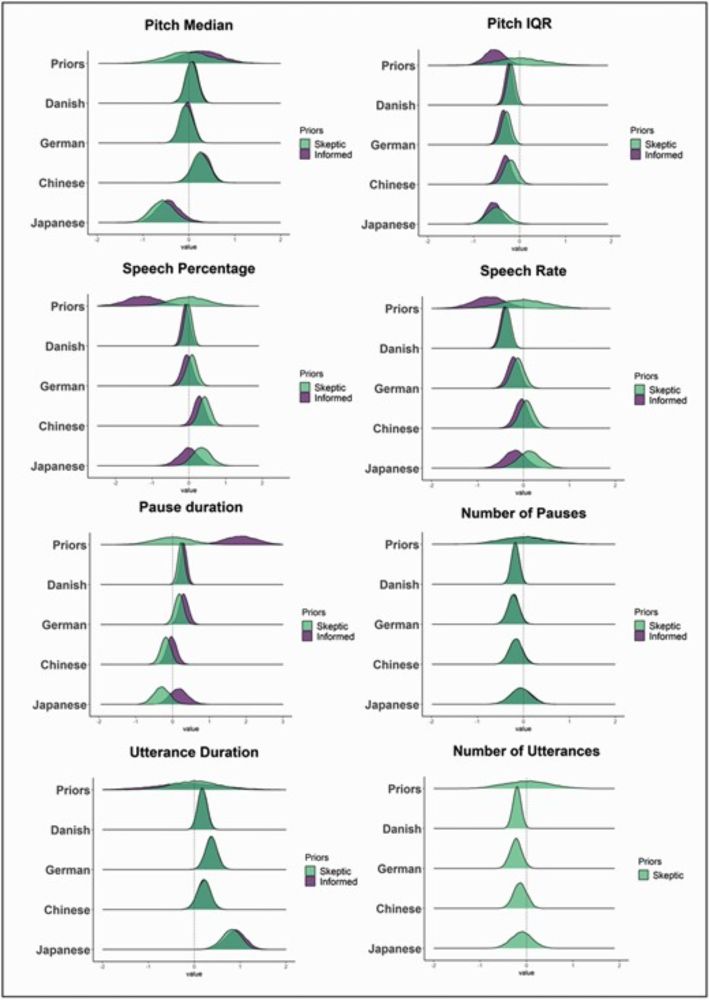

• Linguistic differences affect how SCZ symptoms relate to acoustic features

• Clinical heterogeneity limits robustness of ML models trained on small, homogeneous samples

• Models biased toward general features, not capturing diagnosis- or symptom-specific markers

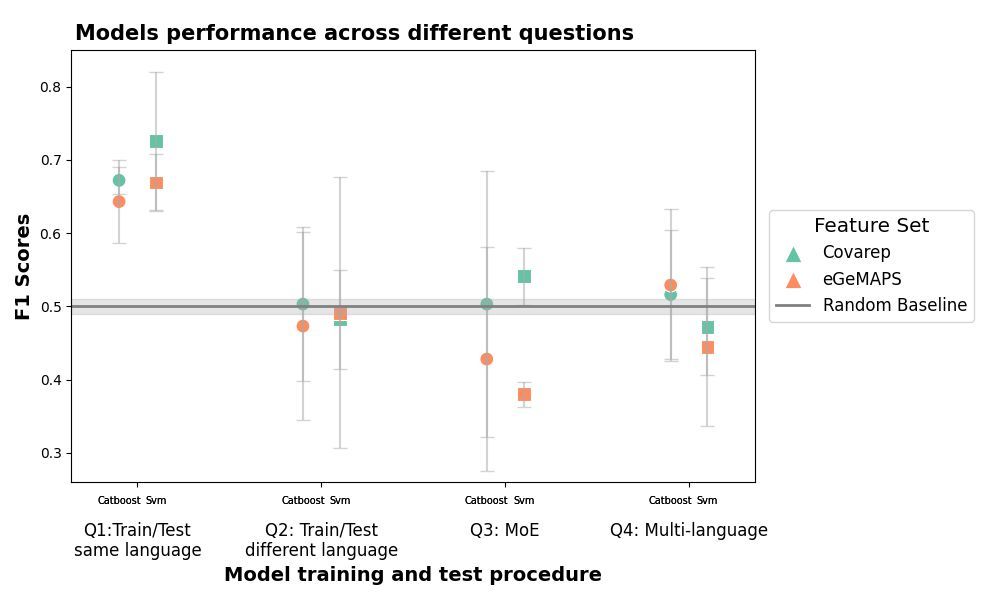

We tested two alternative approaches:

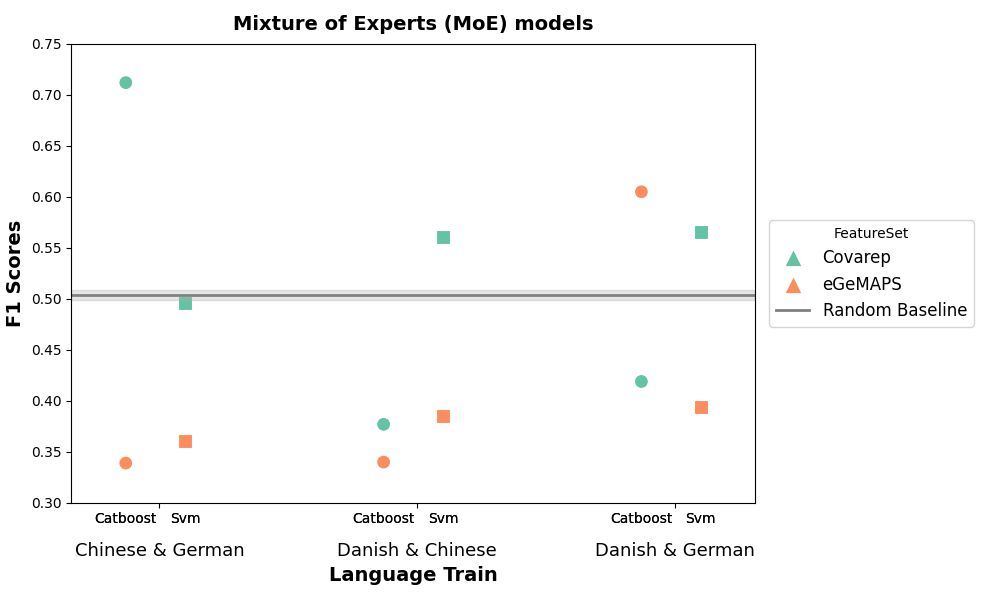

1) Mixture of Experts models (combining predictions from models trained on different languages, Plot 3).

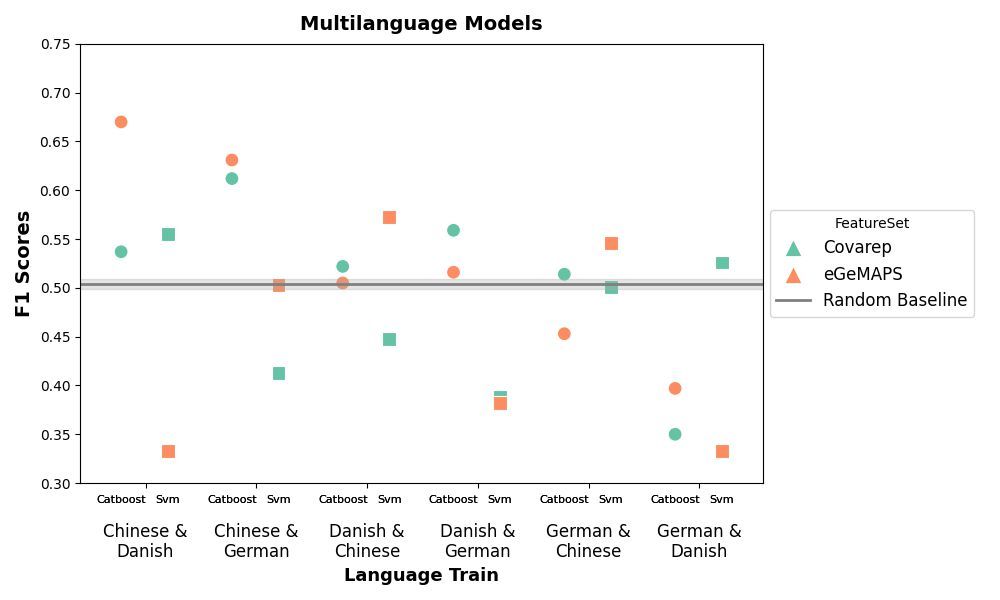

2) Multi-language training set (combining training data from multiple languages, Plot 4).

❌ Results: Still near chance level (F1 ~ 0.50).
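A minimal sketch of how the two approaches differ, under stated assumptions: the thread does not say how expert predictions were combined, so the example uses a plain weighted average of each expert's predicted probability (soft voting), which is only one possible choice. The function names `combine_expert_predictions` and `pool_training_sets` are hypothetical, and all numbers are invented for illustration.

```python
def combine_expert_predictions(expert_probs, weights=None):
    """Weighted average of per-expert probabilities for each test speaker.

    expert_probs: dict mapping language -> list of P(SCZ), one per speaker.
    weights: optional dict mapping language -> non-negative weight
             (uniform weights if None). Averaging is an assumption here,
             not necessarily the combination rule used in the study.
    """
    languages = sorted(expert_probs)
    if weights is None:
        weights = {lang: 1.0 for lang in languages}
    total = sum(weights[lang] for lang in languages)
    n_speakers = len(expert_probs[languages[0]])
    return [
        sum(weights[lang] * expert_probs[lang][i] for lang in languages) / total
        for i in range(n_speakers)
    ]


def pool_training_sets(datasets):
    """Concatenate (features, labels) pairs from several languages into one set."""
    features, labels = [], []
    for lang in sorted(datasets):
        x, y = datasets[lang]
        features.extend(x)
        labels.extend(y)
    return features, labels


# Toy values, invented for illustration only.
probs = {"danish": [0.8, 0.2], "german": [0.6, 0.4]}
combined = combine_expert_predictions(probs)

pooled_x, pooled_y = pool_training_sets({
    "danish": ([[0.1], [0.9]], [0, 1]),
    "german": ([[0.4]], [1]),
})
```

In the first approach each language keeps its own model and only the outputs are merged; in the second a single model is trained on the pooled data.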

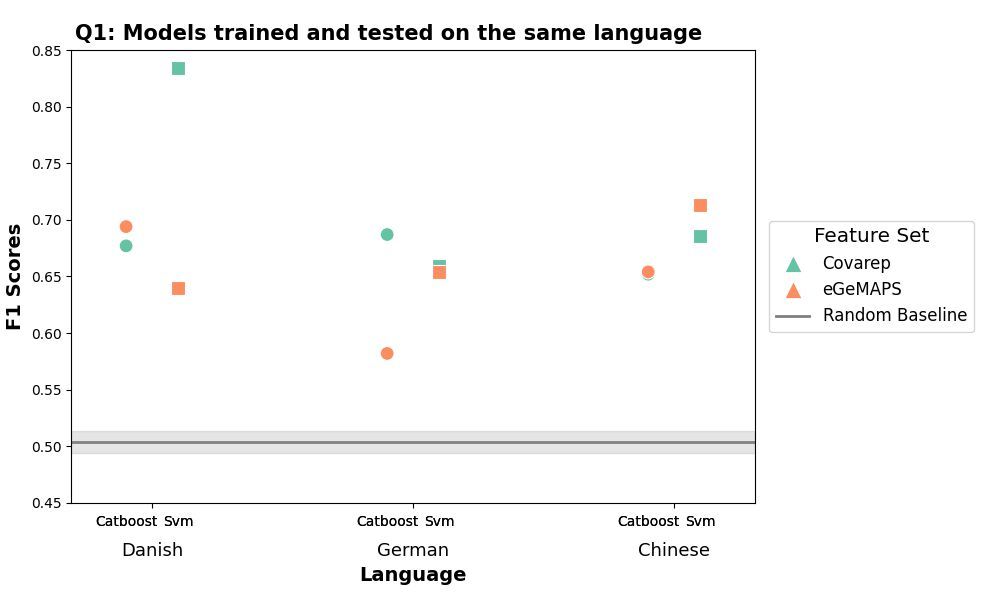

✔️#1: ML models perform well when trained/tested on the same language (F1 ~ 0.75) (Plot 1)

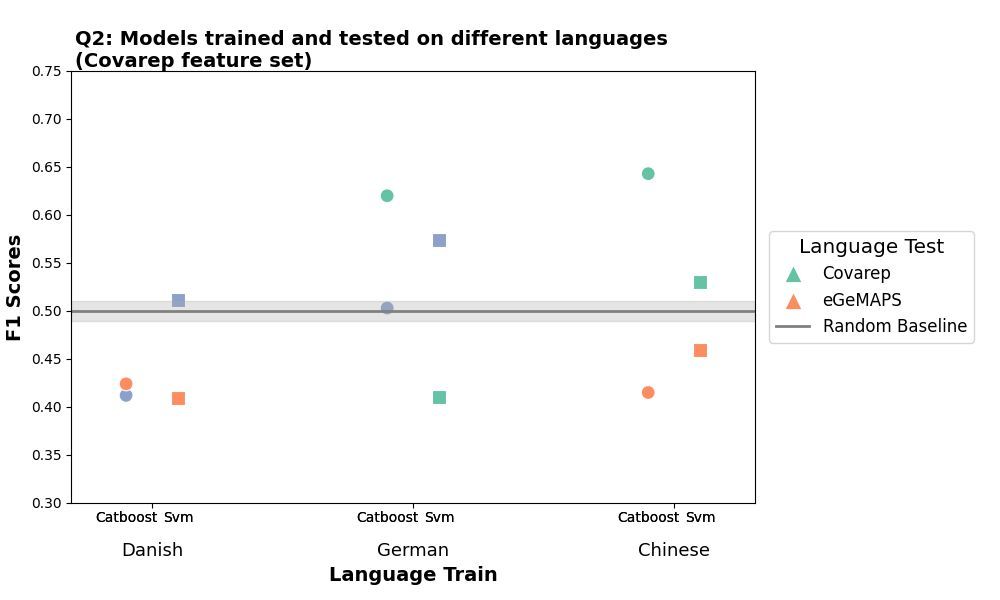

❌#2: But when trained/tested on different languages (e.g., Danish → Chinese), performance drops significantly (F1 ~ 0.50) (Plot 2).

Cross-linguistic generalizability remains a key challenge!
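The within- versus cross-language comparison can be sketched as a train-language by test-language grid of F1 scores. This is an illustration under assumptions, not the study's pipeline: it uses binary F1 with SCZ as the positive class (the thread does not say which F1 variant was reported), the helper names `f1_score` and `cross_language_f1` are hypothetical, and the "models" are toy threshold rules on a single made-up feature whose relation to diagnosis is deliberately reversed between the two languages.

```python
def f1_score(y_true, y_pred, positive=1):
    """Binary F1 for the positive class; returns 0.0 when there are no true positives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def cross_language_f1(models, test_sets):
    """Score every model on every language's test set.

    models: dict mapping training language -> callable(feature) -> label.
    test_sets: dict mapping test language -> (features, labels).
    Returns a dict keyed by (train_language, test_language).
    """
    scores = {}
    for train_lang, predict in models.items():
        for test_lang, (features, labels) in test_sets.items():
            preds = [predict(x) for x in features]
            scores[(train_lang, test_lang)] = f1_score(labels, preds)
    return scores


# Toy setup, invented for illustration: the same feature points in
# opposite directions in the two languages.
models = {
    "danish": lambda x: int(x < 0.5),
    "chinese": lambda x: int(x > 0.5),
}
test_sets = {
    "danish": ([0.2, 0.3, 0.7, 0.8], [1, 1, 0, 0]),
    "chinese": ([0.2, 0.3, 0.7, 0.8], [0, 0, 1, 1]),
}
scores = cross_language_f1(models, test_sets)
```

The diagonal entries (same train and test language) correspond to finding #1, and the off-diagonal entries to finding #2. The toy data exaggerate the gap on purpose.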

In this study, we built a large cross-linguistic speech corpus (Danish, German, Chinese) of patients with schizophrenia and controls to systematically test whether voice-based ML models predicting schizophrenia generalize across different languages, samples, and contexts: 🧵

But how well do voice-based machine-learning models generalize across languages and cultural contexts? How well do they generalize across samples with heterogeneous clinical features? Are they robust enough to biases to be clinically applicable?

🎙️ Schizophrenia is associated with atypical voice patterns, making voice a promising candidate biomarker. Voice-based ML models can indeed predict diagnosis and symptoms, and track socio-cognitive and motor features of SCZ, with high accuracy.