Alexandra Witt

@alexthewitty.bsky.social

Cognitive computational (neuro-)science PhD student at Uni Tübingen 🧠🤖 she/her

Taken together, we find that learning rate biases are more flexible than expected, and especially flexible (and adaptive) in social learning settings. Thanks for reading this far, and since you already put in all this effort, consider giving the full paper a read! :)

May 26, 2025 at 11:30 AM

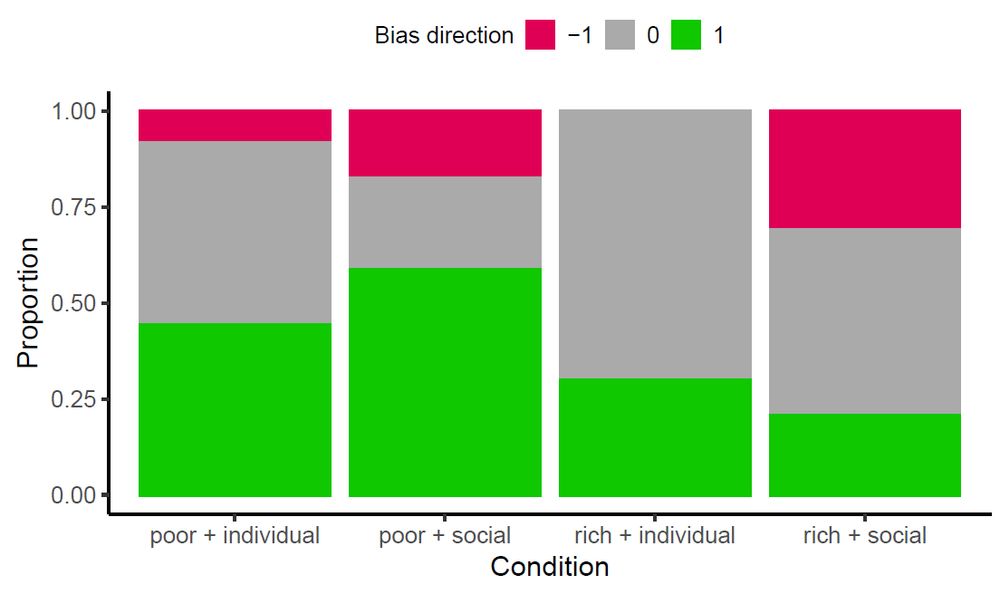

We also find that bias isn't as stable as we expected: while there is still a significant positivity bias in individual + rich, we also find a high proportion of unbiased learners. Participants changed their bias between conditions, rather than being consistently positivity- (or negativity-) biased.

May 26, 2025 at 11:30 AM

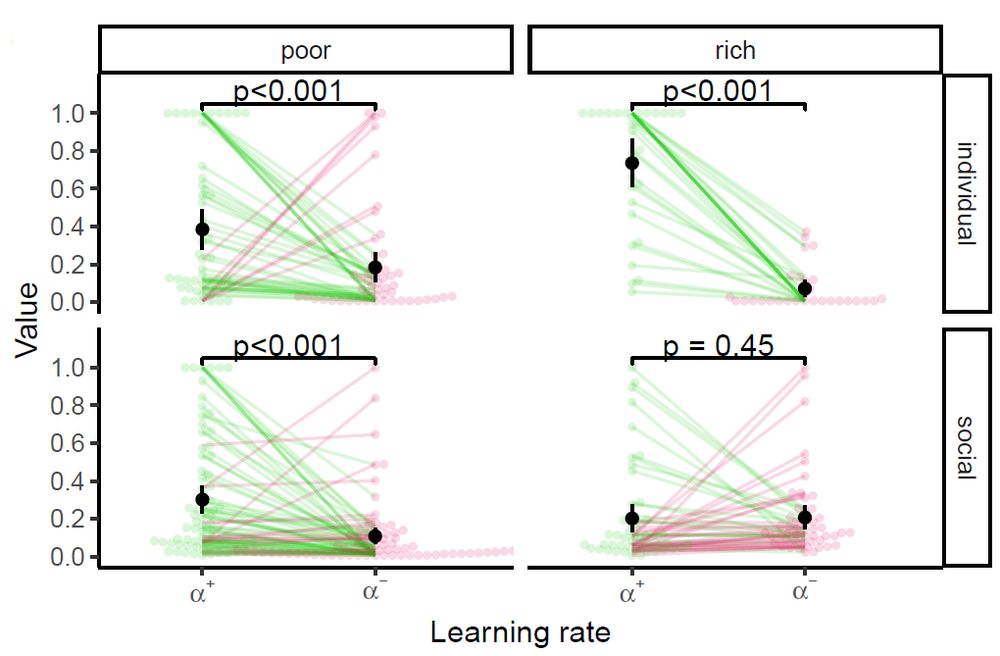

Participants were significantly positivity-biased in both individual conditions (matching previous findings), but in social learning they were only positivity-biased in poor environments, while we found no significant bias in rich environments (where positivity bias is maladaptive).

May 26, 2025 at 11:30 AM

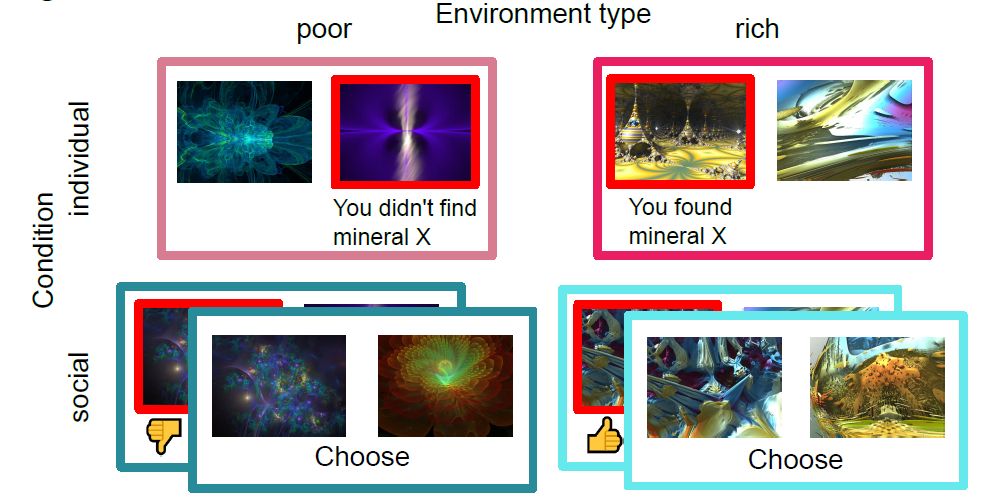

We then ran a within-subjects 2x2 design as an online experiment. Each participant completed two-armed bandits in rich and poor environments, learning either socially or individually.

May 26, 2025 at 11:30 AM

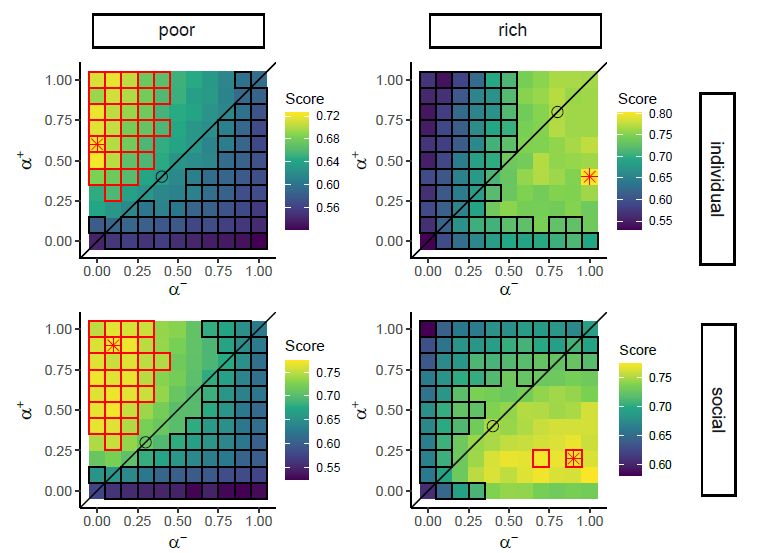

Which bias is adaptive depends on what kind of environment you're in: in poor environments (rare rewards), a positivity bias is beneficial, while the opposite is true in rich environments. Our simulations show that this holds regardless of individual or social contexts.

May 26, 2025 at 11:30 AM
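
To make the rich/poor comparison concrete, here is a rough sketch of how such a simulation could be set up. It is not the simulation from the paper: the reward probabilities, learning rates, softmax temperature `beta`, and the helper name `simulate` are all assumptions chosen for illustration, and whether the pattern described in the post shows up will depend on those choices.

```python
import math
import random


def simulate(p_arms, alpha_pos, alpha_neg, n_trials=100, n_agents=200, beta=5.0, seed=0):
    """Fraction of choices to the better arm of a two-armed Bernoulli bandit.

    All parameter values are illustrative assumptions, not taken from the paper.
    """
    rng = random.Random(seed)
    best = max(range(2), key=lambda a: p_arms[a])
    correct = 0
    for _ in range(n_agents):
        q = [0.5, 0.5]  # initial value estimates (assumed)
        for _ in range(n_trials):
            # Softmax choice between the two arms
            p_choose_1 = 1.0 / (1.0 + math.exp(-beta * (q[1] - q[0])))
            arm = 1 if rng.random() < p_choose_1 else 0
            reward = 1.0 if rng.random() < p_arms[arm] else 0.0
            # Asymmetric update: separate learning rates by prediction error sign
            delta = reward - q[arm]
            alpha = alpha_pos if delta > 0 else alpha_neg
            q[arm] += alpha * delta
            correct += int(arm == best)
    return correct / (n_agents * n_trials)


# Hypothetical environments and learners
environments = {"poor": (0.1, 0.3), "rich": (0.7, 0.9)}
learners = {"positivity": (0.4, 0.1), "negativity": (0.1, 0.4)}

results = {
    (env, name): simulate(p_arms, alpha_pos, alpha_neg)
    for env, p_arms in environments.items()
    for name, (alpha_pos, alpha_neg) in learners.items()
}
for key, accuracy in sorted(results.items()):
    print(key, round(accuracy, 3))
```

Comparing the two learners within each environment gives a quick read on which bias does better under these assumed settings.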

However, one of the major perks of social learning is supposed to be that you can learn from others' mistakes, so you don't have to repeat them yourself. This would imply we should be negativity biased when learning from others. So does the stable positivity bias hold regardless?

May 26, 2025 at 11:30 AM

In individual learning, prior research often reports a stable positivity bias: learning rates for positive prediction errors are higher than those for negative ones (i.e. we update our expectations more strongly after better-than-expected outcomes than after worse-than-expected ones).

May 26, 2025 at 11:30 AM
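
As a minimal sketch of what "separate learning rates" means, assuming a standard Rescorla-Wagner style value update (the function name `update_value` and the numbers are hypothetical, not taken from the paper):

```python
def update_value(q, reward, alpha_pos, alpha_neg):
    """One value update with separate learning rates for positive and
    negative prediction errors."""
    delta = reward - q  # prediction error
    alpha = alpha_pos if delta > 0 else alpha_neg
    return q + alpha * delta


# A positivity-biased learner has alpha_pos > alpha_neg (values are assumptions)
q = 0.5
after_win = update_value(q, 1.0, alpha_pos=0.4, alpha_neg=0.1)
after_loss = update_value(q, 0.0, alpha_pos=0.4, alpha_neg=0.1)
print(after_win - q, q - after_loss)  # the upward step is larger than the downward one
```

With the same size of prediction error in both directions, the estimate moves further after the win than after the loss, which is the asymmetry the post describes.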

Secret 8/7 -- if you don't have access to PNAS, the preprint is also still around osf.io/preprints/ps... 🔓

September 23, 2024 at 12:00 PM

Thank you, and good catch! Looks like my 🎉 got parsed into the link by accident. Here's the paper: www.pnas.org/doi/10.1073/... :)

September 23, 2024 at 11:59 AM

7/7 I'm beyond thrilled that this project is officially published now, and forever grateful to my collaborators, without whom this would've been impossible -- @watarutoyokawa.bsky.social, Kevin Lala, Wolfgang Gaissmaier, and @thecharleywu.bsky.social.

Now off with you! Go read the full paper!

September 23, 2024 at 10:45 AM

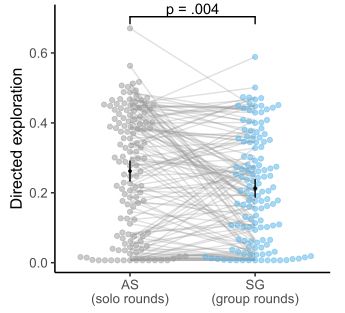

6/7 Participants used social information as an exploration tool: when it was possible to learn from others, they reduced the amount of directed individual exploration they did -- this might be resource-rational, given that exploration has been found to be cognitively costly.

September 23, 2024 at 10:44 AM

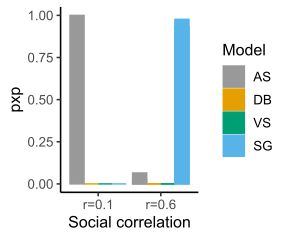

5/7 Participants adapted their reliance on social information to the task -- when we lowered social correlations, they stopped using social information altogether.

September 23, 2024 at 10:44 AM

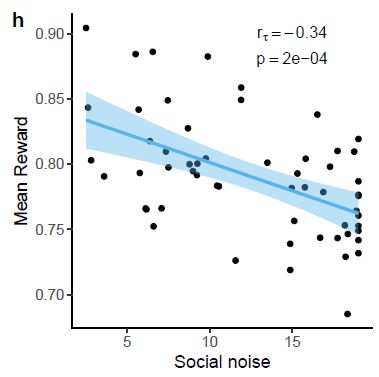

4/7 Participants treated social information as noisy individual information, following the predictions of our Social Generalization model. They also performed better when they relied on social information, using it to their advantage.

September 23, 2024 at 10:43 AM

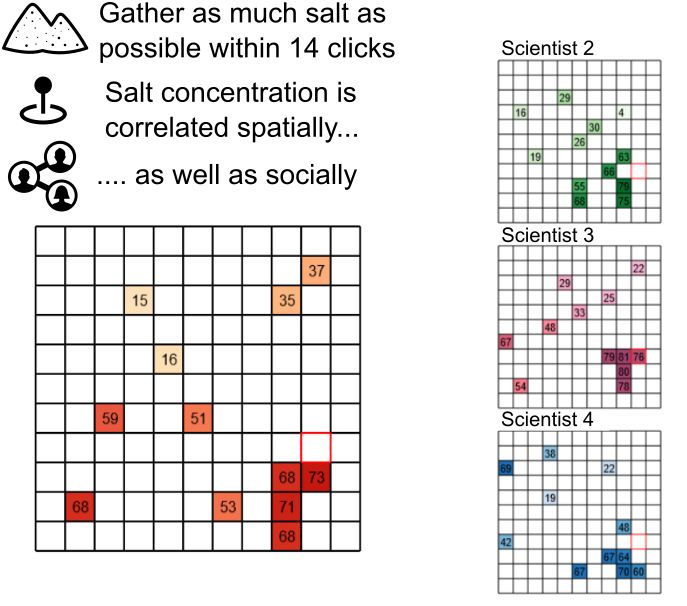
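
One way to picture "social information as noisy individual information" is a precision-weighted Gaussian update in which social observations carry extra noise. This is only a sketch of the intuition, assuming a simple Kalman-style mean update; it is not the Social Generalization model itself, and `gaussian_update`, `OBS_NOISE`, and `SOCIAL_EXTRA_NOISE` are hypothetical names and values.

```python
def gaussian_update(mean, var, obs, obs_var):
    """Update a Gaussian belief (mean, var) with one noisy observation."""
    gain = var / (var + obs_var)  # how much weight the observation gets
    new_mean = mean + gain * (obs - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var


prior_mean, prior_var = 0.0, 1.0
OBS_NOISE = 0.1           # assumed noise on one's own observations
SOCIAL_EXTRA_NOISE = 0.9  # assumed extra noise added to social observations

# The same observed reward, seen firsthand vs. via another person
own = gaussian_update(prior_mean, prior_var, 1.0, OBS_NOISE)
social = gaussian_update(prior_mean, prior_var, 1.0, OBS_NOISE + SOCIAL_EXTRA_NOISE)
print(own, social)
```

Under these assumptions the social observation still shifts the belief in the same direction, just by less, and it leaves more uncertainty behind. Raising the extra noise further would push its influence toward zero.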

3/7 To investigate how humans handle settings where preferences are non-identical, we ran 3 experiments using the socially correlated bandit -- a task in which social information is helpful, but imitation is not optimal.

September 23, 2024 at 10:42 AM

2/7 In prior research on how we can computationally model social learning, participants and demonstrators generally shared the exact same goal, making imitation optimal. In real life, however, you might not want to blindly imitate any person you come across.

September 23, 2024 at 10:41 AM
