Alan Ramponi

@alanramponi.bsky.social

Senior researcher at DH@FBK. Natural language processing, language variation and diversity, social impact of NLP. Prev: ITU Cph, Unitn.

🇮🇹, he/him. #NLP #NLProc

Website: alanramponi.github.io

🇮🇹, he/him. #NLP #NLProc

Website: alanramponi.github.io

Further information:

🌐 Website: noisy-text.github.io/2025/

📕 Proceedings: aclanthology.org/volumes/2025...

Organizers: JinYeong Bak, Rob van der Goot, Hyeju Jang, Weerayut Buaphet, Alan Ramponi, Wei Xu, Alan Ritter

See you tomorrow!

🌐 Website: noisy-text.github.io/2025/

📕 Proceedings: aclanthology.org/volumes/2025...

Organizers: JinYeong Bak, Rob van der Goot, Hyeju Jang, Weerayut Buaphet, Alan Ramponi, Wei Xu, Alan Ritter

See you tomorrow!

May 2, 2025 at 9:39 PM

Further information:

🌐 Website: noisy-text.github.io/2025/

📕 Proceedings: aclanthology.org/volumes/2025...

Organizers: JinYeong Bak, Rob van der Goot, Hyeju Jang, Weerayut Buaphet, Alan Ramponi, Wei Xu, Alan Ritter

See you tomorrow!

🌐 Website: noisy-text.github.io/2025/

📕 Proceedings: aclanthology.org/volumes/2025...

Organizers: JinYeong Bak, Rob van der Goot, Hyeju Jang, Weerayut Buaphet, Alan Ramponi, Wei Xu, Alan Ritter

See you tomorrow!

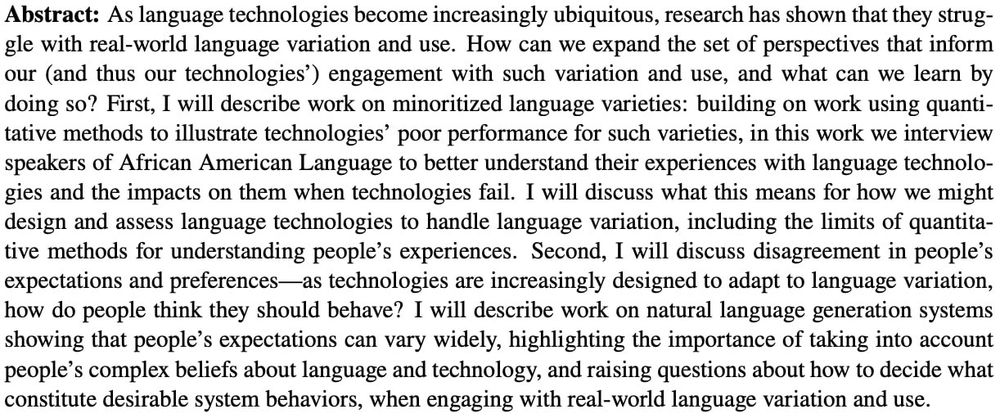

Keynote talk 2️⃣

🗣️ Su Lin Blodgett (Microsoft Research Montréal)

🕤 May 3rd, 16:00 (UTC-6 time)

✨ What Can We Learn from Perspectives on Noisy

User-Generated Text?

🗣️ Su Lin Blodgett (Microsoft Research Montréal)

🕤 May 3rd, 16:00 (UTC-6 time)

✨ What Can We Learn from Perspectives on Noisy

User-Generated Text?

May 2, 2025 at 9:39 PM

Keynote talk 2️⃣

🗣️ Su Lin Blodgett (Microsoft Research Montréal)

🕤 May 3rd, 16:00 (UTC-6 time)

✨ What Can We Learn from Perspectives on Noisy

User-Generated Text?

🗣️ Su Lin Blodgett (Microsoft Research Montréal)

🕤 May 3rd, 16:00 (UTC-6 time)

✨ What Can We Learn from Perspectives on Noisy

User-Generated Text?

Keynote talk 1️⃣

🗣️ Verena Blaschke (LMU Munich & MCML)

🕤 May 3rd, 09:30 (UTC-6 time)

✨ Beyond “noisy” text: How (and why) to process dialect data

🗣️ Verena Blaschke (LMU Munich & MCML)

🕤 May 3rd, 09:30 (UTC-6 time)

✨ Beyond “noisy” text: How (and why) to process dialect data

May 2, 2025 at 9:39 PM

Keynote talk 1️⃣

🗣️ Verena Blaschke (LMU Munich & MCML)

🕤 May 3rd, 09:30 (UTC-6 time)

✨ Beyond “noisy” text: How (and why) to process dialect data

🗣️ Verena Blaschke (LMU Munich & MCML)

🕤 May 3rd, 09:30 (UTC-6 time)

✨ Beyond “noisy” text: How (and why) to process dialect data

👋 great initiative!

March 12, 2025 at 7:12 AM

👋 great initiative!

We release data, code, and the full annotation guidelines to encourage extensions to cover new languages, topics, and additional perspectives 🗣️

See you in Albuquerque! 🏜️

See you in Albuquerque! 🏜️

February 25, 2025 at 1:40 PM

We release data, code, and the full annotation guidelines to encourage extensions to cover new languages, topics, and additional perspectives 🗣️

See you in Albuquerque! 🏜️

See you in Albuquerque! 🏜️

A manual analysis of LLMs’ outputs unveils and quantifies different types of issues that call for future research to make generated responses less brittle in complex setups such as ours 🕵️♀️

Check the paper for full results, analyses, discussion and insights! 📝

8/8

Check the paper for full results, analyses, discussion and insights! 📝

8/8

February 25, 2025 at 1:40 PM

A manual analysis of LLMs’ outputs unveils and quantifies different types of issues that call for future research to make generated responses less brittle in complex setups such as ours 🕵️♀️

Check the paper for full results, analyses, discussion and insights! 📝

8/8

Check the paper for full results, analyses, discussion and insights! 📝

8/8

Our results show that fallacy detection, which involves capturing lexical, semantic, and even pragmatic aspects of communication, is still far from being addressed with LLMs in a zero-shot setup, especially if we aim at embracing human label variation

7/🧵

7/🧵

February 25, 2025 at 1:40 PM

Our results show that fallacy detection, which involves capturing lexical, semantic, and even pragmatic aspects of communication, is still far from being addressed with LLMs in a zero-shot setup, especially if we aim at embracing human label variation

7/🧵

7/🧵

We design multi-task fallacy detection baselines and assess LLMs in a zero-shot setting in four fallacy detection setups of increasing complexity: at the post- or the span-level, and using either fallacy macro-categories or the full inventory

6/🧵

6/🧵

February 25, 2025 at 1:40 PM

We design multi-task fallacy detection baselines and assess LLMs in a zero-shot setting in four fallacy detection setups of increasing complexity: at the post- or the span-level, and using either fallacy macro-categories or the full inventory

6/🧵

6/🧵

In the paper, we provide in-depth analyses and insights into the full annotation process 📝

We also conducted experiments by simultaneously accounting for multiple test sets (beyond “single ground truth”), partial span matches, overlaps, and the varying severity of labeling errors

5/🧵

We also conducted experiments by simultaneously accounting for multiple test sets (beyond “single ground truth”), partial span matches, overlaps, and the varying severity of labeling errors

5/🧵

February 25, 2025 at 1:40 PM

In the paper, we provide in-depth analyses and insights into the full annotation process 📝

We also conducted experiments by simultaneously accounting for multiple test sets (beyond “single ground truth”), partial span matches, overlaps, and the varying severity of labeling errors

5/🧵

We also conducted experiments by simultaneously accounting for multiple test sets (beyond “single ground truth”), partial span matches, overlaps, and the varying severity of labeling errors

5/🧵

Due to the complexity of the task, we avoided crowdsourcing and instead devised multiple rounds of annotation and discussion among two expert annotators. We minimize annotation errors whilst keeping signals of human label variation on the whole dataset

⚠️ Natural disagreement is not noise!

4/🧵

⚠️ Natural disagreement is not noise!

4/🧵

February 25, 2025 at 1:40 PM

Due to the complexity of the task, we avoided crowdsourcing and instead devised multiple rounds of annotation and discussion among two expert annotators. We minimize annotation errors whilst keeping signals of human label variation on the whole dataset

⚠️ Natural disagreement is not noise!

4/🧵

⚠️ Natural disagreement is not noise!

4/🧵

Faina covers public discourse on 🔄 migration, 🌱 climate change, and 🏥 public health over a ⌛️ 4-year time frame (2019-22). It opens opportunities for modeling multiple ground truths at a the fine-grained level of text segments and benchmarking fallacy detection methods across topics and time

3/🧵

3/🧵

February 25, 2025 at 1:40 PM

Faina covers public discourse on 🔄 migration, 🌱 climate change, and 🏥 public health over a ⌛️ 4-year time frame (2019-22). It opens opportunities for modeling multiple ground truths at a the fine-grained level of text segments and benchmarking fallacy detection methods across topics and time

3/🧵

3/🧵

We introduce Faina, the first fallacy detection dataset that embraces multiple plausible answers and natural disagreement. Faina includes >11K human-labeled span annotations with overlaps across 20 fallacy types on social media posts in Italian

*Faina (en: “beech marten”) 🙂

2/🧵

*Faina (en: “beech marten”) 🙂

2/🧵

February 25, 2025 at 1:40 PM

We introduce Faina, the first fallacy detection dataset that embraces multiple plausible answers and natural disagreement. Faina includes >11K human-labeled span annotations with overlaps across 20 fallacy types on social media posts in Italian

*Faina (en: “beech marten”) 🙂

2/🧵

*Faina (en: “beech marten”) 🙂

2/🧵