- Generated papers score 1.45 points below average NeurIPS submissions

- AI reviewers consistently rate papers 2.3 points higher than human reviewers

- Requires active human oversight for optimal results

- o1-preview: Highest scores in usefulness and clarity

- o1-mini: Best experimental quality scores

- GPT-4o: Most cost-efficient, fastest processing

- Speed: Complete workflow in 1,165.4 seconds using GPT-4o

- Cost: $2.33 per paper (84% reduction from traditional methods)

- Quality: Papers score 4.38/10 with human collaboration
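
To put the speed and cost figures in more familiar terms, here is a quick back-of-the-envelope sketch. It assumes that "84% reduction" means the $2.33 figure is 16% of whatever the traditional baseline cost was; that baseline is not stated here, so the number it derives is an implied estimate, not a reported one.

```python
# Figures quoted in the list above.
workflow_seconds = 1165.4
cost_per_paper_usd = 2.33
reduction = 0.84  # "84% reduction from traditional methods"

# Convert the runtime to minutes.
workflow_minutes = workflow_seconds / 60

# Assumption: "84% reduction" means cost = baseline * (1 - 0.84),
# so the implied baseline is cost / 0.16.
implied_baseline_usd = cost_per_paper_usd / (1 - reduction)

print(f"Runtime: {workflow_minutes:.1f} minutes")
print(f"Implied baseline cost: ${implied_baseline_usd:.2f} per paper")
```

Under that reading, the full workflow takes a bit under 20 minutes, and the implied baseline works out to roughly $14.56 per paper.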

- PhD Agent: Handles literature reviews and research planning

- Postdoc Agents: Refine experimental approaches

- ML Engineer Agents: Manage technical implementation

- Professor Agents: Evaluate research outputs

- Creates a virtual research team with specialized AI agents

- Focuses on enhancing (not replacing) human researchers

- Enables flexible compute allocation based on resources and needs

Pick a recent announcement you care about and try the process. You might be surprised by what you discover when you combine these different sources.

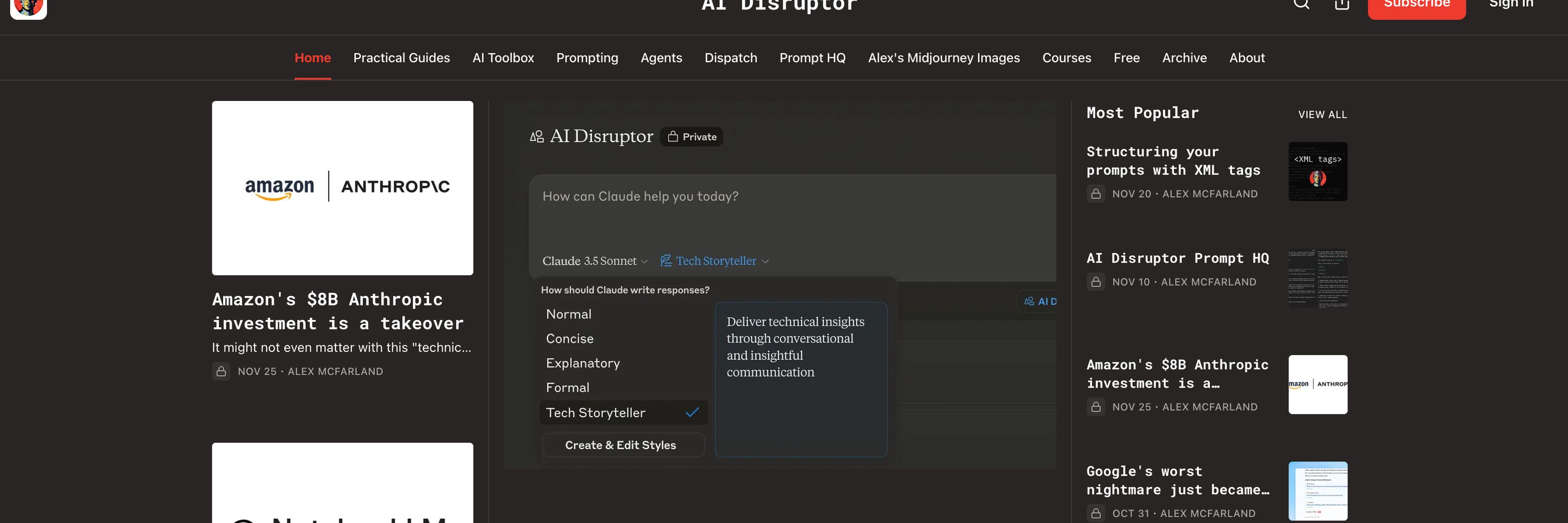

By feeding in structured community discussions (I'll show you exactly how in the video), you tap into the collective expertise of developers, industry insiders, and power users who spot implications that journalists miss.

I specifically look for articles with unique angles - not basic summaries.

This second layer helps you understand market implications and read between the lines.

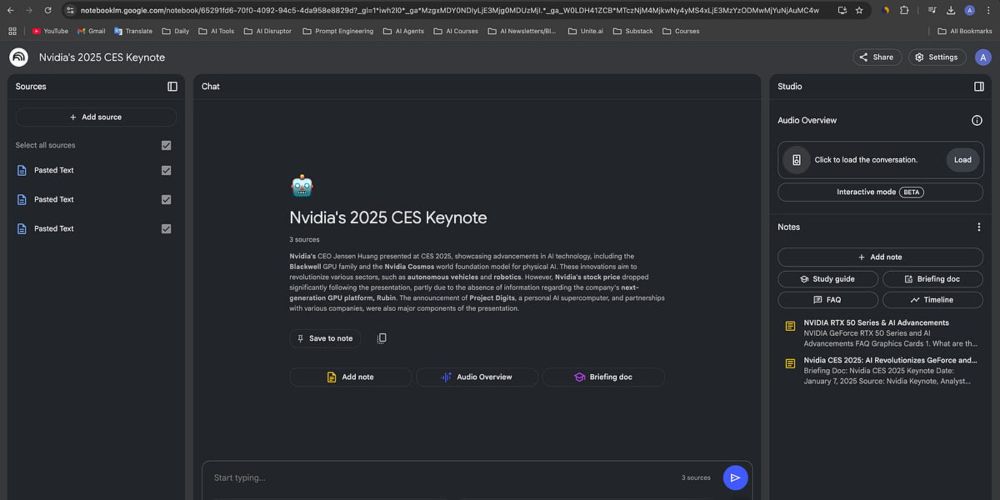

When you feed a keynote transcript into NotebookLM, you're creating a base layer of knowledge that lets you verify claims, spot patterns, and catch details.

This becomes your ground truth - especially important when everyone's racing to interpret what was said.
