Join us: http://allenai.org/careers

Get our newsletter: https://share.hsforms.com/1uJkWs5aDRHWhiky3aHooIg3ioxm

Learn more → buff.ly/6xLHLk6

Learn more → buff.ly/8gprRye

Learn more → buff.ly/76UxsX9

Open, fast to adapt + deploy, and industry-leading on key benchmarks and in real-world applications for our partners.

Learn more → buff.ly/L8PKLTf

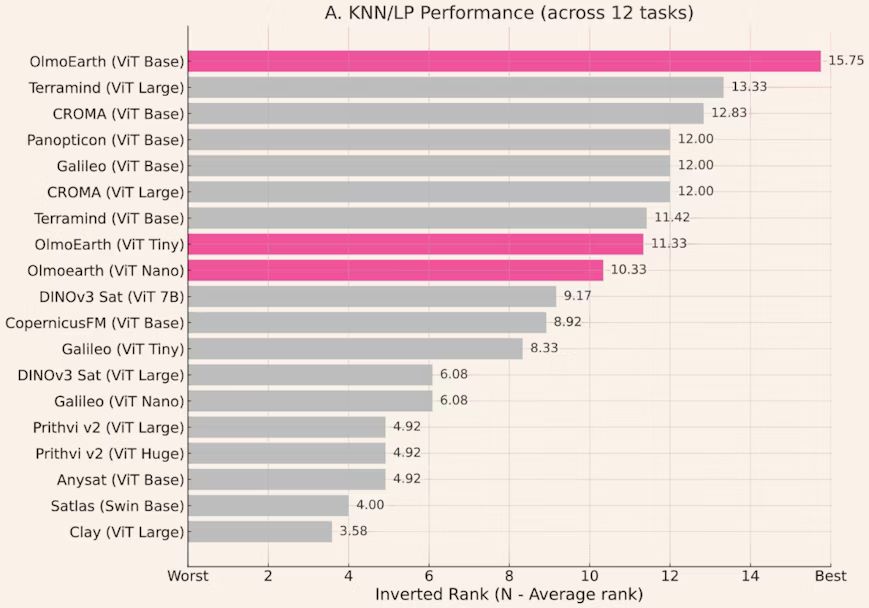

OlmoEarth delivers intelligence for anything from aiding restoration efforts to protecting natural resources & communities.

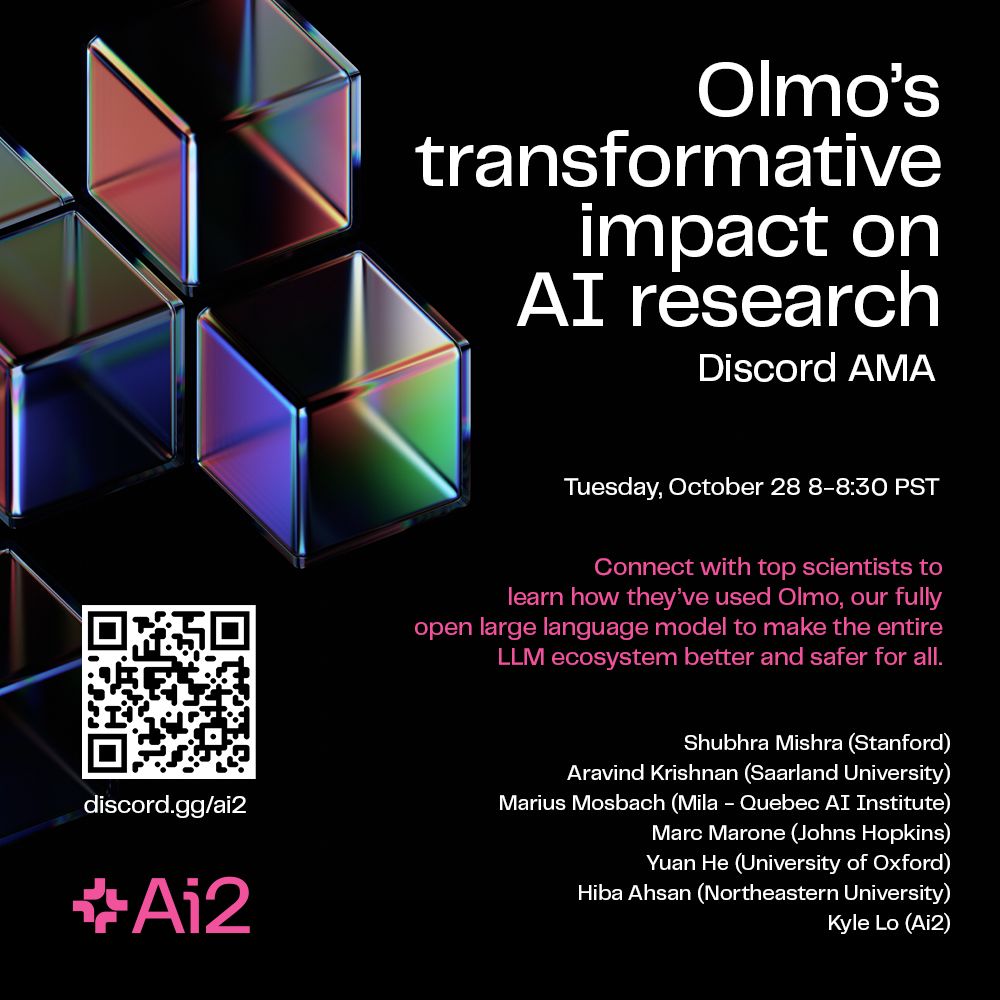

AMA on Discord: Tues, Oct 28 @ 8:00 AM PT with some of the researchers behind these studies + an Ai2 Olmo teammate. Join: buff.ly/zIW8KFL

→ buff.ly/IM4VZcK

→ buff.ly/qtlKMd4

→ buff.ly/rWE5xGP

→ buff.ly/HkChr4Q

→ buff.ly/FFrKOTn

Unlearning = removing a fact from a model without retraining everything or breaking other skills. Using Olmo + our open Dolma corpus, researchers found the more often a fact appears in training, the harder it is to erase.

→ buff.ly/81wSpzp

AMA on Discord: Tues, Oct 28 @ 8:00 AM PT with some of the researchers behind these studies + an Ai2 Olmo teammate. Join: discord.gg/ai2