The v3 model is an MoE with 37B active parameters (out of 671B total). Let's compare its cost to that of a 34B dense model. 🧵

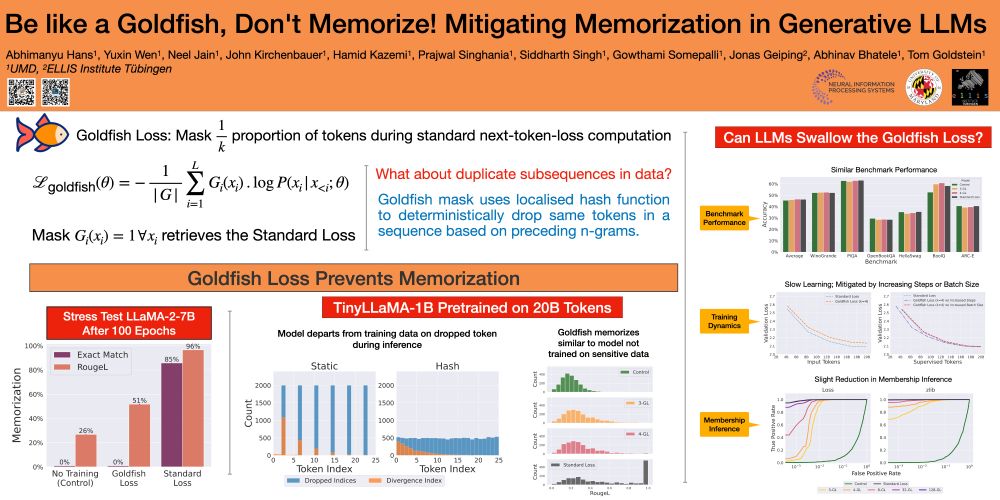
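A rough back-of-envelope sketch of the comparison, under assumptions that are not from the thread: forward-pass compute is approximated as ~2 FLOPs per *active* parameter per token (attention FLOPs, which grow with context length, are ignored), and weights are stored in 16-bit precision (2 bytes per parameter). The helper names below are hypothetical and for illustration only.

```python
# Back-of-envelope cost comparison: MoE (37B active / 671B total) vs 34B dense.
# ASSUMPTIONS (not from the thread): forward pass ~= 2 FLOPs per active
# parameter per token (attention FLOPs ignored); weights held in 16-bit.

BYTES_PER_PARAM = 2  # assumed bf16/fp16 weights


def forward_flops_per_token(active_params: float) -> float:
    """Approximate forward FLOPs per token: one multiply + one add per active parameter."""
    return 2 * active_params


def weight_memory_gb(total_params: float) -> float:
    """Memory needed just to hold all weights, in GB (1e9 bytes)."""
    return total_params * BYTES_PER_PARAM / 1e9


moe_active, moe_total = 37e9, 671e9
dense = 34e9

moe_flops = forward_flops_per_token(moe_active)
dense_flops = forward_flops_per_token(dense)

print(f"MoE   forward GFLOPs/token: {moe_flops / 1e9:.0f}")
print(f"Dense forward GFLOPs/token: {dense_flops / 1e9:.0f}")
print(f"compute ratio (MoE/dense):  {moe_flops / dense_flops:.2f}")
print(f"MoE   weight memory: {weight_memory_gb(moe_total):.0f} GB")
print(f"Dense weight memory: {weight_memory_gb(dense):.0f} GB")
```

Under these assumptions, per-token compute is within about 10% of the dense model (74 vs 68 GFLOPs), but every expert still has to sit in memory, so the MoE needs roughly 20x the weight storage (about 1342 GB vs 68 GB).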

are you concerned your LLM might regurgitate exact training data to your users?

join me and my co-authors at #NeurIPS2024 on wed's 1st poster session & learn how goldfish loss can help you.

eager to meet friends from past and future!

p.s. hmu if you're hiring summer interns!