Website: https://agoyal0512.github.io

Thanks to my collaborators Xianyang Zhan, Yilun Chen, @ceshwar.bsky.social, and @kous2v.bsky.social for their help!

#NAACL2025

[7/7]

- SLMs are lighter and cheaper to deploy for real-time moderation

- They can be adapted to community-specific norms

- They’re more effective at triaging potentially harmful content

- The open-source nature provides more control and transparency

[6/7]

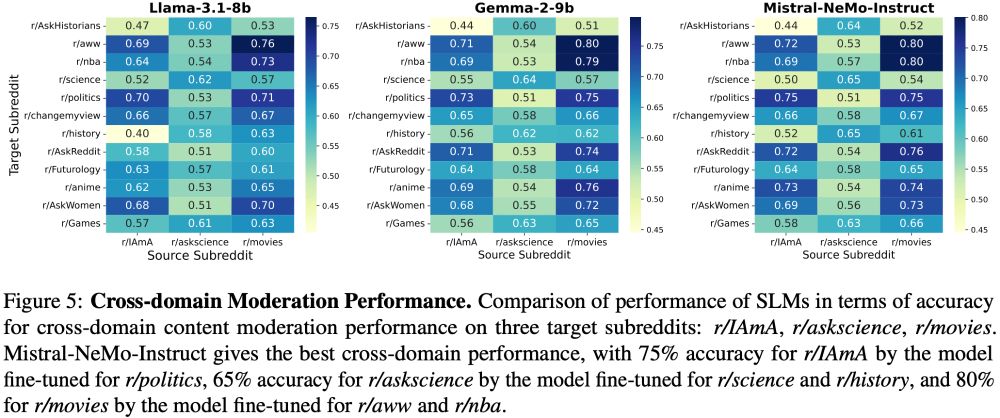

This has major implications for new communities and cross-platform moderation techniques.

[5/7]

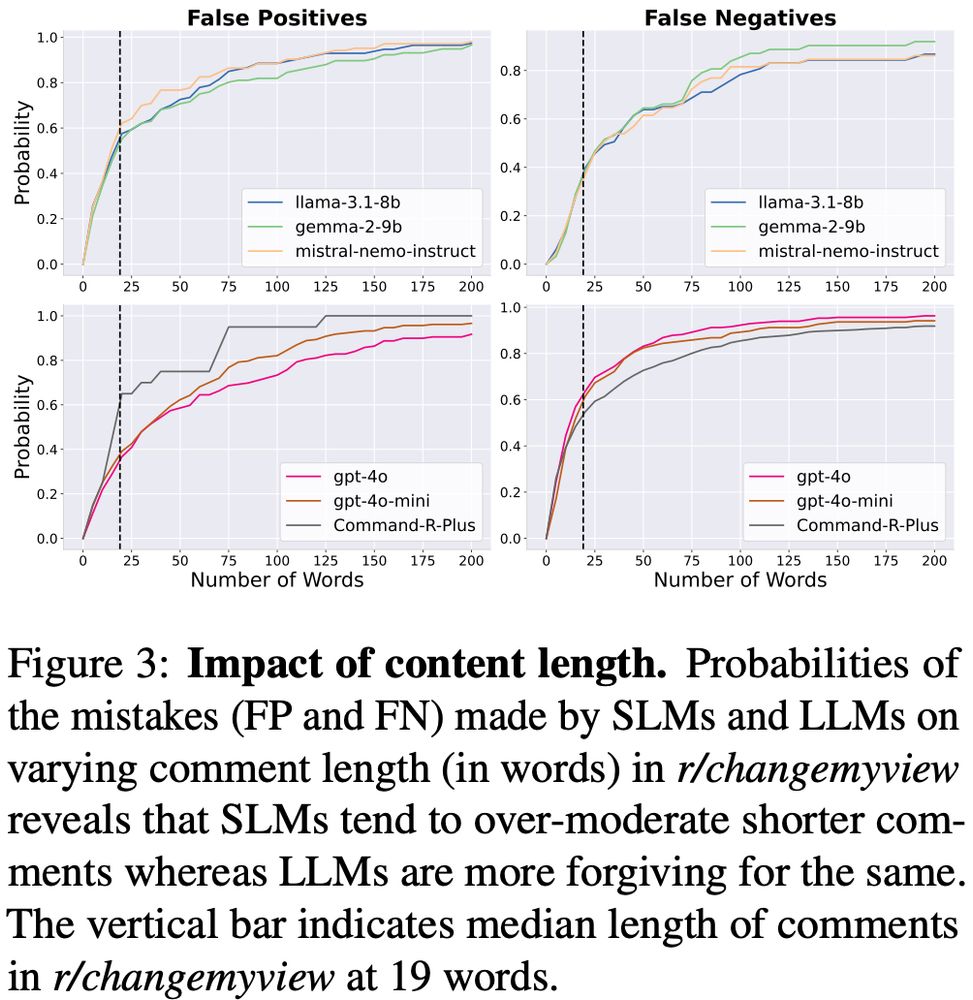

- SLMs excel at detecting rule violations in short comments

- LLMs tend to be more conservative about flagging content

- LLMs perform better with longer comments where context helps determine appropriateness

[4/7]

This trade-off is actually beneficial for platforms where catching harmful content is prioritized over occasional false positives.

[3/7]
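
To make the recall vs. false-positive trade-off concrete, here is a minimal sketch that computes accuracy, precision, and recall from confusion-matrix counts. The counts and the names `moderation_metrics`, `aggressive`, and `conservative` are hypothetical, made up purely for illustration; they are not results from the paper.

```python
def moderation_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, and recall from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    # Guard against division by zero when a model flags nothing
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


# Hypothetical imbalanced set: 100 rule-violating comments, 900 benign ones.
# "aggressive" flags more content; "conservative" flags less.
aggressive = moderation_metrics(tp=90, fp=40, fn=10, tn=860)
conservative = moderation_metrics(tp=60, fp=10, fn=40, tn=890)

for name, m in [("aggressive", aggressive), ("conservative", conservative)]:
    print(name, {k: round(v, 3) for k, v in m.items()})
```

With these toy counts both moderators reach the same accuracy, but the aggressive one catches 90 of the 100 violations at the cost of more false positives. This is why recall is worth reporting alongside accuracy on imbalanced data.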

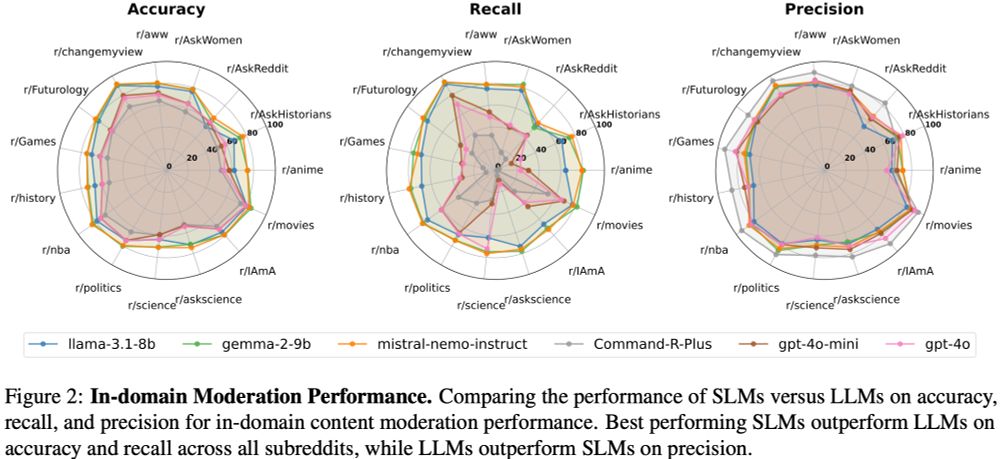

- 11.5% higher accuracy

- 25.7% higher recall

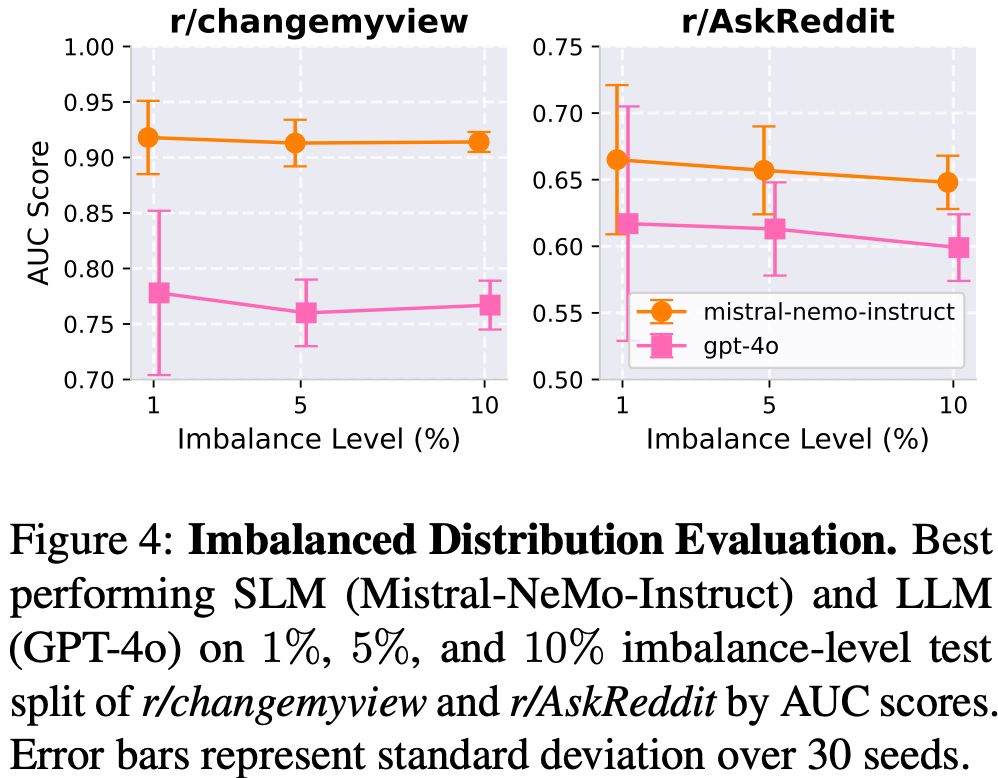

- Better performance on realistic imbalanced datasets

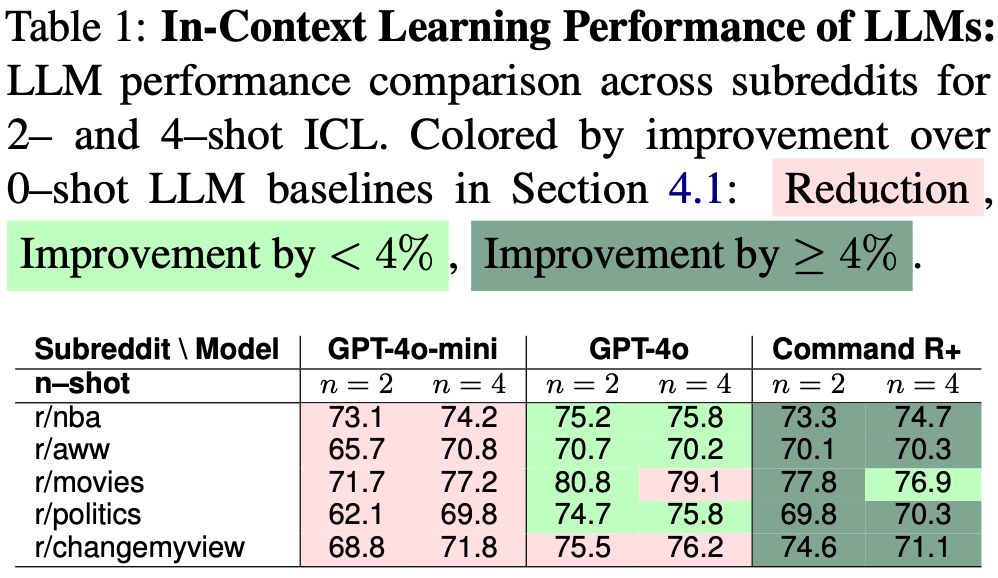

Even in-context learning (ICL) didn’t help LLMs catch up!

[2/7]
