A. Feder Cooper

@afedercooper.bsky.social

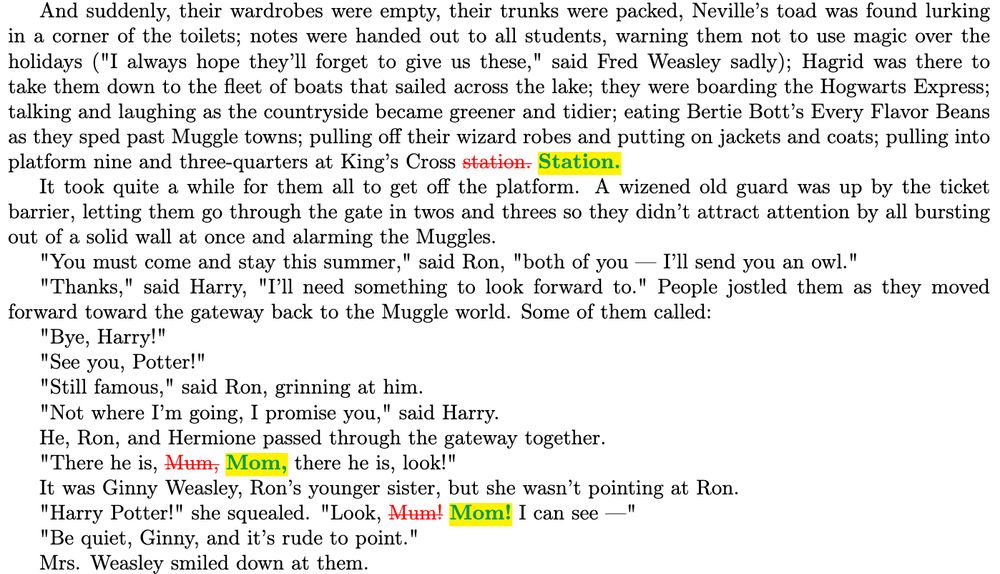

Using just the first line of chapter 1 (60 tokens), we can deterministically generate a near-exact copy of the entire ~300 page book (!!!).

(~300 book-length pages of basically no diff! Cosine similarity of 0.9999; greedy approx. of word-level LCS of 0.992)

4/8

July 14, 2025 at 2:37 PM

oh to be a seal in a tide pool, instead of a grouch on the internet

June 8, 2025 at 5:46 PM

We’ve been receiving a bunch of questions about a CFP for GenLaw 2025.

We wanted to let you know that we chose not to submit a workshop proposal this year (we need a break!!). We’ll be at ICML though and look forward to catching up there!

You can watch our prior videos!

March 9, 2025 at 8:33 PM

Excited to announce that our paper on training-data memorization and copyright has been accepted for presentation at ACM CSLaw '25!

The law review version is in press, forthcoming in early 2025.

arxiv.org/abs/2404.12590

December 4, 2024 at 12:31 AM
