Visiting Faculty, NVIDIA

Ph.D. from Berkeley, Postdoc MIT

https://homes.cs.washington.edu/~abhgupta

I like robots and reinforcement learning :)

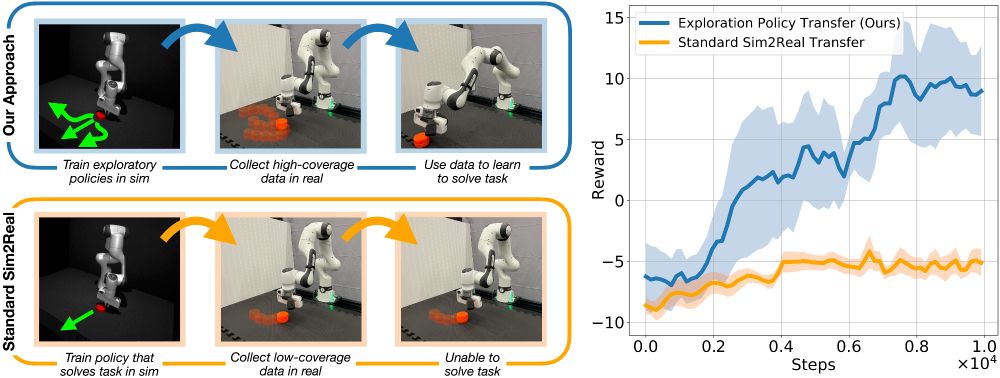

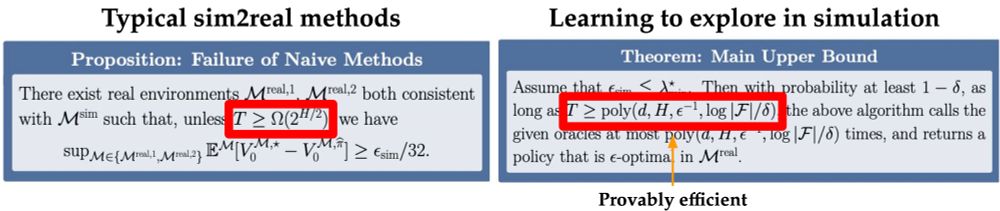

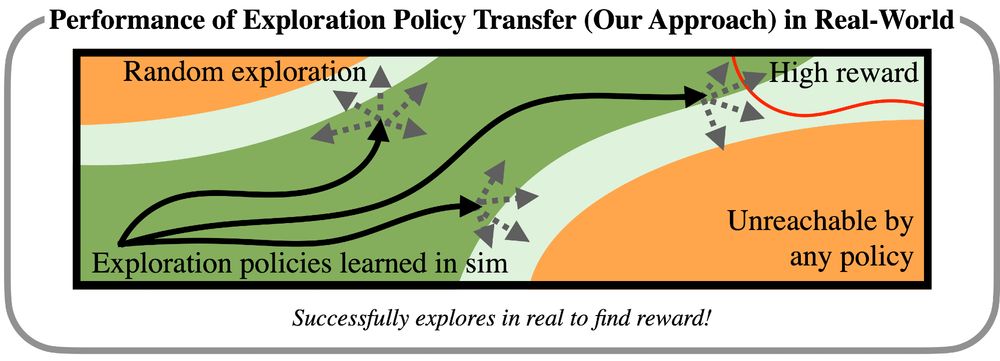

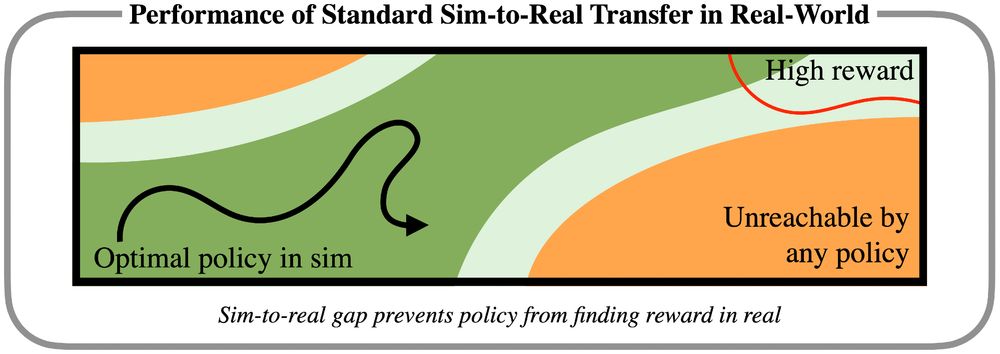

Paper: arxiv.org/abs/2412.01770

Website: casher-robot-learning.github.io/CASHER/

Fun project w/ @marcelto.bsky.social, @arhanjain.bsky.social, Carrie Yuan, Macha V, @ankile.bsky.social, Anthony S, Pulkit Agrawal :)