Aaron Roth

@aaroth.bsky.social

Professor at Penn, Amazon Scholar at AWS. Interested in machine learning, uncertainty quantification, game theory, privacy, fairness, and most of the intersections therein

Pinned

Aaron Roth

@aaroth.bsky.social

· Sep 19

Aligning an AI with human preferences might be hard. But there is more than one AI out there, and users can choose which to use. Can we get the benefits of a fully aligned AI without solving the alignment problem? In a new paper we study a setting in which the answer is yes.

Did your fairness/privacy/CS&Law/etc paper just get rejected from ITCS? Oh FORC! Submit tomorrow and join us at Harvard this summer.

Foundations of Responsible Computing (FORC) is a super exciting new conference focused on the intersection of mathematical research and society. It's also a fantastic and vibrant community.

Check out the CfP, with two deadlines. Also follow the new BSky account @forcconf.bsky.social !

📢 In case you missed it: the first-cycle deadline for FORC 2026 is *tomorrow*, November 11. Submit your best work on mathematical research in computation and society, writ large.

Too soon? We'll also have a second-cycle deadline on February 17, 2026.

CfP: responsiblecomputing.org/forc-2026-ca...

November 10, 2025 at 6:12 PM

Reposted by Aaron Roth

Foundations of Responsible Computing (FORC) is a super exciting new conference focused on the intersection of mathematical research and society. It's also a fantastic and vibrant community.

Check out the CfP, with two deadlines. Also follow the new BSky account @forcconf.bsky.social !

📢 In case you missed it: the first-cycle deadline for FORC 2026 is *tomorrow*, November 11. Submit your best work on mathematical research in computation and society, writ large.

Too soon? We'll also have a second-cycle deadline on February 17, 2026.

CfP: responsiblecomputing.org/forc-2026-ca...

November 10, 2025 at 4:50 PM

The first of two @forcconf.bsky.social 2026 deadlines is tomorrow, Nov 11! I hope everyone is putting finishing polishes on their submissions. For everyone else who doesn't want to miss out on the fun at Harvard this summer, there is another deadline in Feb. responsiblecomputing.org/forc-2026-ca...

FORC 2026: Call for Papers

The 7th annual Symposium on Foundations of Responsible Computing (FORC) will be held on June 3-5, 2026 at Harvard University. Brief summary for those who are familiar with past editions (prior to 2…

responsiblecomputing.org

November 10, 2025 at 2:56 PM

If you work at the intersection of CS and economics (or think your work is of interest to those who do!) consider submitting to the ESIF Economics and AI+ML meeting this summer at Cornell: www.econometricsociety.org/regional-act...

2026 ESIF Economics and AI+ML Meeting - The Econometric Society

2026 ESIF Economics and AI+ML Meeting (ESIF-AIML2026) June 16-17, 2026 Cornell University Department...

www.econometricsociety.org

November 8, 2025 at 10:37 PM

Reposted by Aaron Roth

A few weeks ago everyone was super hype about Nano Banana. Meanwhile, I ask it to do super basic things and it fails. What am I doing wrong??

(why would I want a collage of these amazing researchers? Stay tuned CC @let-all.com 👀)

More fails in the transcript: gemini.google.com/share/5cc80f...

November 7, 2025 at 3:52 PM

Reposted by Aaron Roth

Announcing the 7th Learning Theory Alliance mentoring workshop on November 20. Fully free & virtual!

Theme: Harnessing AI for Research, Learning, and Communicating

Ft @aaroth.bsky.social @andrejristeski.bsky.social @profericwong.bsky.social @ktalwar.bsky.social & more

November 7, 2025 at 4:34 PM

I've been enjoying learning about linear regression. This is a really cool machine learning technique with some really elegant theory --- someone should have taught me about this earlier!

November 4, 2025 at 11:33 PM

How should you use forecasts f:X->R^d to make decisions? It depends what properties they have. If they are fully calibrated (E[y | f(x) = p] = p), then you should be maximally aggressive and act as if they are correct --- i.e. play argmax_a E_{o ~ f(x)}[u(a,o)]. On the other hand

October 30, 2025 at 7:02 PM
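A minimal sketch of the fully calibrated case in the post above, assuming a finite outcome set so that the forecast f(x) can be read as a probability vector over outcomes. The names `best_response`, `forecast`, and `utility`, and the umbrella example, are illustrative and not from the post.

```python
from typing import Sequence


def best_response(forecast: Sequence[float],
                  utility: Sequence[Sequence[float]]) -> int:
    """Return the index of the action a maximizing E_{o ~ forecast}[u(a, o)].

    forecast[o] is the predicted probability of outcome o (assumed to sum to 1).
    utility[a][o] is the payoff of action a when outcome o occurs.
    """
    expected = [
        sum(p * u for p, u in zip(forecast, row))  # expected utility of one action
        for row in utility
    ]
    return max(range(len(expected)), key=expected.__getitem__)


# Hypothetical utility table: outcomes are (rain, dry).
utility = [
    [1.0, 0.6],  # action 0: carry an umbrella
    [0.0, 1.0],  # action 1: leave it at home
]

rainy_choice = best_response([0.7, 0.3], utility)
dry_choice = best_response([0.1, 0.9], utility)
```

Acting this way treats the forecast as if it were correct, which is the "maximally aggressive" rule the post recommends only when the forecast is fully calibrated.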

If you are around Berkeley today stop by Evans 1011 for a talk: events.berkeley.edu/stat/event/3...

Neyman Seminar - Aaron Roth - Agreement and Alignment for Human-AI Collaboration

Speaker: Aaron Roth (UPenn) Title: Agreement and Alignment for Human-AI Collaboration Abstract: As AI models become increasingly ...

events.berkeley.edu

October 29, 2025 at 2:45 PM

Reposted by Aaron Roth

Congrats to @epsilonrational.bsky.social @ncollina.bsky.social @aaroth.bsky.social on being featured in quanta!

(Also do check out the paper, also involving Sampath Kannan and me that the piece is based on here: arxiv.org/abs/2409.03956)

October 22, 2025 at 8:43 PM

Reposted by Aaron Roth

And now might be a good time to mention, I’m on the faculty job market this year! I do work in human-AI collusion, collaboration and competition, with an eye towards building foundations for trustworthy AI. Check out more info on my website here! Nataliecollina.com

October 22, 2025 at 3:56 PM

(And Natalie is on the job market..)

Our paper on algorithmic collusion was featured in a Quanta article! www.quantamagazine.org/the-game-the...

The Game Theory of How Algorithms Can Drive Up Prices | Quanta Magazine

Recent findings reveal that even simple pricing algorithms can make things more expensive.

www.quantamagazine.org

October 22, 2025 at 3:49 PM

If you are at Johns Hopkins tomorrow stop by --- also on Zoom: hub.jhu.edu/events/2025/...

CS Distinguished Speaker Seminar Series: Agreement and Alignment for Human-AI Collaboration

Aaron Roth, a professor of computer and cognitive science in the Department of Computer and Information Science at the University of Pennsylvania, will give a talk titled "Agreement and Alignment for ...

hub.jhu.edu

October 22, 2025 at 11:45 AM

An interesting debate between Emily Bender and Sebastien Bubeck: www.youtube.com/watch?v=YtIQ... --- Emily's thesis is roughly summarized as: "LLMs extrude plausible sounding text, and the illusion of understanding comes entirely from how the listener's human mind interprets language."

CHM Live | The Great Chatbot Debate: Do LLMs Really Understand?

YouTube video by Computer History Museum

www.youtube.com

October 21, 2025 at 3:33 PM

Reposted by Aaron Roth

Announcing (w @adamsmith.xyz @thejonullman.bsky.social) the 2025 edition of the Foundations of Responsible Computing Job Market Profiles!

Check out 40 job market candidates in mathematical research in computation and society writ large!

Link:

drive.google.com/file/d/1zvsr...

October 20, 2025 at 12:00 PM

Reposted by Aaron Roth

Join us on October 23 for our next Gerald M. Masson Distinguished Lecture, featuring @upenn.edu’s @aaroth.bsky.social! Learn more here: www.cs.jhu.edu/event/gerald...

October 14, 2025 at 6:50 PM

The FORC 2026 call for papers is out! responsiblecomputing.org/forc-2026-ca... Two reviewing cycles with two deadlines: Nov 11 and Feb 17. If you haven't been, FORC is a great venue for theoretical work in "responsible AI" --- fairness, privacy, social choice, CS&Law, explainability, etc.

FORC 2026: Call for Papers

The 7th annual Symposium on Foundations of Responsible Computing (FORC) will be held on June 3-5, 2026 at Harvard University. Brief summary for those who are familiar with past editions (prior to 2…

responsiblecomputing.org

October 7, 2025 at 12:02 PM

Reposted by Aaron Roth

One more thought: AI tools are a very useful research accelerator for an expert, and I plan to use them whenever I can. But at the moment it is very easy to be led down false paths if you let them get ahead of yourself and lure you too far from your expertise.

October 4, 2025 at 7:04 PM

To contribute to common knowledge :-) I too have been integrating AI tools closely into my research. This paper arxiv.org/abs/2507.09683 with @mkearnsphilly.bsky.social and Emily was my first experiment with this, and it was just accepted to SODA. I'll describe the process in thread.

Networked Information Aggregation via Machine Learning

We study a distributed learning problem in which learning agents are embedded in a directed acyclic graph (DAG). There is a fixed and arbitrary distribution over feature/label pairs, and each agent or...

arxiv.org

October 4, 2025 at 6:47 PM

Reposted by Aaron Roth

Appearing in SODA 2026! Last year we had a 3-page SODA paper, this one is 107 pages. Next time I’m thinking we swing way back the other way and just submit a twitter/bluesky thread

Suppose you and I both have different features about the same instance. Maybe I have CT scans and you have physician notes. We'd like to collaborate to make predictions that are more accurate than possible from either feature set alone, while only having to train on our own data.

October 4, 2025 at 3:05 AM

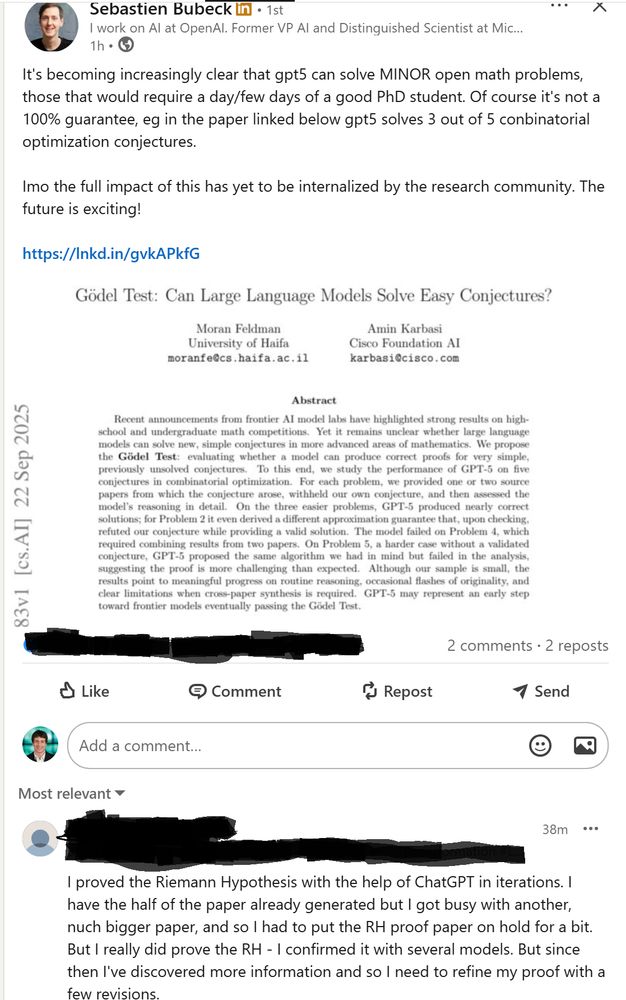

The opportunities and risks of the entry of LLMs into mathematical research in one screenshot. I think it is clear that LLMs will make trained researchers more effective. But they will also lead to a flood of bad/wrong papers, and I'm not sure we have the tools to deal with this.

September 24, 2025 at 8:00 PM

Aligning an AI with human preferences might be hard. But there is more than one AI out there, and users can choose which to use. Can we get the benefits of a fully aligned AI without solving the alignment problem? In a new paper we study a setting in which the answer is yes.

September 19, 2025 at 12:14 PM

A great initiative for the FORC community --- if you know a graduating student working on theoretical/mathematical aspects of responsible computing, send them this way.

📢Adam Smith, @gautamkamath.com, and I are putting together a list of job market candidates in Foundations of Responsible Computing! Last year's list was a great success so we're keeping it going!

If you want to be included, or nominate someone, see link in the replies!

September 16, 2025 at 7:32 PM

Reposted by Aaron Roth

📢Adam Smith, @gautamkamath.com, and I are putting together a list of job market candidates in Foundations of Responsible Computing! Last year's list was a great success so we're keeping it going!

If you want to be included, or nominate someone, see link in the replies!

September 15, 2025 at 12:41 PM
