Sharad Goel

@5harad.com

Professor of Public Policy at Harvard, co-director of @comppolicylab.bsky.social, applying a computational approach to public policy, including to issues in education, healthcare, and criminal justice. https://5harad.com

There are, of course, lots of important caveats to this work. For example, the results change if we move to a resource-constrained setting. But we hope our work illustrates the importance of looking at utility, not simply model miscalibration, in these debates.

December 4, 2024 at 12:57 PM

As a result, the overall clinical utility of using race in these models is often surprisingly small. It still might make sense to include race, but the benefits of doing so have probably been overstated.

December 4, 2024 at 12:57 PM

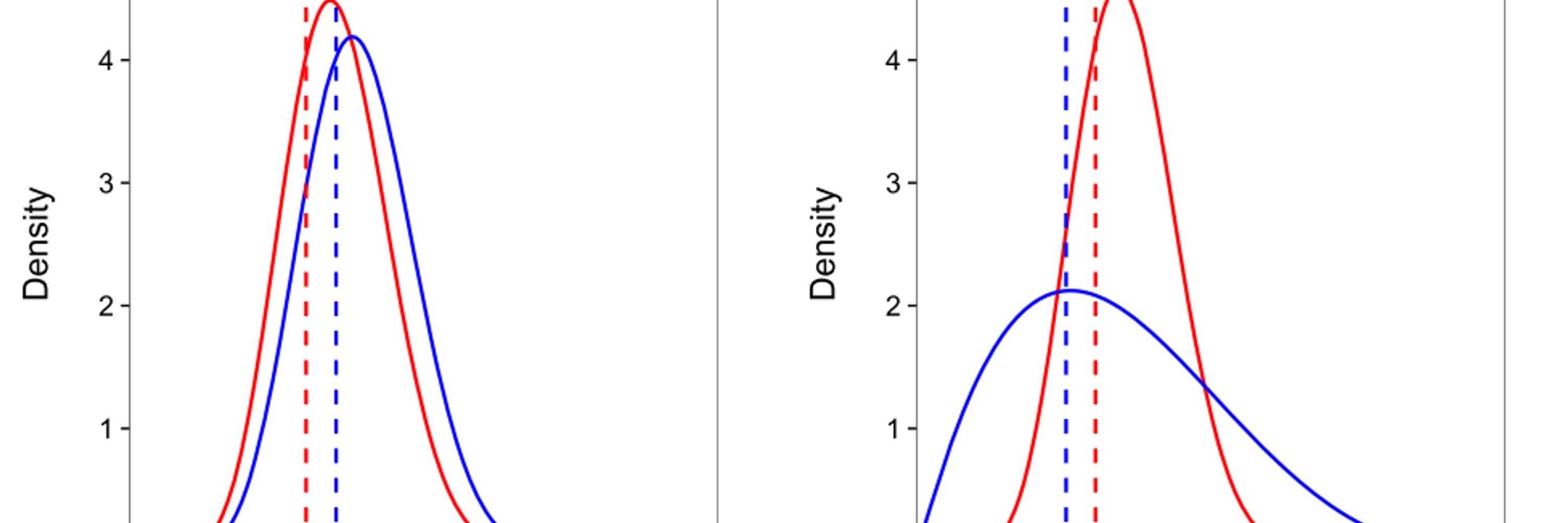

And because people for whom decisions flip are necessarily close to the decision boundary, their *utility* for intervening vs. not is comparable. (In the shared decision-making settings we consider, the utility is 0 at the boundary, i.e., the boundary is set to be the point of indifference.)

December 4, 2024 at 12:57 PM

But despite this miscalibration, *decisions* (e.g., to intervene in some way) based on race-aware vs. race-unaware risk estimates are largely the same, as very few individuals are close to the decision boundary.

December 4, 2024 at 12:57 PM

We find that race-unaware risk estimates are indeed often *miscalibrated*, systematically over- or underestimating risk for different groups. Such miscalibration is often cited as evidence that including race improves the quality of predictions for all groups.

December 4, 2024 at 12:57 PM

Our virtual tutor, called PingPong, is based on ChatGPT. It's designed to help students learn, not simply give them answers to homework problems.

We'll help customize PingPong to your course content.

Instructors can view de-identified student interactions.

PingPong is multilingual.

November 21, 2024 at 12:39 PM
