Get started with your favorite model here: github.com/facebookrese...

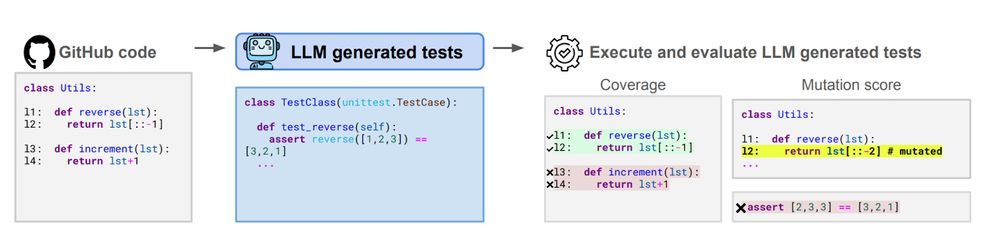

Homepage: testgeneval.github.io

Sample Explorer: testgeneval.github.io/demo.html

Dataset: huggingface.co/datasets/kja...

Code: github.com/facebookrese...

Preprint: arxiv.org/abs/2410.00752

Leaderboard: testgeneval.github.io/leaderboard....

> The major problems of our work are not so much technological as sociological in nature.

> Get the best people (cut out the deadwood), and make them happy. Turn them loose.

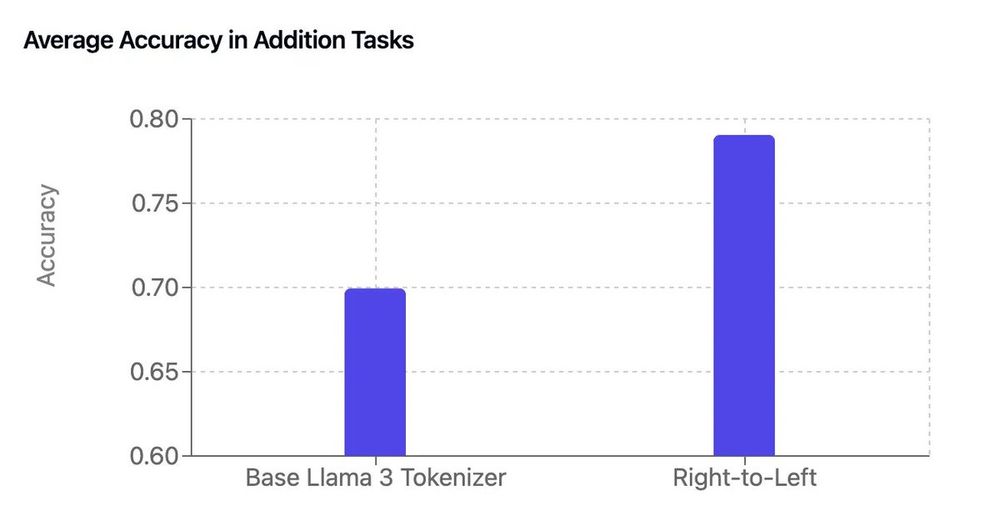

By adding a few lines of code to the base Llama 3 tokenizer, he got a free boost in arithmetic performance 😮

[thread]
