http://siddharthsrivastava.net

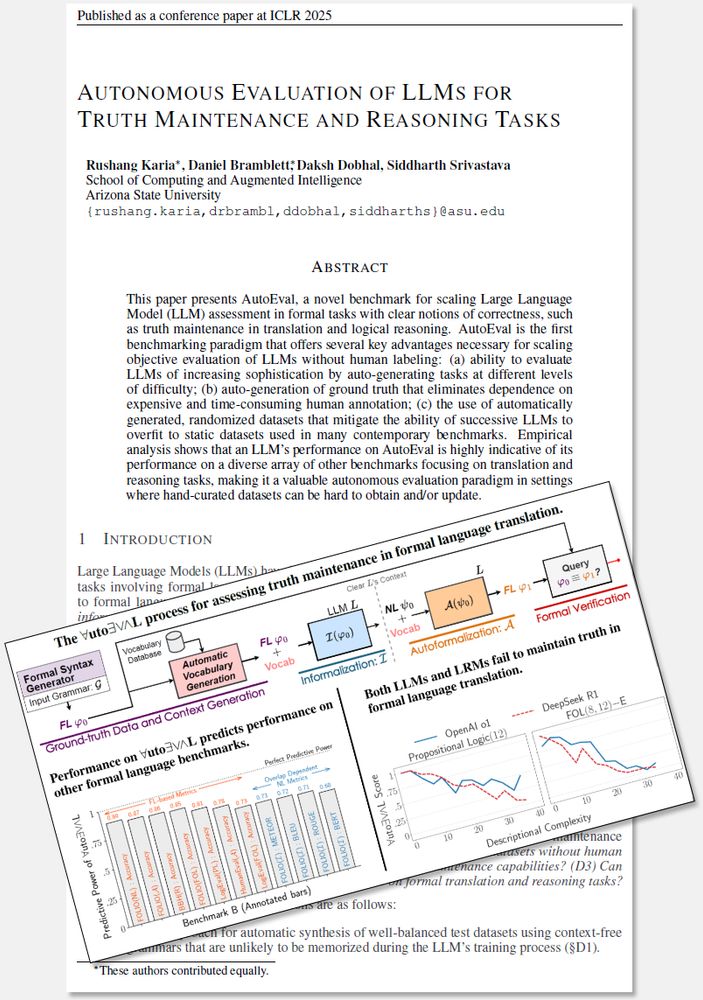

Our #ICLR ’25 work introduces a novel approach to assessing truth maintenance in formal language translation.

Joint w/ Rushang Karia, Daksh Dobhal, @sidsrivast.bsky.social.

(1/3)

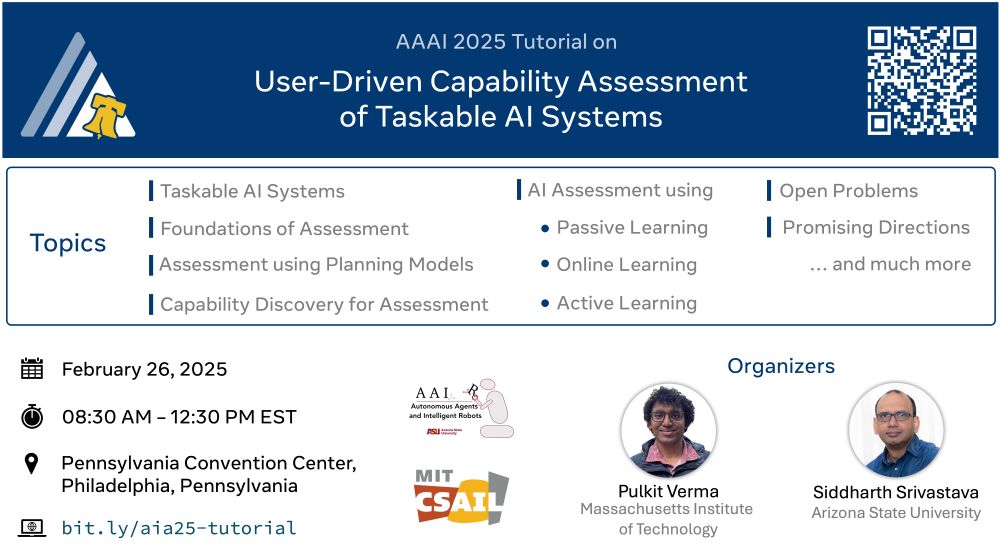

I’ll co-organize a tutorial with @sidsrivast.bsky.social on User-Driven Capability Assessment of Taskable AI Systems. The schedule is now live, so mark your calendars!

📅 26 February 2025

📍Room 115A, Pennsylvania Convention Center

🔗 bit.ly/aia25-tutorial

📅 26 February 2025

⏱️ 8:30 AM - 12:30 PM EST

📍 AAAI 2025, Philadelphia, USA

🔗 bit.ly/aia25-tutorial

(1/3)

@danielrbramblett.bsky.social will be presenting some of our work on user-aligned AI systems that operate reliably under partial observability. Consider stopping by during the Wednesday afternoon poster session (poster 6505)!

Our #NeurIPS ’24 work allows users to fuse high-level objectives with preferences and constraints based on the agent’s current belief about its environment.

Joint w/ @sidsrivast.bsky.social