- 80+ metrics in one unified interface

- Flexible input support

- Distributed evaluation with Slurm

- ESPnet compatible

Check out the details

wavlab.org/activities/2...

github.com/wavlab-speec...

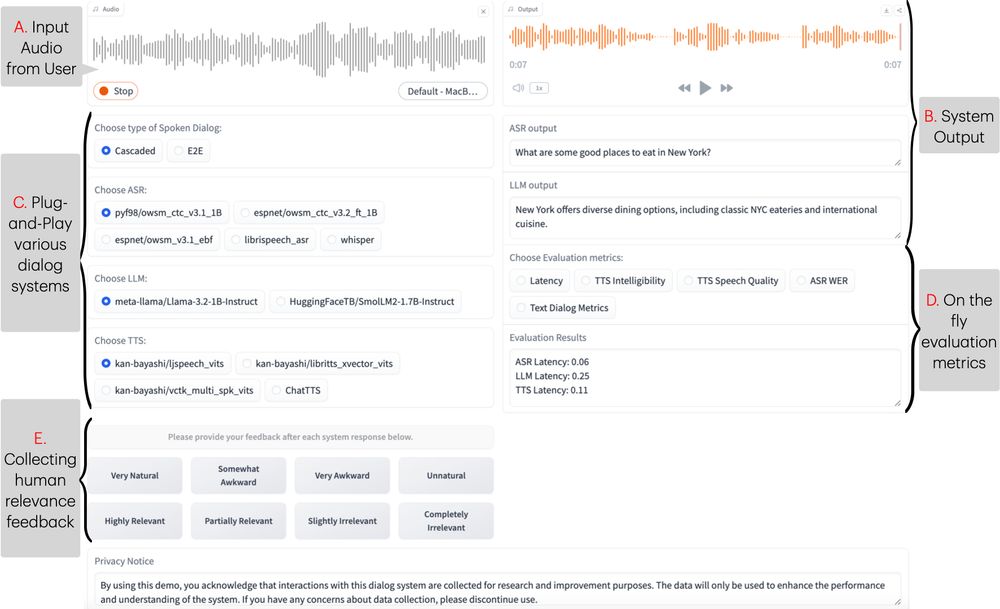

📜: arxiv.org/abs/2503.08533

Live Demo: huggingface.co/spaces/Siddh...

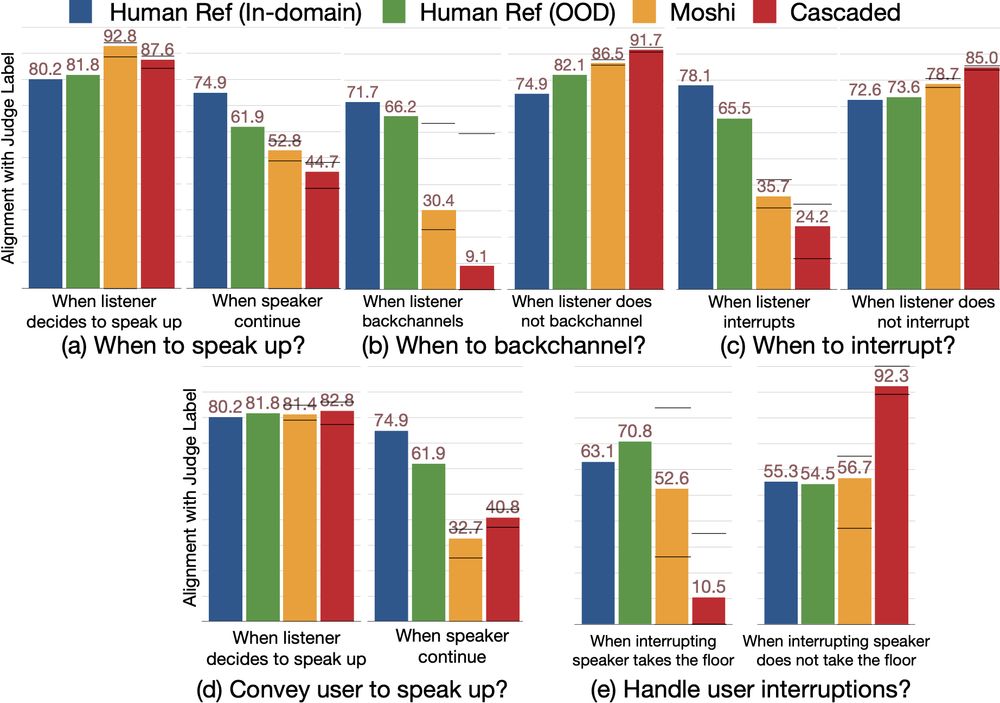

Can Audio Foundation Models like Moshi and GPT-4o truly engage in natural conversations? 🗣️🔊

We benchmark their turn-taking abilities and uncover major gaps in conversational AI. 🧵👇

📜: arxiv.org/abs/2503.01174

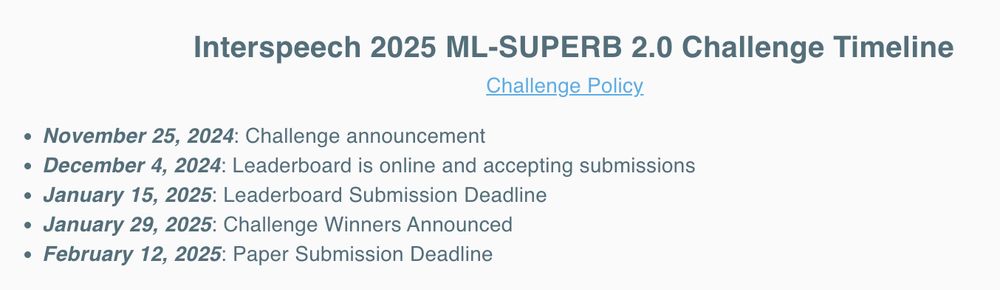

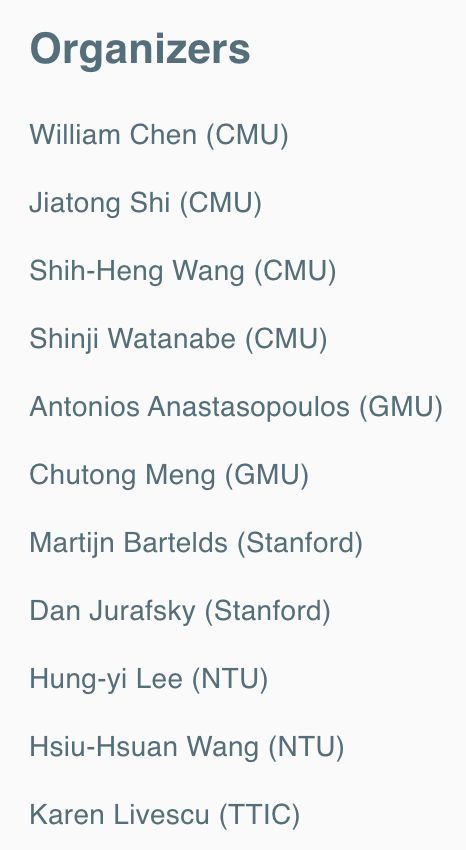

We are organizing a special session at #Interspeech2025 on: Interpretability in Audio & Speech Technology

Check out the special session website: sites.google.com/view/intersp...

Paper submission deadline 📆 12 February 2025

💻 multilingual.superbbenchmark.org

#Interspeech2025

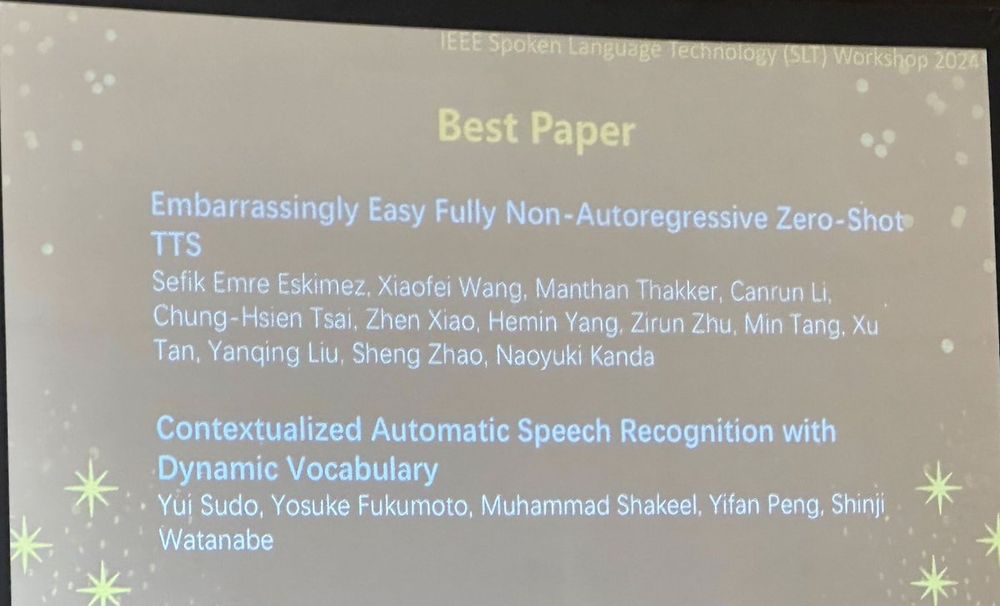

We got the best paper award at IEEE SLT'24! This work elegantly and straightforwardly solves contextual biasing issues with dynamic vocabulary arxiv.org/abs/2405.13344. Congrats, Yui, Yosuke, Shakeel, and Yifan!

I'm super happy!

We are running another "Multimodal Information Based Speech Processing (MISP)" Challenge at @interspeech.bsky.social

Participate!

Spread the word!

More info 👇

mispchallenge.github.io/mispchalleng...
