✈️ Mila - Quebec AI Institute | University of Copenhagen 🏰

#NLProc #ML #XAI

Recreational sufferer

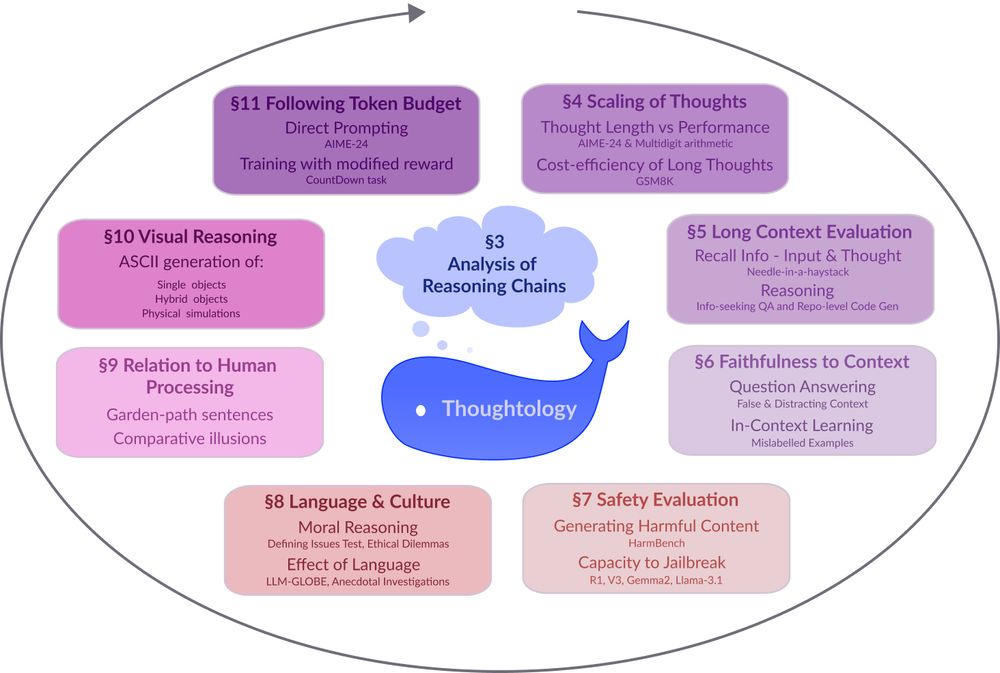

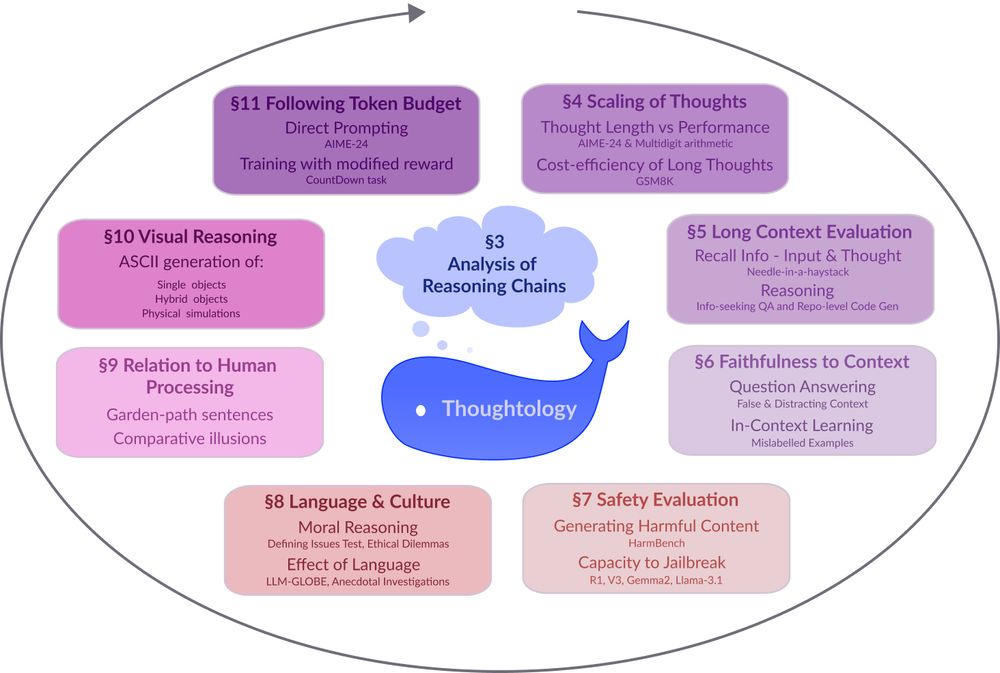

🔗: mcgill-nlp.github.io/thoughtology/

🌟 We quantify rumination; repetitive thoughts are associated with incorrect responses

🌟 We add 2 LRMs: gpt-oss and Qwen3. Both show a reasoning 'sweet spot'

See 📃 : openreview.net/forum?id=BZw...

w/ Michelle Yang, @sivareddyg.bsky.social , @msonderegger.bsky.social and @dallascard.bsky.social👇(1/12)

🔗 arxiv.org/abs/2504.07128

We encourage you to read the full paper for a more detailed discussion of our findings and hope that our insights encourage future work studying the reasoning behaviour of LLMs.

🔗: mcgill-nlp.github.io/thoughtology/

We release 🧙🏽♀️DRUID to facilitate studies of context usage in real-world scenarios.

arxiv.org/abs/2412.17031

w/ @saravera.bsky.social, H.Yu, @rnv.bsky.social, C.Lioma, M.Maistro, @apepa.bsky.social and @iaugenstein.bsky.social ⭐️
