The CfP for Ray Summit 2025 in San Francisco is open, and we'd love to have you submit a talk on anything and everything ML + AI + distributed computing!

First-time speakers are welcome 🫶

www.anyscale.com/blog/ray-sum...

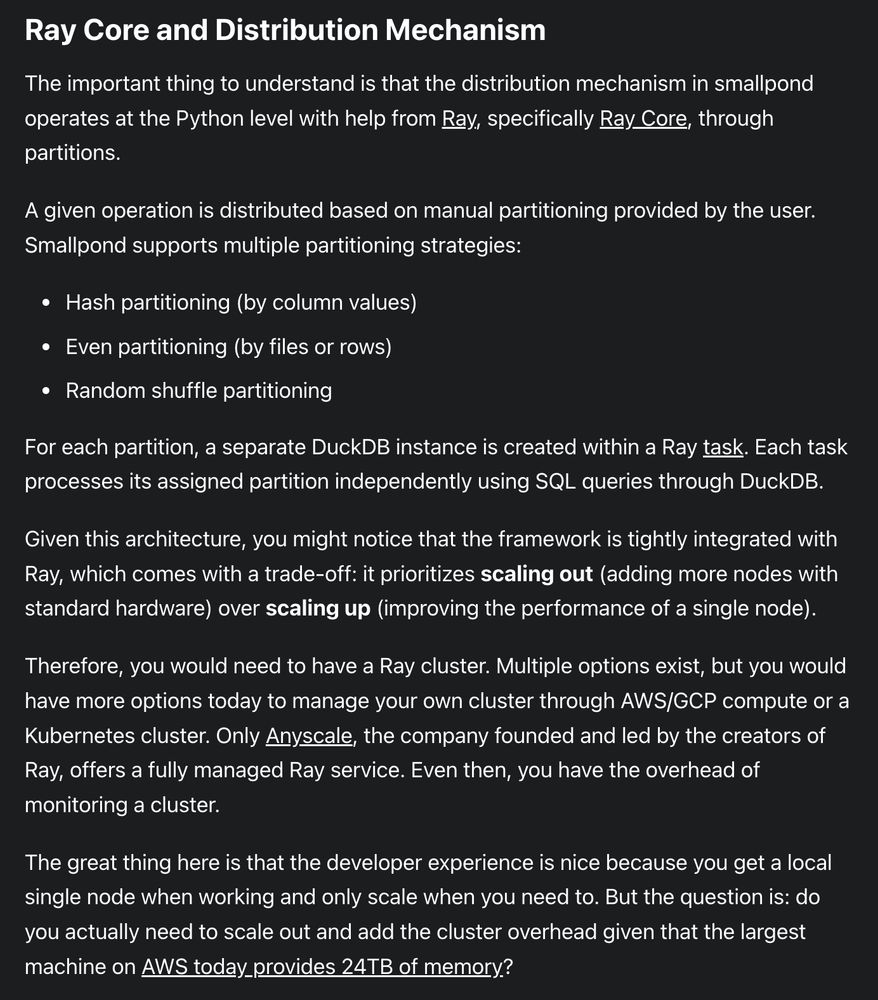

- Smallpond targets high-performance data processing.

- It provides a high-level dataframe API.

- It targets petabyte-level scaling.

The challenges around training data prep only grow when you include multimodal data.

aws.amazon.com/blogs/openso...