LLMs are collapsed: each individual output is good, but the distribution of their outputs is always the same. Thus: no training on their own output.

LLMs memorise too much. Humans cannot, so we have to find patterns and understand them.

www.nature.com/articles/s41...

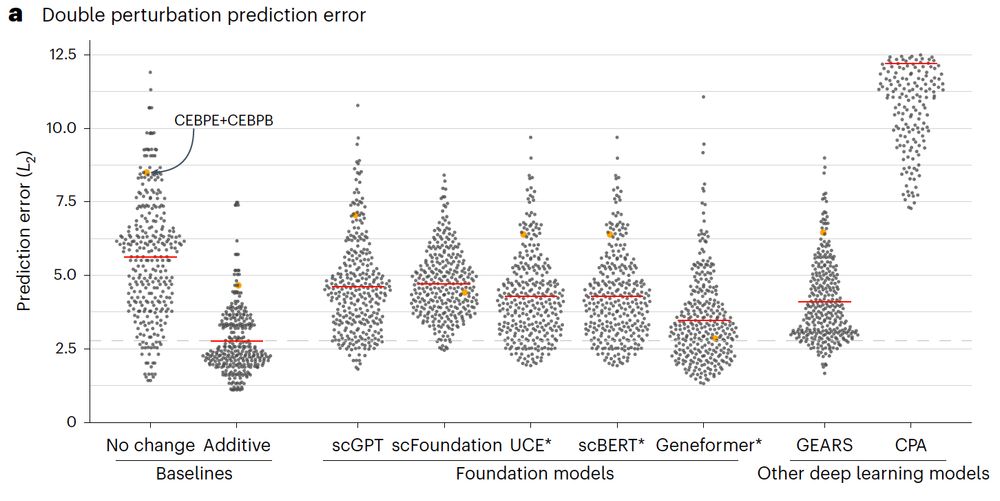

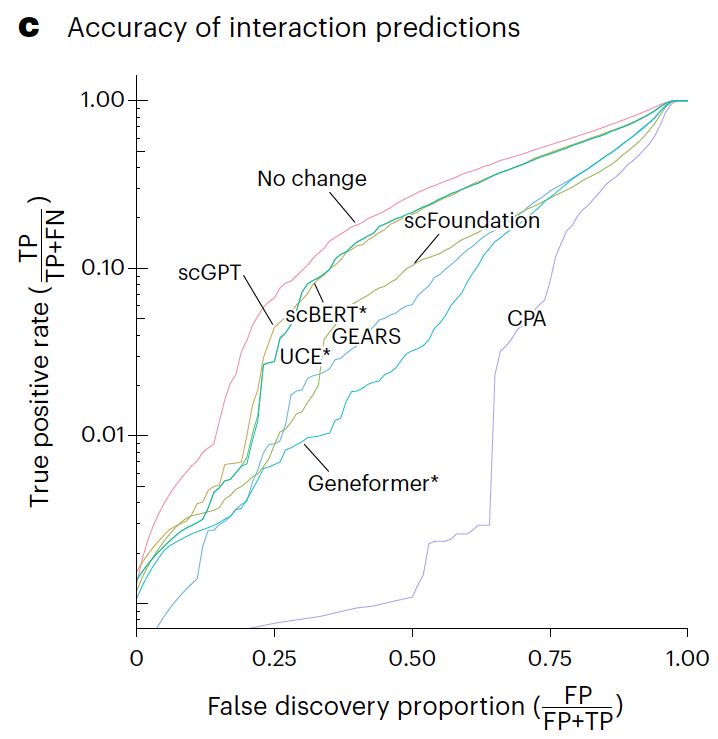

We show that none of the available* models outperform simple linear baselines. Since the original preprint, we added more methods, metrics, and prettier figures!

🧵

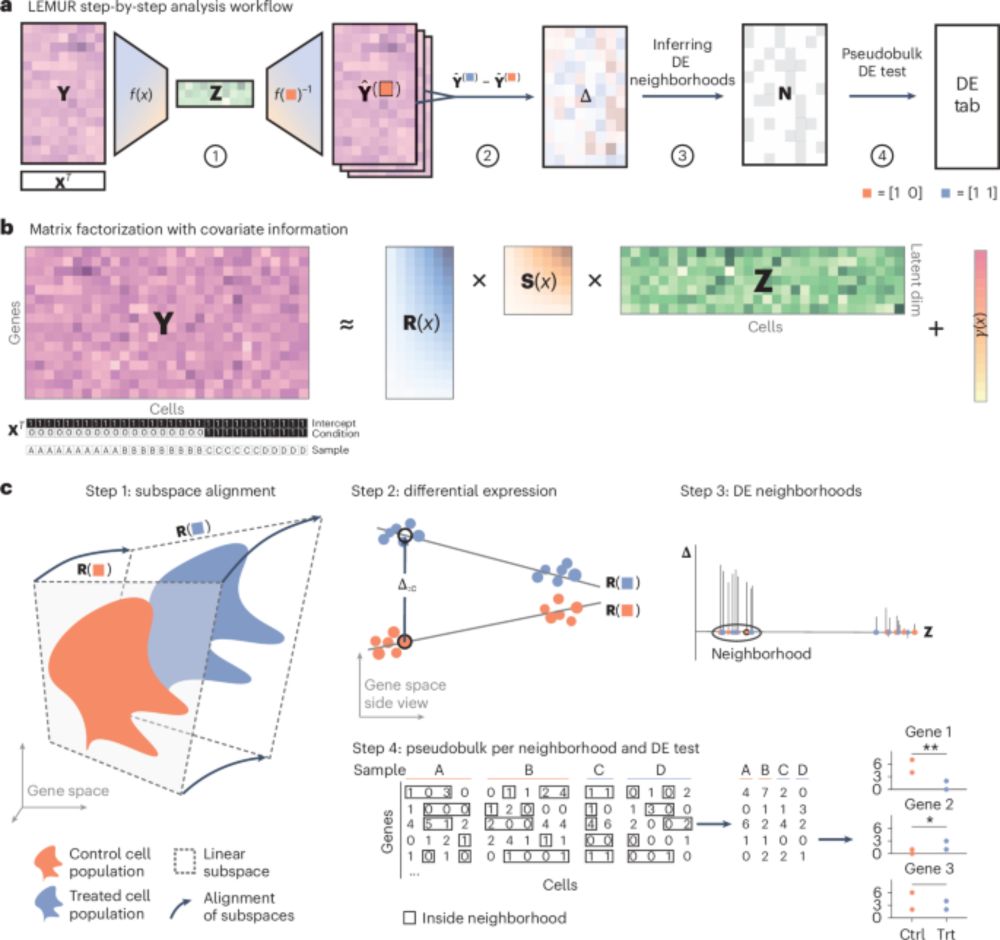

genomebiology.biomedcentral.com/articles/10....

At #CeMM, we’re thrilled to join the growing #BlueskyScience community.

Follow us to hear more about:

🔬 Breakthroughs from our labs

📚 Fresh publications

🎤 Events & symposia

🌟 Stories from our vibrant scientific community

Let’s explore science together! 🧬

doi.org/10.1038/s415...