https://preethac.github.io/

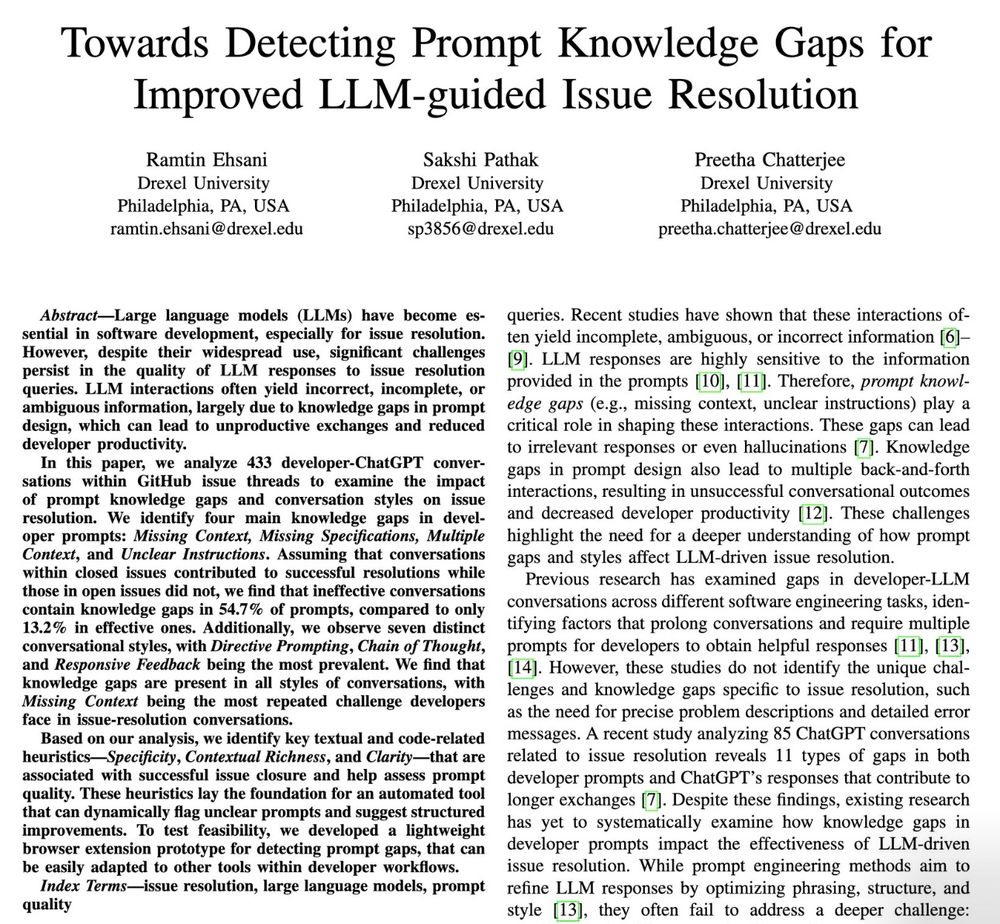

We propose a 3-layer knowledge injection framework that incrementally feeds LLMs with bug, repository, and project knowledge.

Preprint of our ASE '25 paper: arxiv.org/pdf/2506.24015

Our latest EMSE paper asks: when developers unknowingly share vulnerable code with LLMs, do these models proactively raise security red flags? 🧵

👉 Read the paper: arxiv.org/abs/2502.14202

Preprint: soar-lab.github.io//papers/MSR2...

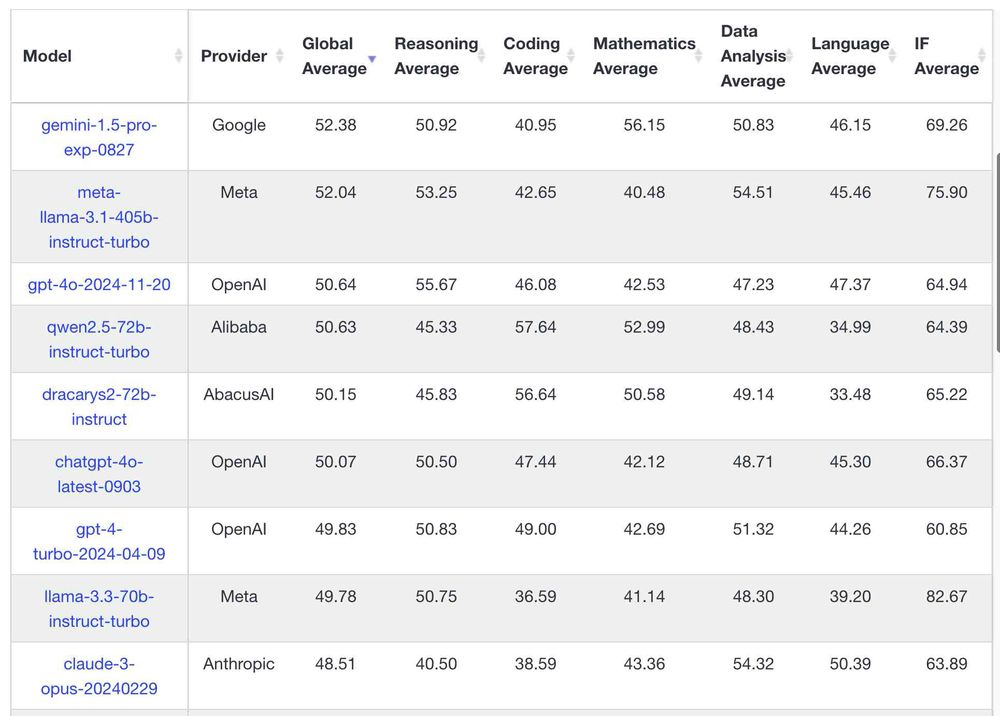

(The exact same laptop that could just about run a GPT-3 class model 20 months ago)

The new Llama 3.3 70B is a striking example of the huge efficiency gains we've seen in the last two years

simonwillison.net/2024/Dec/9/l...

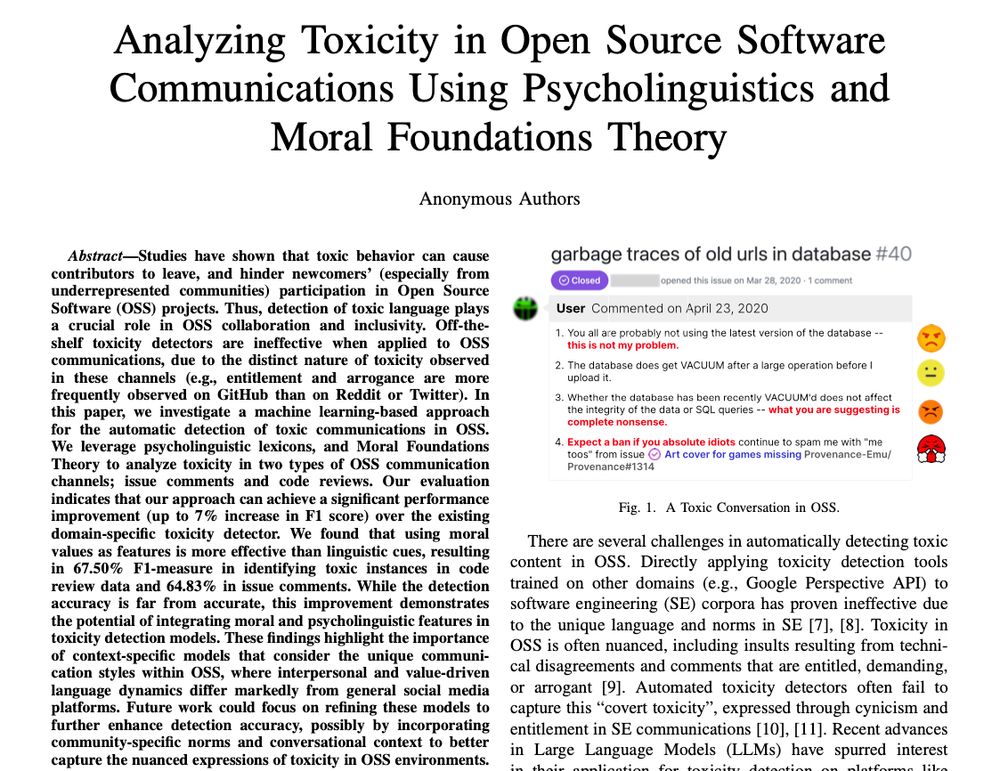

Our work shows promise in improving toxicity detection in OSS using moral values & psycholinguistic cues. Preprint coming soon.
