Now in npj Digital Medicine www.nature.com/articles/s41...

Also in Doctor Penguin!

Big thanks to Maria Bordukova, @raulrod.bsky.social, Papichaya Quengdaeng, Daniel Garger, @fschmich.bsky.social, Michael Menden, Helmholtz Munich, and Roche!

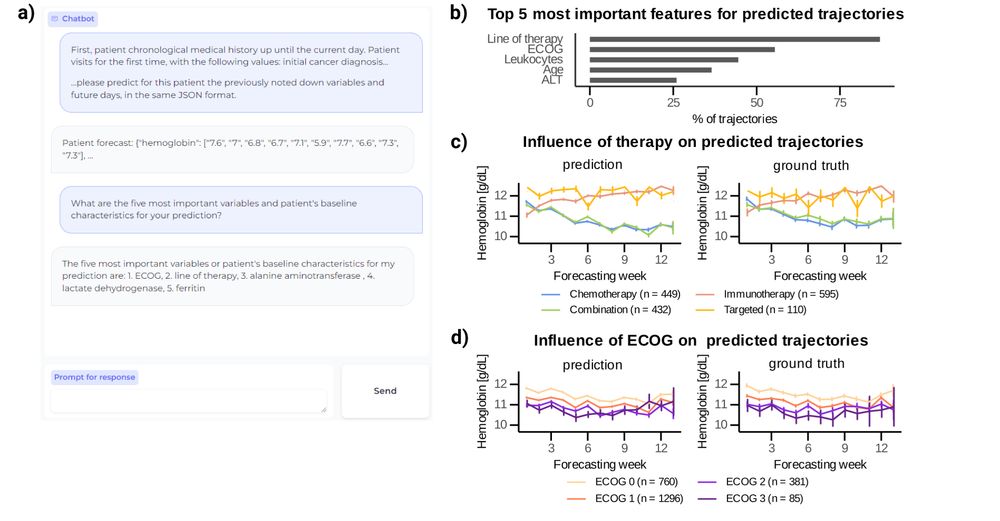

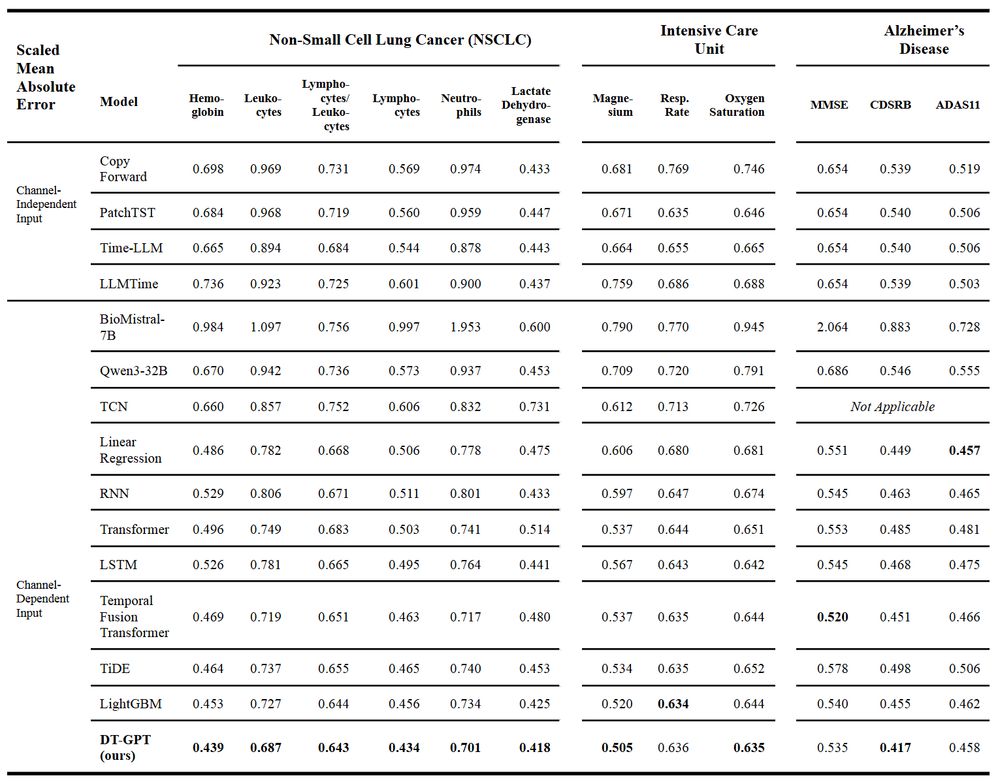

“Large Language Models forecast Patient Health Trajectories enabling Digital Twins”

#MedSky #MedAI #MLSky

Learn more on our HAI blog:

hai.stanford.edu/news/advanci...

🚀 Apply now for our two summer internships for 2025:

1️⃣ Multimodal - www.linkedin.com/jobs/view/40...

2️⃣ Preclinical - www.linkedin.com/jobs/view/40...

DM me if you have any questions or know anyone who would be interested.

go.bsky.app/PddA2uy

I've got room to include more, so please tag anyone you think I should add! 🧪🩺 🤖 🛟

“Large Language Models forecast Patient Health Trajectories enabling Digital Twins”

Reposted from X to have some content here :)
