Gift link

Executive Order 14110 (Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence) was revoked.

Paper: arxiv.org/abs/2412.17427

2. Is this dataset meant for training generative AI? 🤷♀️ but more likely for research and statistical analysis.

3. Is it OK to duplicate and distribute people’s data without giving them the agency to opt out? I’d argue no.

📊 1M public posts from Bluesky's firehose API

🔍 Includes text, metadata, and language predictions

🔬 Perfect to experiment with using ML for Bluesky 🤗

huggingface.co/datasets/blu...

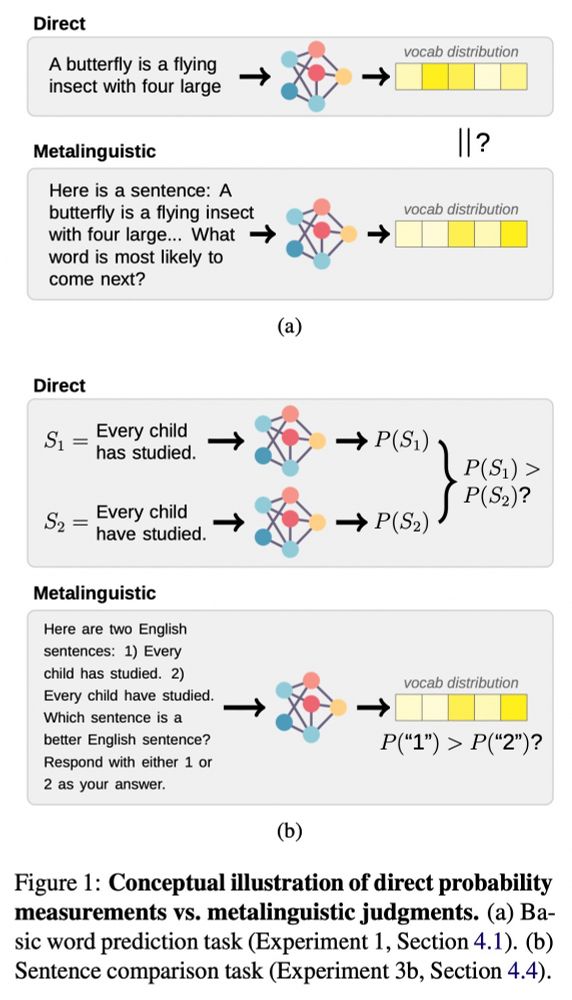

Here @jennhu.bsky.social provides an excellent illustration of how that approach fails even at the most basic level.

Paper: arxiv.org/abs/2305.13264

Original thread: twitter.com/_jennhu/stat...
