magnusross.github.io/posts/moms-m...

The best thing is…

You can deploy the Blackwell platform without approvals.

Just sign in, select the instance type, and start your deployment:

cloud.datacrunch.io?utm_source=b...

We are an infrastructure partner of Black Forest Labs for Kontext, a suite of generative flow matching models for text-to-image and image-to-image editing.

Learn more: datacrunch.io/managed-endp...

🇫🇮 This event will bring together 250 AI engineers, researchers, and founders under one roof in Helsinki.

🔗 You can still grab one of the last remaining seats: lu.ma/x5hhj79x

Check out our poster #205 on Sunday May 4th in Hall A-E if you are in Phuket. Finland's rising star @huangdaolang.bsky.social will be there to assist you :D

arxiv.org/abs/2410.07930

@fxbriol.bsky.social @samikaski.bsky.social

AI disruption is baked in.

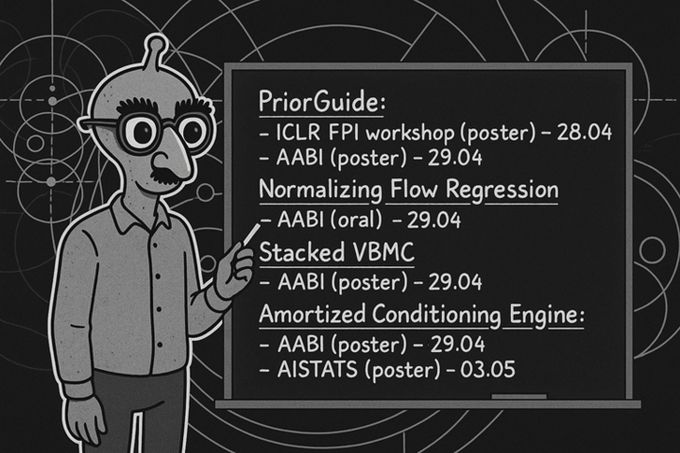

Go talk to my incredible PhD students @huangdaolang.bsky.social & @chengkunli.bsky.social + amazing collaborator Severi Rissanen.

@univhelsinkics.bsky.social FCAI

Normalizing Flow Regression (NFR) — an offline Bayesian inference method.

What if you could get a full posterior using *only* the evaluations you *already* have, maybe from optimization runs?

codeml-workshop.github.io/codeml2025/

- understanding things deeply, reading the actual source

- being willing to help other people

- status doesn’t matter, good ideas come from anywhere

endler.dev/2025/best-pr...

Cool stuff by Antonio and WavespeedAI team to get FLUX-dev inference (SOTA diffusion) in under a second on a B200.

datacrunch.io/blog/flux-on...

You judge the result...

(text continues 👇)

The data is also worth a look, as it shows how LM Arena results can be manipulated to be more pleasing to humans. t.co/rqAey9SMwh

github.com/datacrunch-r...

I didn't realize it inserts

"Co-Authored-By: Claude

I would have got away with it if it wasn't for that pesky Claude Code.

You'll find a comprehensive overview of the techniques, their benefits and implications, and our benchmarks.

datacrunch.io/blog/deepsee...

arxiv.org/abs/2501.18795.

Be among the first to gain instant access to 1x, 2x, 4x, and 8x B200 GPUs with our high-performance VMs.

Sign up and enjoy expert support with secure service where performance meets sustainability.

🔗 cloud.datacrunch.io

We thank their team for this independent evaluation, validating our approach to pushing the boundary of resource-efficient AI infrastructure ⬇️

arxiv.org/abs/2503.16672
