https://mingxue-xu.github.io/

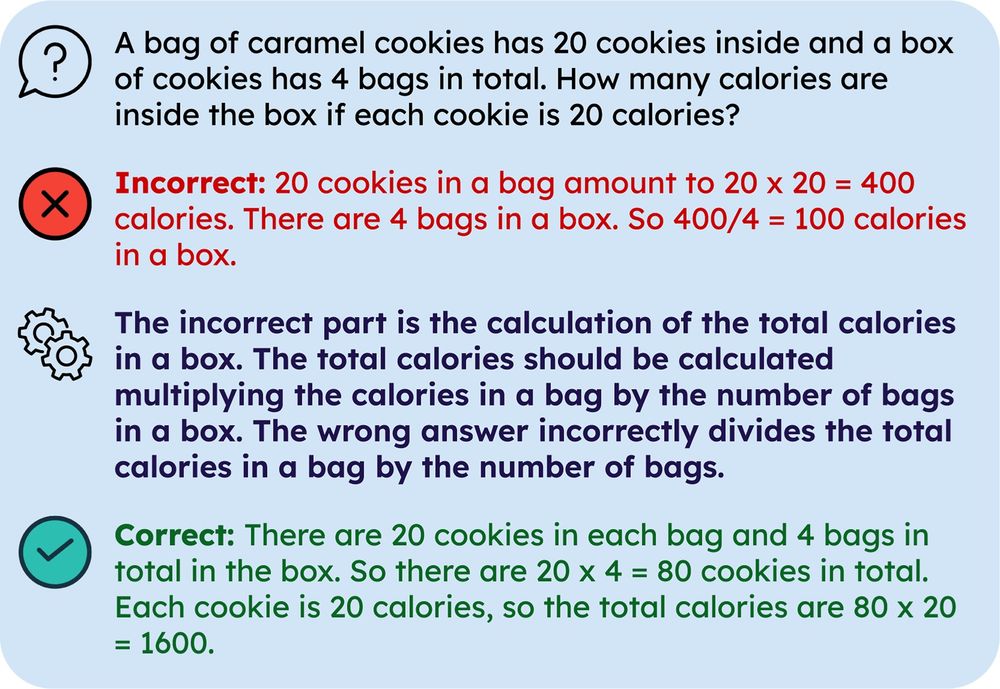

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find that these rationales do not help; in fact, they hurt performance!

🧵

Here are the greetings from spring 🌸👇

To follow us all, click 'follow all' in the starter pack below.

go.bsky.app/Bv5thAb