Thank you @simonwillison.net

My notes here: simonwillison.net/2025/Oct/4/d...

"GEPA: Reflective Prompt Evolution Can Outperform Reinforcement Learning" presents such elegant ideas, from an amazing group of researchers!

Here is a tldr of how it works:

ColBERT vectors are often 10 bytes each. Ten bytes. That’s like 4 numbers.

It’s not “many vectors work better than one vector”. It’s “set similarity works better than dot product”.

Even with the same storage cost.
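
A toy sketch of the difference between the two scoring rules. The 3-dimensional vectors are hand-picked, and mean pooling stands in for a learned single-vector encoder (an assumption: real single-vector models learn their own pooled embedding). Both documents store the same number of token vectors, yet the dot product of pooled vectors cannot tell them apart, while the set-style MaxSim score can.

```python
from typing import List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def mean_pool(vectors: List[Vector]) -> Vector:
    """Average a set of token vectors into one vector (toy single-vector baseline)."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def single_vector_score(query: List[Vector], doc: List[Vector]) -> float:
    """Single-vector relevance: one pooled query vector dotted with one pooled doc vector."""
    return dot(mean_pool(query), mean_pool(doc))


def maxsim_score(query: List[Vector], doc: List[Vector]) -> float:
    """ColBERT-style MaxSim: each query token keeps only its best-matching doc token,
    and those per-token maxima are summed (a set-to-set similarity)."""
    return sum(max(dot(q, d) for d in doc) for q in query)


query = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]     # two distinct query "concepts"
doc_both = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # covers both concepts
doc_one = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]   # covers only the first, twice

print(single_vector_score(query, doc_both), single_vector_score(query, doc_one))  # 0.5 0.5
print(maxsim_score(query, doc_both), maxsim_score(query, doc_one))                # 2.0 1.0
```

Pooling collapses each set into its average, so "covers both concepts" and "covers one concept twice" land on the same score; MaxSim keeps the set structure and rewards the document that matches every query token.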

Late interaction models do embedding vector index queries and reranking at the same time, leading to far higher accuracy.

huggingface.co/NeuML/colber...
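
A minimal sketch of how one set of token vectors can serve both roles. It assumes brute-force nearest-neighbor search over a flat list instead of a real approximate-nearest-neighbor index, and the names `k_per_token` and `top_n` are hypothetical parameters chosen for illustration. Stage 1 uses each query token to pull in candidate documents; stage 2 rescores only those candidates with full MaxSim over the same vectors.

```python
from typing import Dict, List, Set, Tuple

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def maxsim(query: List[Vector], doc: List[Vector]) -> float:
    """Late-interaction score: sum over query tokens of the best-matching doc token."""
    return sum(max(dot(q, d) for d in doc) for q in query)


def build_token_index(corpus: Dict[str, List[Vector]]) -> List[Tuple[str, Vector]]:
    """Flatten every document's token vectors into (doc_id, vector) pairs.
    This flat, brute-force list is a stand-in for a real ANN index."""
    return [(doc_id, vec) for doc_id, vecs in corpus.items() for vec in vecs]


def candidate_docs(query: List[Vector], index: List[Tuple[str, Vector]],
                   k_per_token: int) -> Set[str]:
    """Stage 1 (index query): each query token pulls in the documents that own
    its k nearest token vectors."""
    candidates: Set[str] = set()
    for q in query:
        nearest = sorted(index, key=lambda entry: dot(q, entry[1]), reverse=True)[:k_per_token]
        candidates.update(doc_id for doc_id, _ in nearest)
    return candidates


def search(query: List[Vector], corpus: Dict[str, List[Vector]],
           index: List[Tuple[str, Vector]], k_per_token: int = 2,
           top_n: int = 3) -> List[Tuple[str, float]]:
    """Stage 2 (reranking): score only the candidates with full MaxSim."""
    scored = [(doc_id, maxsim(query, corpus[doc_id]))
              for doc_id in candidate_docs(query, index, k_per_token)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


corpus = {
    "d1": [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
    "d2": [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]],
    "d3": [[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]],
}
index = build_token_index(corpus)
query = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(search(query, corpus, index))  # [('d1', 2.0), ('d2', 1.0)]
```

Here `d3` never reaches the scoring stage because none of its token vectors is near any query token, and the same vectors that found `d1` and `d2` also rank them.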

🔗 Register today 👉 lu.ma/mlflow423

Join the global MLflow community for two exciting tech deep dives:

🔹 MLflow + #DSPy Integration

🔹 Cleanlab + #MLflow

🎥 Streaming live on YouTube, LinkedIn, and X

💬 Live Q&A with the presenters

#opensource #oss

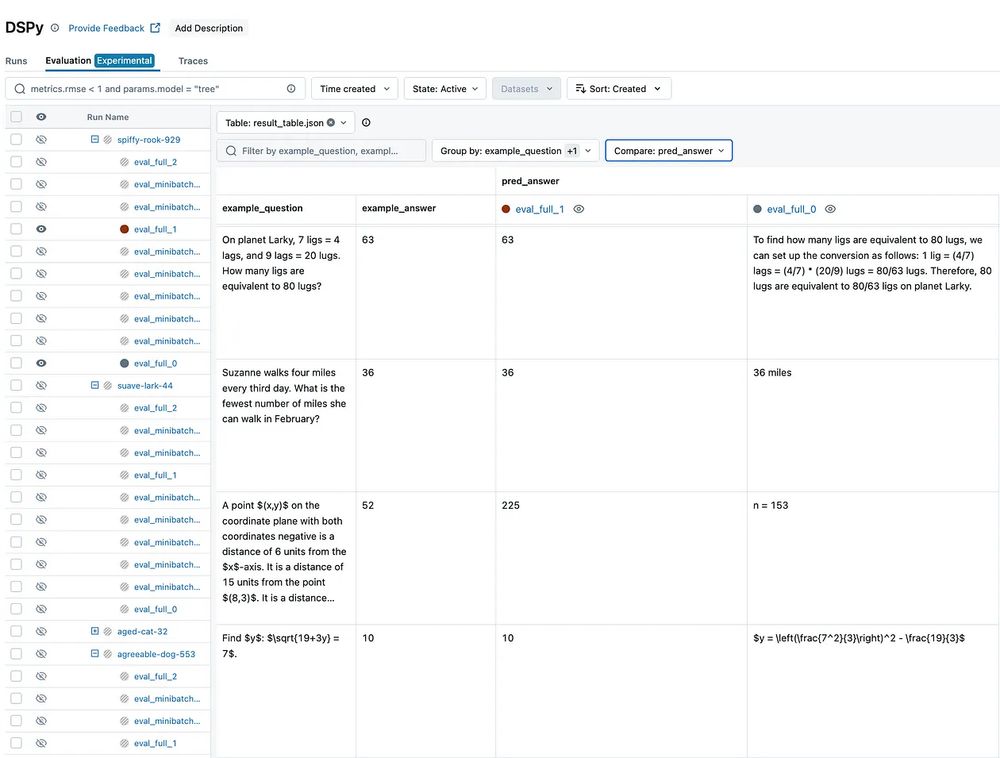

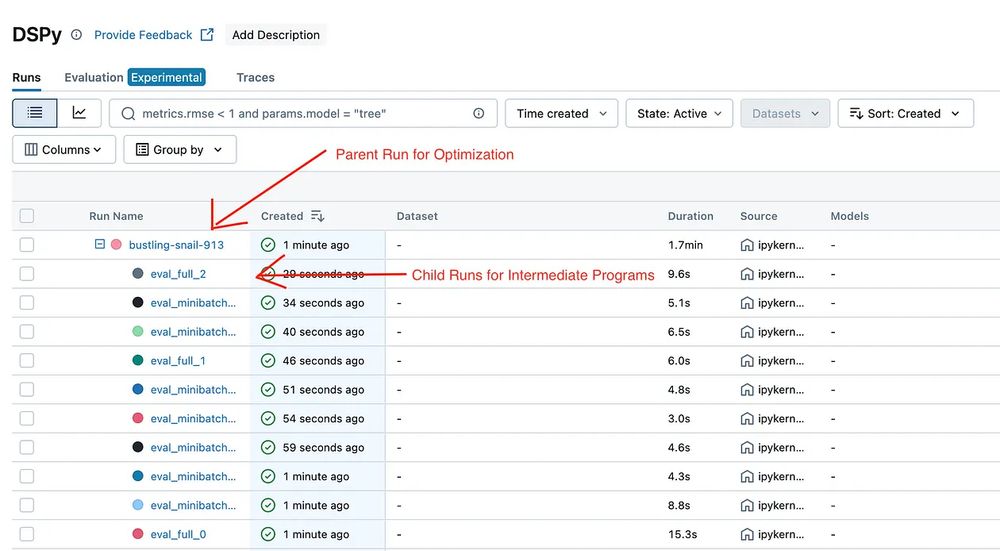

#MLflow is the first to bring full visibility into DSPy’s prompt optimization process. More observability, less guesswork.

Get started today! ➡️ medium.com/@AI-on-Datab...

#opensource

🔹 Explore the new MLflow + #DSPy integration

🔹 Learn how Cleanlab adds trust to AI workflows with MLflow

💬 Live Q&A + demos

📺 Streamed on YouTube, LinkedIn, and X

👉 RSVP: lu.ma/mlflow423

#opensource #mlflow #oss

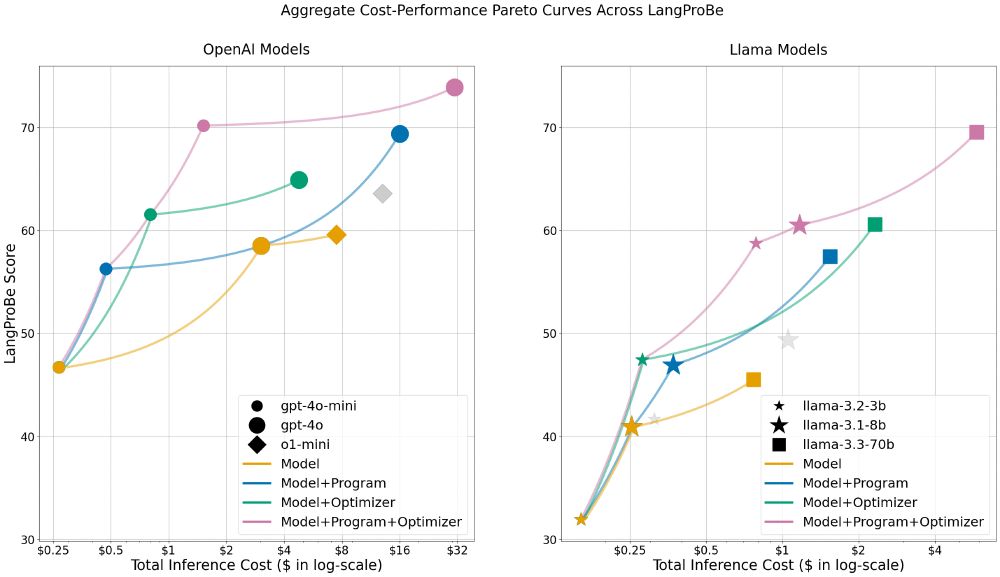

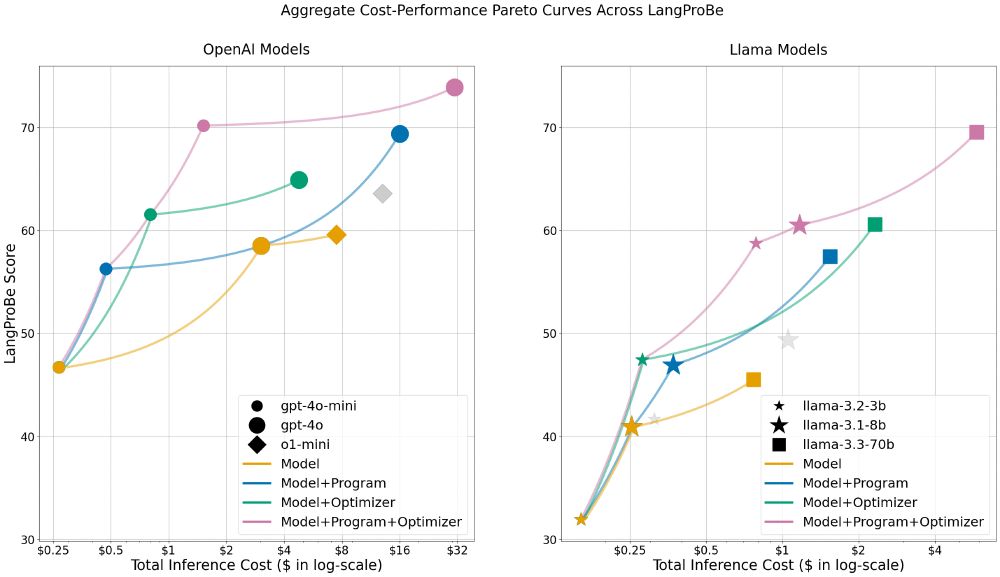

LangProBe, from Shangyin Tan, @lakshyaaagrawal.bsky.social, Arnav Singhvi, Liheng Lai, @michaelryan207.bsky.social, et al., begins to ask which complete *AI systems* we should build, and under what settings.

We find that, on average across diverse tasks, smaller models within optimized programs beat calls to larger models at a fraction of the cost.

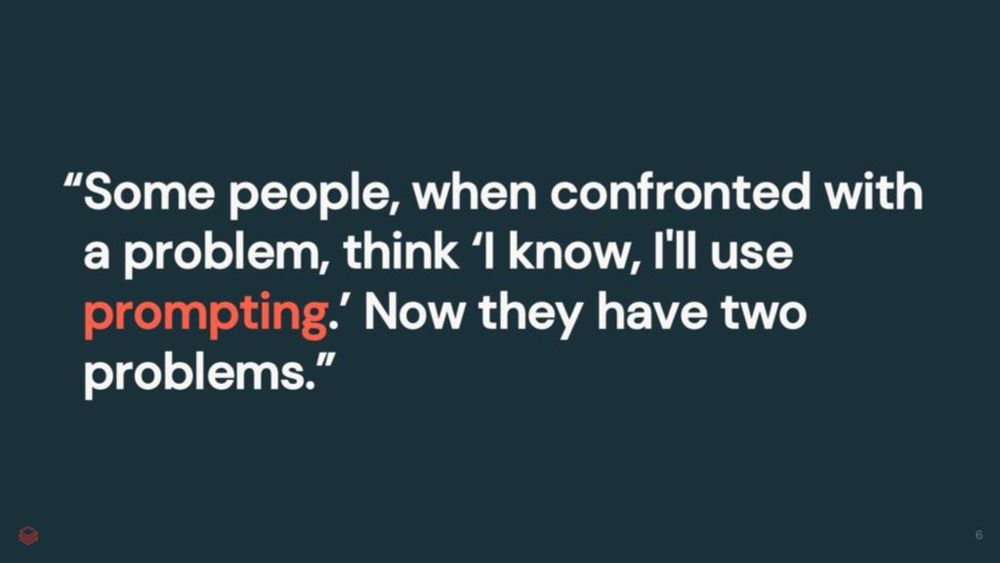

But it's prematurely abstracting that leads to the bitterness of wasted effort, and not "modularity doesn't work for AI". 2/2

It's not that they're not crucial for intelligent software. But it takes building many half-working systems to abstract successfully, and it takes good abstractions to have primitives worth composing.

🧵1/2

Hope this was useful!

But that's not true: if you look at how aggressively ColBERTv2 representations are compressed, it's often ~20 bytes per vector (like 5 floats), which can be smaller than popular uncompressed single vectors!
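
A back-of-the-envelope sketch of that arithmetic. It assumes a ColBERTv2-style layout: 128-dimensional token vectors, each stored as a 4-byte centroid ID plus a residual quantized to 1 or 2 bits per dimension. These parameters, and the 768- and 1024-dimension float32 baselines, are illustrative assumptions rather than figures from the post.

```python
def compressed_token_bytes(dim: int = 128, bits_per_dim: int = 1,
                           centroid_id_bytes: int = 4) -> float:
    """Bytes for one residual-compressed token vector (assumed layout):
    a centroid ID plus bits_per_dim bits of quantized residual per dimension."""
    return centroid_id_bytes + dim * bits_per_dim / 8


def dense_bytes(dim: int, bytes_per_value: int = 4) -> int:
    """Bytes for one uncompressed vector (float32 by default)."""
    return dim * bytes_per_value


one_bit = compressed_token_bytes(bits_per_dim=1)  # 4 + 128/8 = 20.0 bytes
two_bit = compressed_token_bytes(bits_per_dim=2)  # 4 + 256/8 = 36.0 bytes
print(one_bit, one_bit / 4)                       # 20.0 5.0 (five float32 values)
print(two_bit)                                    # 36.0
print(dense_bytes(768), dense_bytes(1024))        # 3072 4096
```

Under these assumptions a 1-bit token vector costs the same as five float32 numbers, two orders of magnitude below a single uncompressed 768-dimensional float32 embedding.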

For ColBERT, you typically *fix* more than you break because you're moving *tokens* in a much smaller (and far more composable!) space.

A dot product is just very hard to learn. An intuition I learned from Menon et al. (2021) is that:
