We show that sign-diverse networks give rise to inherent non-gradient “curl” terms that, depending on network architecture, can destabilize gradient-descent solutions or, paradoxically, accelerate learning beyond pure gradient flow.

🧵⬇️

www.arxiv.org/abs/2510.02765

foodaidforgaza.org

and anyone can donate to the organization they recommend, Gaza soup kitchen, which serves up to 6000 meals daily: givebutter.com/AReeXq

Friday 2-083.

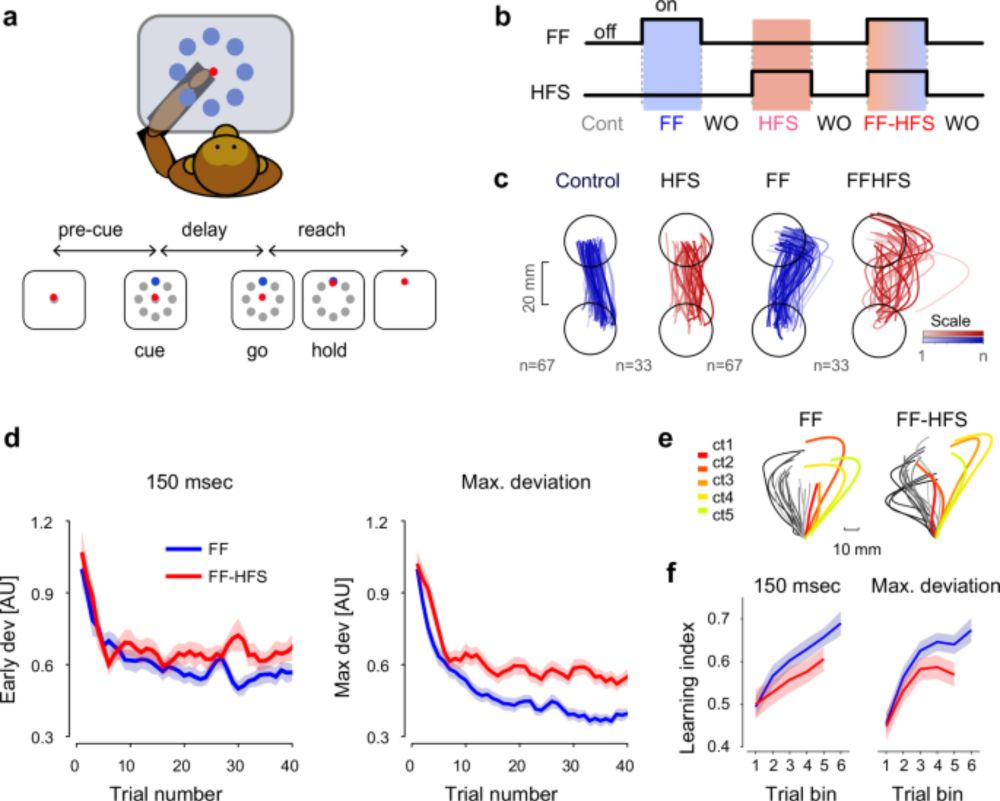

1/n Thrilled to share our latest research, now published in Nature Communications! 🎉 This study dives deep into how the cerebellum shapes cortical preparatory activity during motor adaptation.

www.nature.com/articles/s41...

#neuroskyence #motorcontrol #cerebellum #motoradaptation

How does the brain simultaneously infer both context and meaning from the same stimuli, enabling rapid, flexible adaptation in dynamic environments? www.biorxiv.org/content/10.1...

Led by John Scharcz and @janbauer.bsky.social
