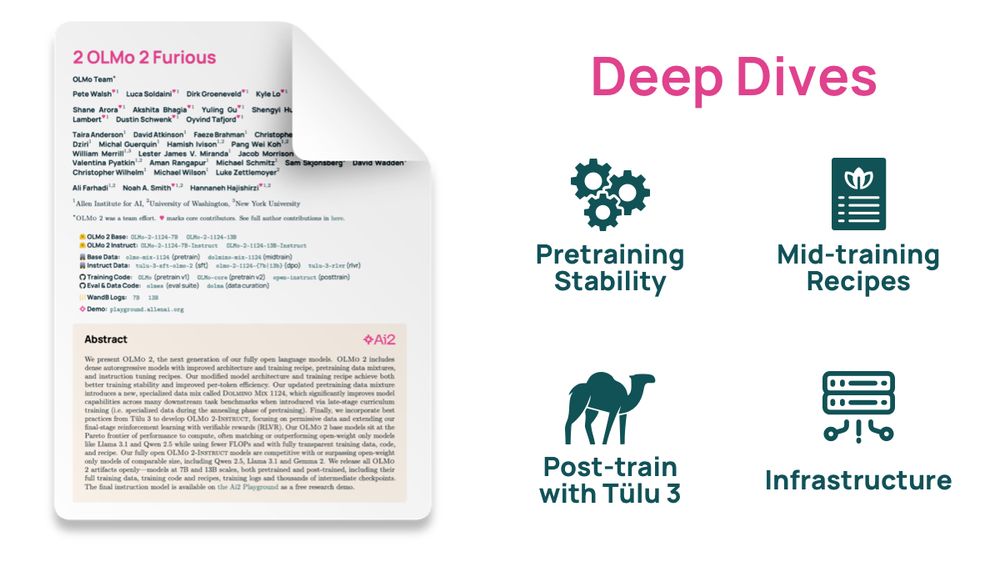

We get in the weeds with this one, with 50+ pages on 4 crucial components of the LLM development pipeline:

Played around with o1 and the ‘thinking’ Gemini model. The CoT output (for Gemini) can be confusing and convoluted, but it got 3/5 problems right. Stopped on the remaining 2.

These models are an impressive interpretability test bed.

We don't have to hope Adam magically finds models that learn useful features; we can optimize for models that encode interpretable features!

[1/n] Does AlphaFold3 "know" biophysics and the physics of protein folding? Are protein language models (pLMs) learning coevolutionary patterns? You can try to guess the answer to these questions using mechanistic interpretability.
