What I think a lot of people who haven't done qualitative research may not understand is that the intended outcome of qualitative research is not only the analysis produced but also the growth of the researcher performing that analysis.

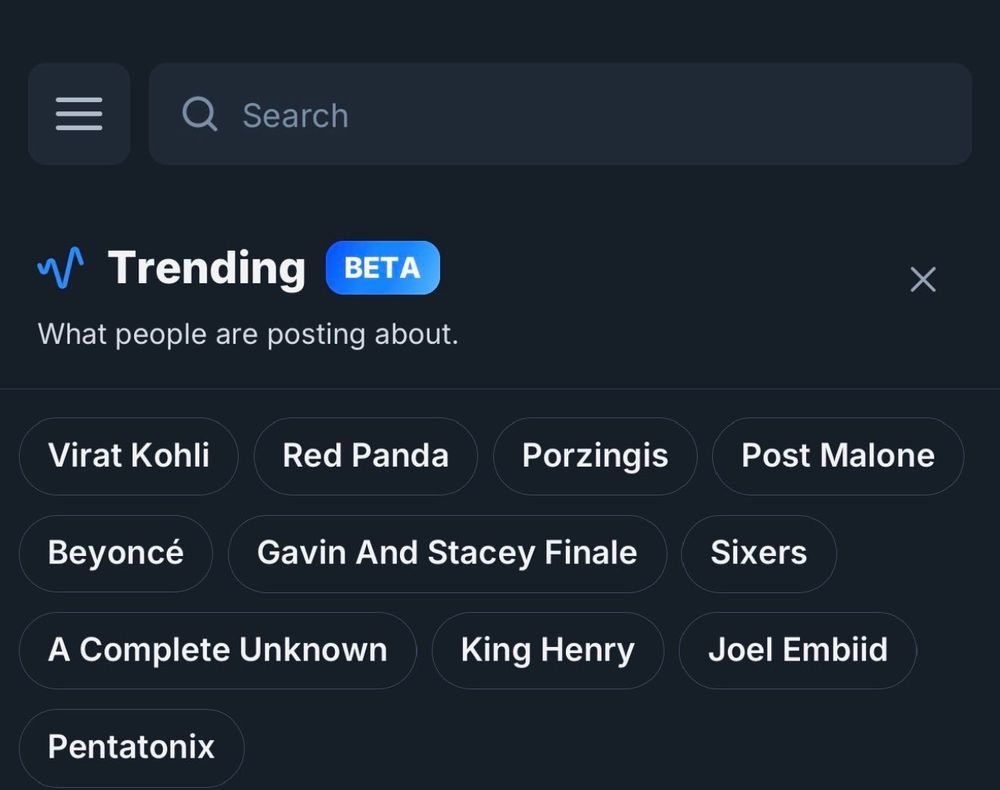

Post Guidance lets moderators prevent rule-breaking by triggering interventions as users write posts!

We implemented Post Guidance on Reddit and tested it in a massive field experiment (n=97k). It became a feature!

arxiv.org/abs/2411.16814