Interested in Computer Vision, Geometry, and learning both at the same time

https://www.jgaubil.com/

We definitely tried to see whether the operations implemented by the layers followed known algorithms. A least-squares-based optimisation like the one in your paper was a good candidate, given how often Procrustes problems show up in 3D vision, but alas, we couldn't identify one.
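For readers unfamiliar with the Procrustes problems mentioned above, here is a minimal sketch of the classic least-squares rigid alignment (the Kabsch solution to orthogonal Procrustes) in Python with NumPy. It only illustrates the kind of known algorithm we were looking for. It is not something we found the layers to implement, and the function name `rigid_procrustes` is hypothetical.

```python
import numpy as np


def rigid_procrustes(src: np.ndarray, dst: np.ndarray):
    """Least-squares rigid alignment (Kabsch): find R, t minimising
    sum_i ||R @ src[i] + t - dst[i]||^2 for two (N, 3) point arrays."""
    mu_src = src.mean(axis=0)
    mu_dst = dst.mean(axis=0)
    # Cross-covariance of the centred point sets
    H = (src - mu_src).T @ (dst - mu_dst)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_dst - R @ mu_src
    return R, t


# Usage: recover a known random rotation and translation
rng = np.random.default_rng(0)
src = rng.normal(size=(100, 3))
q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
if np.linalg.det(q) < 0:
    q[:, 0] *= -1  # turn the orthogonal matrix into a proper rotation
t_true = np.array([0.5, -1.0, 2.0])
dst = src @ q.T + t_true  # row i is q @ src[i] + t_true
R, t = rigid_procrustes(src, dst)
```

The SVD of the cross-covariance gives the optimal rotation in closed form, which is why this problem appears so often in 3D vision pipelines.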

Is this internal iterative refinement a known phenomenon in 3D networks, or are you referring to a specific architecture?

We’re working on a clean version of the code, and we’ll release it once we’re done with the CVPR deadline [7/8]

We identified attention heads specialized in finding correspondences across views.

We can clearly see the geometric refinement on this difficult image pair by visualizing their cross-attention maps! [6/8]
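One simple way to read correspondences off such a head, sketched here under assumptions: the cross-attention map is available as an `(Nq, Nk)` array with view-1 patches as queries and view-2 patches as keys, both indexed row-major on a patch grid of known width. Taking the argmax per query, with an optional mutual-nearest-neighbour check, gives patch-level matches. The helper name and input layout are hypothetical; this is neither DUSt3R's API nor necessarily the readout used in the paper.

```python
import numpy as np


def correspondences_from_attention(attn: np.ndarray, grid_w: int, mutual: bool = True) -> np.ndarray:
    """attn: (Nq, Nk) attention weights from view-1 patches (rows) to
    view-2 patches (columns), both indexed row-major on a grid of width grid_w.
    Returns an (M, 4) int array of matches (y1, x1, y2, x2)."""
    best_k = attn.argmax(axis=1)  # best view-2 patch for every view-1 patch
    q_idx = np.arange(attn.shape[0])
    if mutual:
        best_q = attn.argmax(axis=0)  # best view-1 patch for every view-2 patch
        keep = best_q[best_k] == q_idx  # keep mutual nearest neighbours only
        q_idx, best_k = q_idx[keep], best_k[keep]
    y1, x1 = np.divmod(q_idx, grid_w)
    y2, x2 = np.divmod(best_k, grid_w)
    return np.stack([y1, x1, y2, x2], axis=1)


# Usage: a toy 2x3 patch grid where each patch attends mostly to itself
attn = np.full((6, 6), 0.02) + 0.88 * np.eye(6)
matches = correspondences_from_attention(attn, grid_w=3)
```

The mutual check is a common way to discard ambiguous matches, at the cost of keeping fewer of them.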

Nevertheless, this doesn’t mean cross-attention layers are useless: without them, there would be no communication between views.

This instead suggests that cross- and self-attention layers play very different roles [5/8]

We can observe the impact of each layer on the iterative reconstruction process by comparing the pointmap error before and after the layer.

Here, we plot the error difference for every layer of DUSt3R’s second-view decoder [4/8]
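The per-layer comparison can be sketched as follows. The sketch assumes a hypothetical list of pointmaps decoded after each layer (index 0 being the input to the first layer), a ground-truth pointmap and a validity mask, all as NumPy arrays. The mean Euclidean distance is an assumed error metric and may differ from the one used in the paper. A negative difference means the layer moved the prediction closer to the ground truth.

```python
import numpy as np


def pointmap_error(pred: np.ndarray, gt: np.ndarray, mask: np.ndarray) -> float:
    """Mean Euclidean distance between predicted and ground-truth 3D points
    over valid pixels. pred, gt: (H, W, 3); mask: (H, W) bool."""
    dist = np.linalg.norm(pred - gt, axis=-1)
    return float(dist[mask].mean())


def per_layer_error_delta(layer_pointmaps, gt, mask):
    """Return the error at each stage and the error after each layer
    minus the error before it (one value per layer)."""
    errors = np.array([pointmap_error(p, gt, mask) for p in layer_pointmaps])
    return errors, np.diff(errors)


# Usage: a toy decoder where every layer halves the distance to the ground truth
gt = np.zeros((4, 4, 3))
mask = np.ones((4, 4), dtype=bool)
layer_pointmaps = []
for k in range(4):
    p = np.zeros((4, 4, 3))
    p[..., 0] = 2.0 ** -k  # every point is 2^-k away from the ground truth
    layer_pointmaps.append(p)
errors, deltas = per_layer_error_delta(layer_pointmaps, gt, mask)
```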

For easy image pairs, a good estimate of the relative position emerges early in the decoder, whereas harder pairs require more decoder blocks, sometimes even failing to converge [3/8]

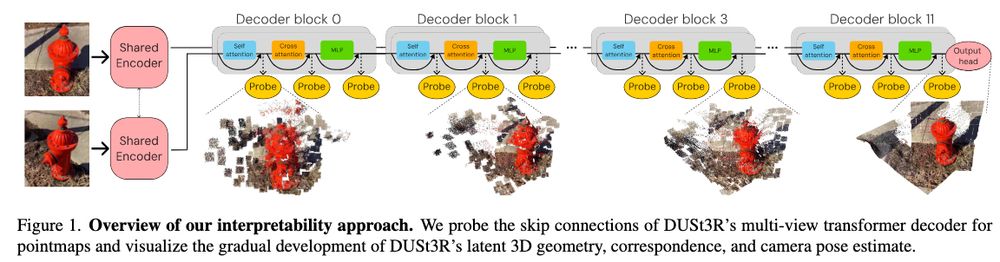

We can then analyze its inference through the sequence of reconstructions - see below! [2/8]

We share findings on the iterative nature of reconstruction, the roles of cross and self-attention, and the emergence of correspondences across the network [1/8] ⬇️

Michal Stary, Julien Gaubil, Ayush Tewari, Vincent Sitzmann

arxiv.org/abs/2510.24907

Trending on www.scholar-inbox.com

We use Dust3r as a black box. This work looks under the hood at what is going on. The internal representations seem to "iteratively" refine towards the final answer. Quite similar to what goes on in point cloud net

Check out our new work: MILo: Mesh-In-the-Loop Gaussian Splatting!

🎉Accepted to SIGGRAPH Asia 2025 (TOG)

MILo is a novel differentiable framework that extracts meshes directly from Gaussian parameters during training.

🧵👇

I’d say it implies multi-view consistency of the geometry and would therefore add an arrow at the left of your chart. Do you agree, and if so, don’t you think we should start there?

Sign up now to be randomly matched with peers for a SIGGRAPH conference coffee!

Are you a researcher of an underrepresented gender registered for SIGGRAPH? Do you want an opportunity to network with your peers? Learn more and sign up here:

www.wigraph.org/events/2025-...

🍵MAtCha reconstructs sharp, accurate and scalable meshes of both foreground AND background from just a few unposed images (e.g., 3 to 10 images)...

...While also working with dense-view datasets (hundreds of images)!
