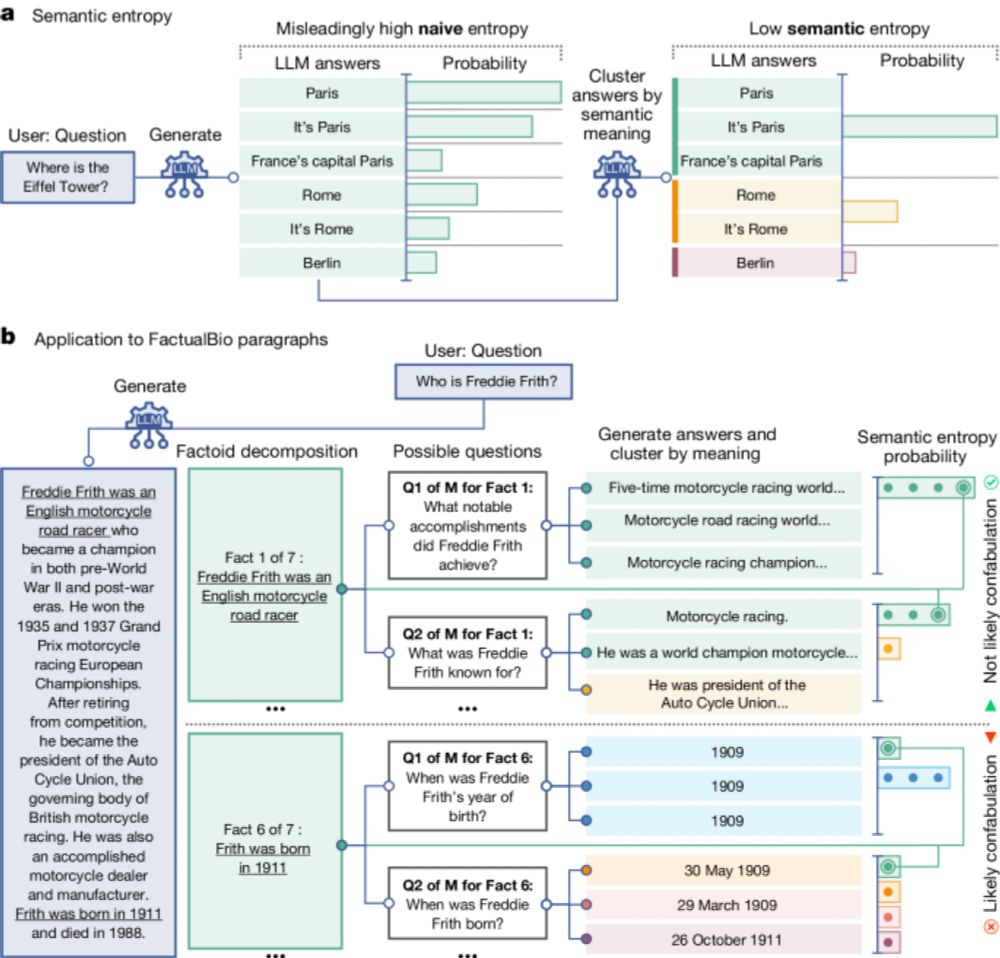

The idea is simple. Cluster the model's potential answers by entailment, so that answers meaning the same thing land in the same group. The entropy over those clusters then tells us how "unsure" the model is, which is linked to confabulation.

www.nature.com/articles/s41...
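
Here is a rough sketch of that idea in Python, not the paper's actual code. It assumes you already have several sampled answers to one question, and it estimates each cluster's probability by simple counting. The `entails` argument and the `toy_entails` helper are hypothetical stand-ins: in practice you would plug in a real entailment (NLI) model there.

```python
import math
from typing import Callable, List


def cluster_by_entailment(
    answers: List[str], entails: Callable[[str, str], bool]
) -> List[List[str]]:
    """Greedily group answers that entail each other in both directions."""
    clusters: List[List[str]] = []
    for ans in answers:
        for cluster in clusters:
            rep = cluster[0]  # compare against the cluster's first member
            if entails(rep, ans) and entails(ans, rep):
                cluster.append(ans)
                break
        else:
            # No existing cluster matched, so start a new one.
            clusters.append([ans])
    return clusters


def semantic_entropy(
    answers: List[str], entails: Callable[[str, str], bool]
) -> float:
    """Entropy (in nats) over meaning clusters, using cluster frequencies."""
    clusters = cluster_by_entailment(answers, entails)
    n = len(answers)
    return sum((len(c) / n) * math.log(n / len(c)) for c in clusters)


def toy_entails(a: str, b: str) -> bool:
    """Hypothetical stand-in for an NLI model: compares the final word only."""
    def key(s: str) -> str:
        return s.lower().rstrip(".!?").split()[-1]
    return key(a) == key(b)


# Answers that agree in meaning collapse into one cluster: low entropy.
consistent = ["Paris", "It is Paris.", "The capital is Paris"]
# Answers that disagree spread over several clusters: high entropy.
scattered = ["Paris", "Lyon", "Marseille", "It is Paris."]

print(semantic_entropy(consistent, toy_entails))
print(semantic_entropy(scattered, toy_entails))
```

Differently worded answers that mean the same thing do not inflate the score, so a high value points to disagreement in meaning rather than in phrasing.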
