aisafetyeventsandtraining.substack.com/p/ai-safety-...

aisafetyeventsandtraining.substack.com/p/ai-safety-...

horizonomega.substack.com/p/guaranteed...

The monthly seminar series grew to 230 subscribers in 2024 and hosted 8 technical talks. We had ~490 RSVPs, with ~76 hours and ~900 views of the recordings. We are seeking funding for 2025; plans include a bibliography and debates.

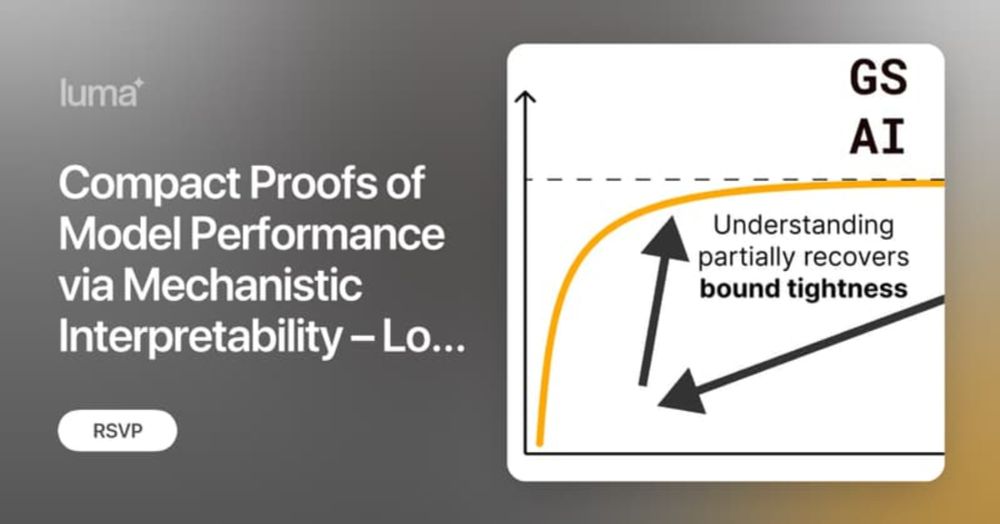

Thu January 9, 18:00-19:00 UTC

Join: lu.ma/08gr7mrs

Part of the Guaranteed Safe AI Seminars

by Louis Jaburi

Thu December 12, 18:00-19:00 UTC

Join: lu.ma/g24bvacw

Last Guaranteed Safe AI seminar of the year

- Guaranteed Safe AI Seminars

- AI Safety Unconference 2025

- AI Safety Events & Training newsletter

- Monthly Montréal AI safety R&D events

- Grow partnerships

We are looking for donations to support this work. More info:

manifund.org/projects/hor...

Bayesian oracles and safety bounds by Yoshua Bengio

Relevant readings:

- yoshuabengio.org/2024/08/29/b...

- arxiv.org/abs/2408.05284

Join: lu.ma/4ylbvs75

by Yoshua Bengio, Scientific Director, Mila & Full Professor, U. Montreal

November 14, 18:00-19:00 UTC

Join: lu.ma/4ylbvs75

Part of the Guaranteed Safe AI Seminars

horizonomega.substack.com/p/announcing...

by Charbel-Raphaël Ségerie & Épiphanie Gédéon

August 8, 17:00-18:00 UTC

Join: lu.ma/xpf046sa

As part of the Guaranteed Safe AI Seminars

Proving safety for narrow AI outputs – Evan Miyazono, Atlas Computing

Thursday, July 11 11:30-12:30 UTC-5

RSVP: lu.ma/2715xmzn

horizonomega.substack.com/p/introducin...

Gaia: Distributed planetary-scale AI safety

By Rafael Kaufmann, Co-founder and CTO.

Thursday June 13, 13:00-14:00 Eastern, online.

Join us!

lu.ma/qn8p4wp4

**Provable AI Safety, Steve Omohundro**

May 9th, 13:00-14:00 EDT, online

lu.ma/3fz12am7

April 11th, 13:00-14:00 EDT. Monthly, on 2nd Thursday.

RSVP: lu.ma/provableaisa...

Talks:

- Synthesizing Gatekeepers for Safe Reinforcement Learning (Sefas)

- Verifying Global Properties of Neural Networks (Soletskyi)

A newsletter listing upcoming events and open calls related to AI safety.

aisafetyeventstracker.substack.com/p/ai-safety-...

Listing upcoming events and open calls related to AI safety

aisafetyeventstracker.substack.com/p/ai-safety-...
