https://haofeixu.github.io/

We learn a recurrent model, ReSplat, that iteratively improves reconstruction quality in a feed-forward manner!

haofeixu.github.io/resplat/

📄 Paper: www.scholar-inbox.com/papers/He202...

arxiv.org/pdf/2508.13148

💻 Code: github.com/autonomousvi...

🌐 Project Page: cli212.github.io/MDPO/

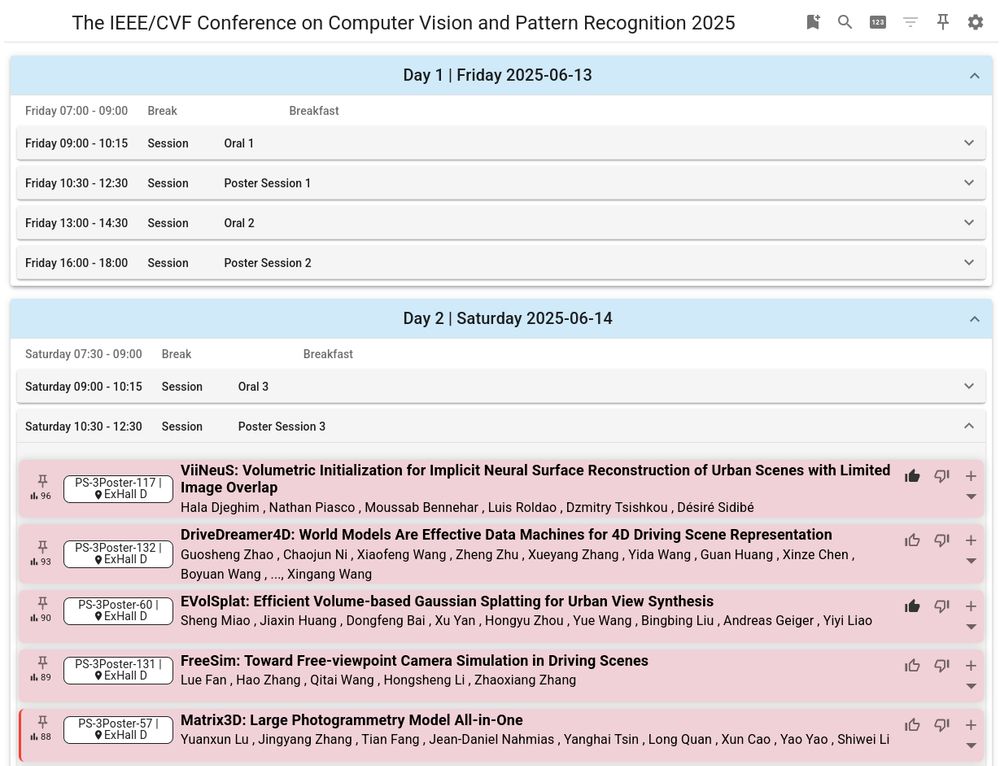

www.scholar-inbox.com/conference/c...

DepthSplat is a feed-forward model that achieves high-quality Gaussian reconstruction and view synthesis in just 0.6 seconds.

Looking forward to great conversations at the conference!

🔗 haofeixu.github.io/depthsplat/

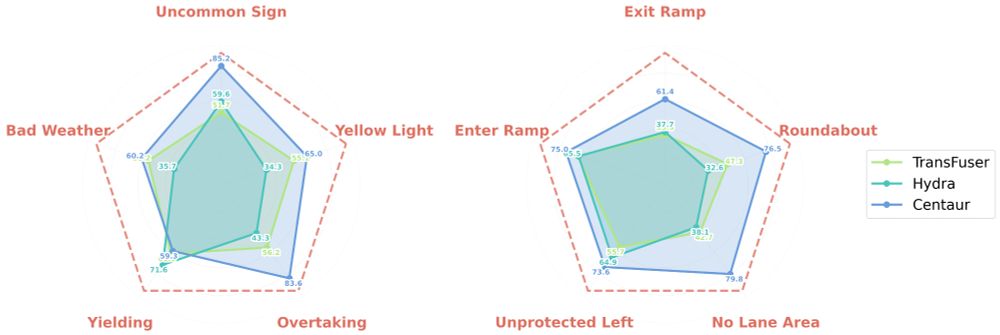

A Vision-Language-Action (VLA) model that achieves state-of-the-art driving performance with language capabilities.

Code: github.com/RenzKa/simli...

Paper: arxiv.org/abs/2503.09594

Can meshes capture fuzzy geometry? Volumetric Surfaces uses adaptive textured shells to model hair and fur without the overhead of splatting or volume rendering. It’s fast, looks great, and runs in real time even on budget phones.

🔗 autonomousvision.github.io/volsurfs/

📄 arxiv.org/pdf/2409.02482

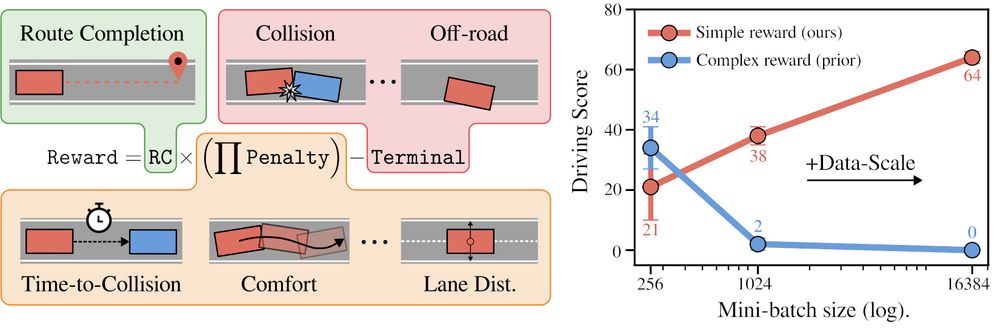

We show how simple rewards enable scaling up PPO for planning.

CaRL outperforms all prior learning-based approaches on nuPlan Val14 and CARLA longest6 v2 while using less inference compute.

arxiv.org/abs/2504.17838
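For readers new to PPO, here is a minimal sketch of its standard clipped surrogate objective in plain Python. This is the textbook PPO objective, not CaRL's code: the function name `ppo_clipped_objective` and the default `clip_eps=0.2` are illustrative assumptions, and CaRL's actual contribution (the simple reward design) is not shown here.

```python
def ppo_clipped_objective(ratios, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate objective (to be maximized).

    Hypothetical helper for illustration only.
    ratios:     pi_new(a|s) / pi_old(a|s) for each sampled action
    advantages: advantage estimate for each sampled action
    """
    if len(ratios) != len(advantages) or not ratios:
        raise ValueError("ratios and advantages must be non-empty and equal length")
    total = 0.0
    for r, a in zip(ratios, advantages):
        unclipped = r * a
        # Clamp the probability ratio to [1 - eps, 1 + eps].
        clipped_ratio = max(min(r, 1.0 + clip_eps), 1.0 - clip_eps)
        clipped = clipped_ratio * a
        # Pessimistic bound: take the smaller of the two surrogate terms.
        total += min(unclipped, clipped)
    return total / len(ratios)


if __name__ == "__main__":
    print(ppo_clipped_objective([1.5, 0.5, 1.0], [1.0, -1.0, 2.0]))
```

Taking the minimum of the clipped and unclipped terms removes any incentive to push the ratio far from 1, which keeps each policy update close to the data-collecting policy.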

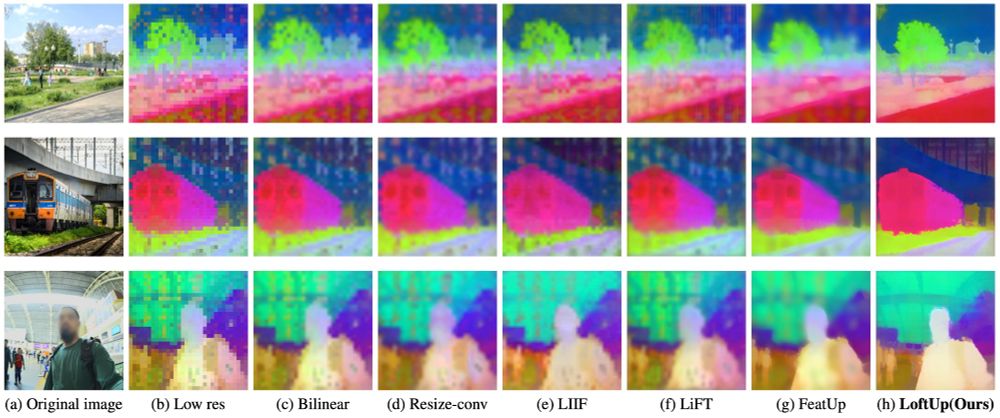

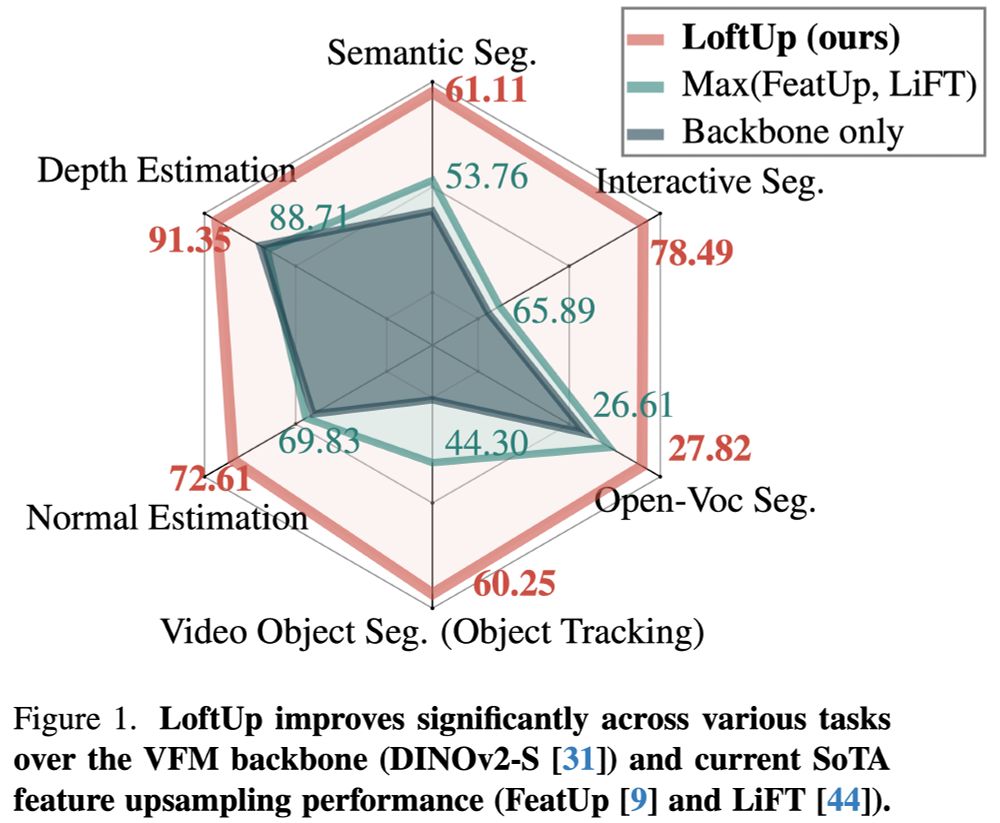

A stronger-than-ever, lightweight feature upsampler for vision encoders that can boost performance on dense prediction tasks by 20%–100%!

Easy to plug into models like DINOv2, CLIP, and SigLIP. Simple design, big gains. Try it out!

github.com/andrehuang/l...