Greta Warren

@gretawarren.bsky.social

Postdoc at the University of Copenhagen interested in human-AI interaction 👩‍💻, explainable AI 🔎 & NLP 📑.

Currently researching human-centred explainable fact-checking.

🌐 https://gretawarren.github.io/

📍 🇩🇰/🇮🇪

Pinned

Greta Warren

@gretawarren.bsky.social

· Feb 14

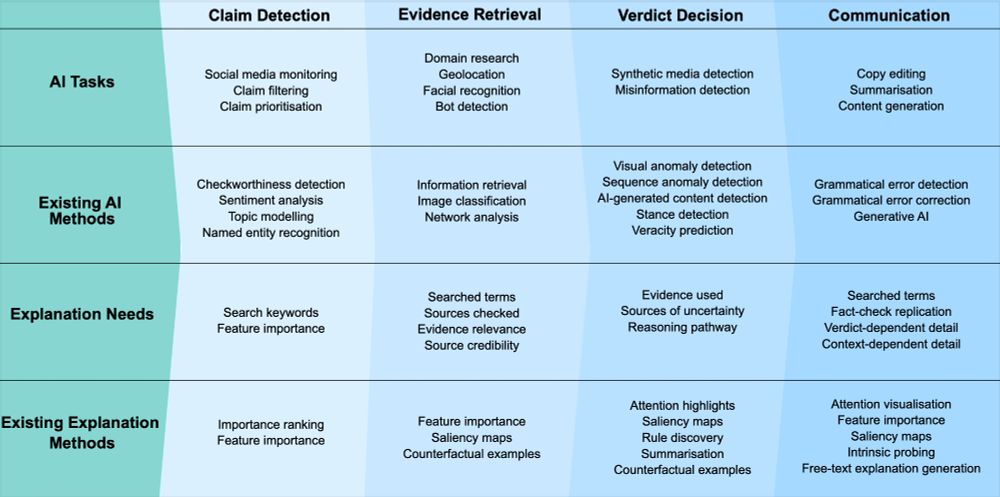

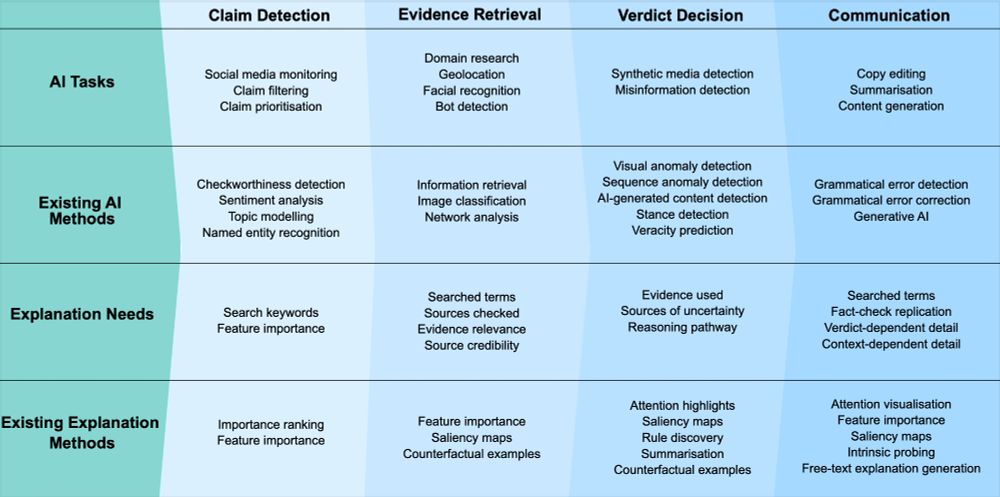

How can explainable AI empower fact-checkers to tackle misinformation? 📰🤖

We interviewed fact-checkers & identified explanation needs & design implications for #NLP, #HCI, and #XAI.

Excited to present this work with @iaugenstein.bsky.social & Irina Shklovski at #chi2025!

arxiv.org/abs/2502.09083

Reposted by Greta Warren

- Fully funded PhD fellowship on Explainable NLU: apply by 31 October 2025, start in Spring 2026: candidate.hr-manager.net/ApplicationI...

- Open-topic PhD positions: express your interest through ELLIS by 31 October 2025, start in Autumn 2026: ellis.eu/news/ellis-p...

#NLProc #XAI

PhD fellowship in Explainable Natural Language Understanding Department of Computer Science Faculty of SCIENCE University of Copenhagen

The Natural Language Processing Section at the Department of Computer Science, Faculty of Science at the University of Copenhagen invites applicants for a PhD f

candidate.hr-manager.net

September 1, 2025 at 2:20 PM

Reposted by Greta Warren

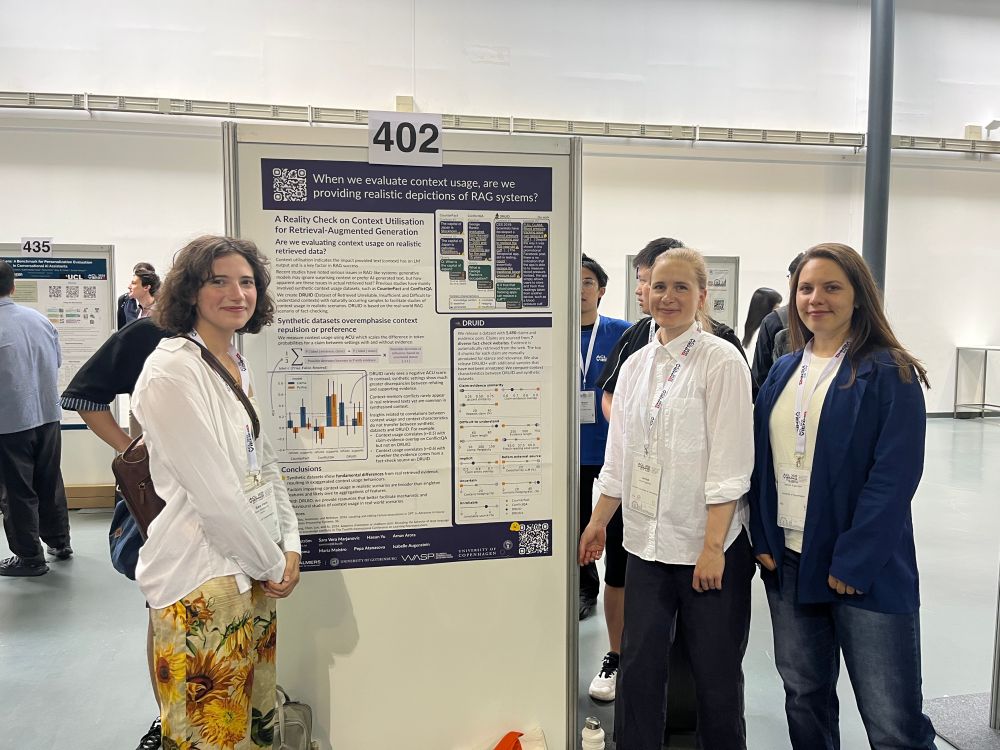

Thanks to everyone who came to our #acl2025nlp presentations (even the informal ones). Hope to connect again at #emnlp2025!

#NLProc @siddheshp.bsky.social @lovhag.bsky.social @saravera.bsky.social @nadavb.bsky.social @gretawarren.bsky.social @iaugenstein.bsky.social

August 4, 2025 at 9:26 AM

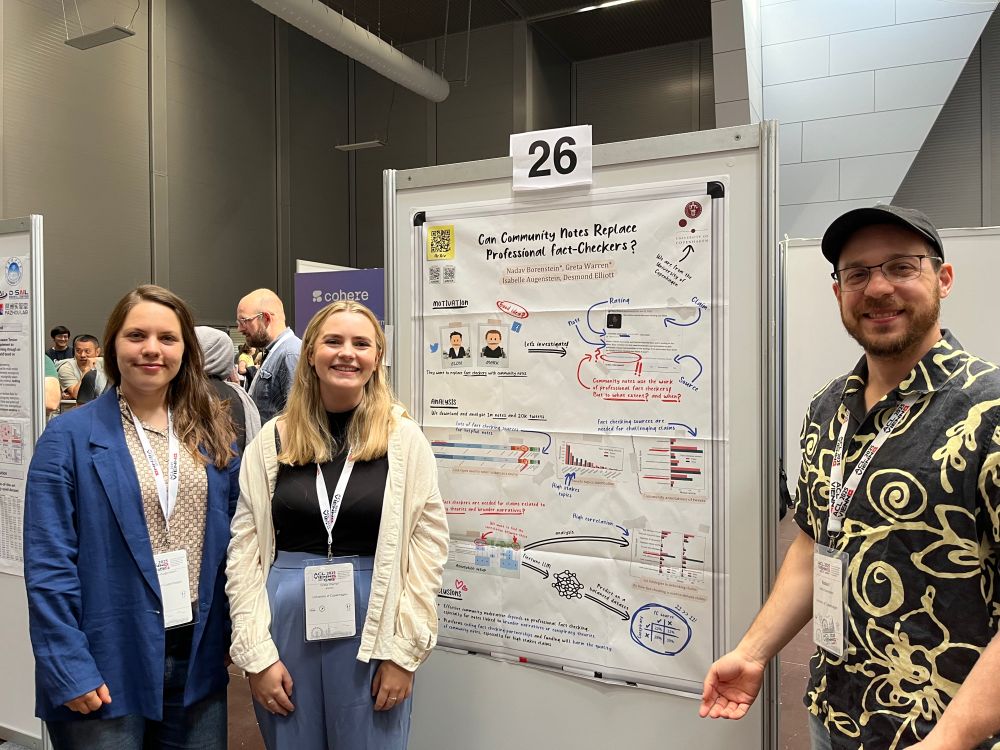

Presenting this today at #ACL2025NLP!

Come to Board 26 in Hall 5X to talk about the relationship between community notes and fact-checking and the future of content moderation 🔎

July 30, 2025 at 6:23 AM

Reposted by Greta Warren

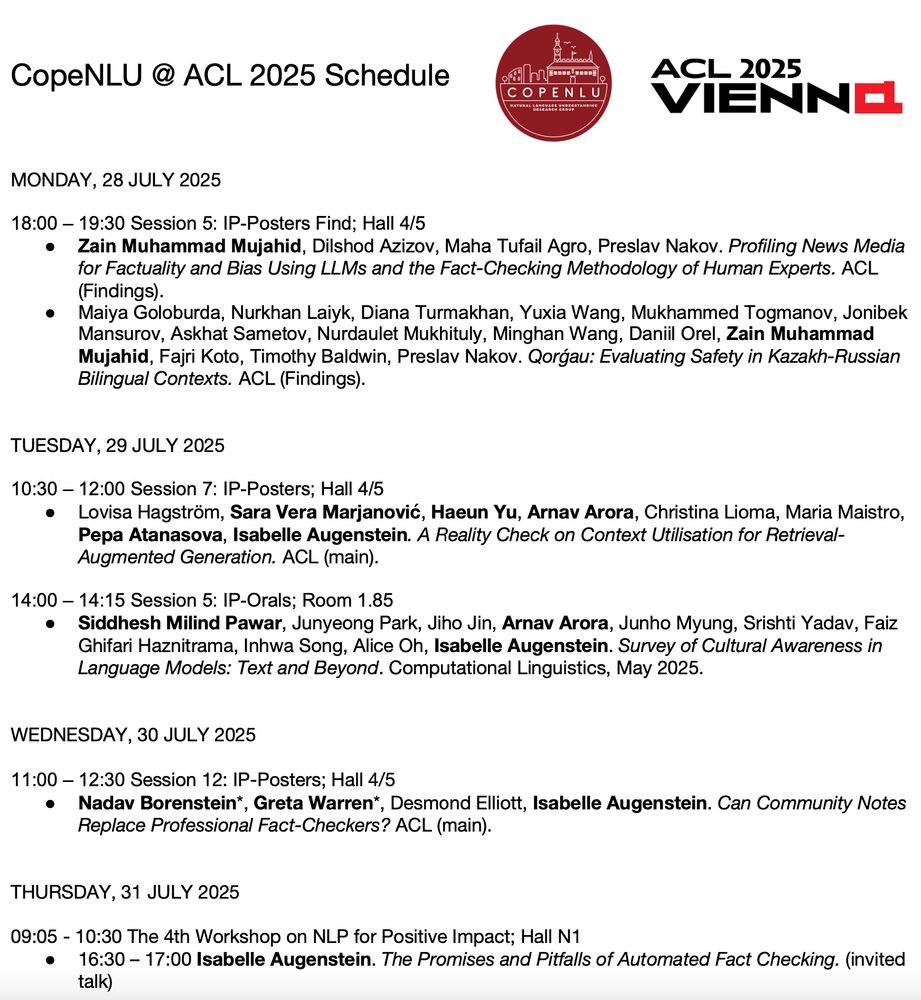

CopeNLU will give five paper presentations and one invited talk at #ACL2025NLP this week ⤵️

@apepa.bsky.social @rnv.bsky.social @nadavb.bsky.social @iaugenstein.bsky.social @gretawarren.bsky.social @saravera.bsky.social @lovhag.bsky.social @zainmujahid.me @siddheshp.bsky.social

July 28, 2025 at 5:26 AM

Reposted by Greta Warren

Congrats to the best poster awardees!

@ajyl.bsky.social Shared Geometry of LMs: arxiv.org/pdf/2503.21073

Antonia Karamolegkou, Multimodal LMs for Visual Impairment: aclanthology.org/2025.acl-lon...

@gretawarren.bsky.social Can Community Notes Replace Fact-Checkers? aclanthology.org/2025.acl-short

July 27, 2025 at 11:34 AM

Reposted by Greta Warren

🚨 𝐖𝐡𝐚𝐭 𝐡𝐚𝐩𝐩𝐞𝐧𝐬 𝐰𝐡𝐞𝐧 𝐭𝐡𝐞 𝐜𝐫𝐨𝐰𝐝 𝐛𝐞𝐜𝐨𝐦𝐞𝐬 𝐭𝐡𝐞 𝐟𝐚𝐜𝐭-𝐜𝐡𝐞𝐜𝐤𝐞𝐫?

new "Community Moderation and the New Epistemology of Fact Checking on Social Media"

with I Augenstein, M Bakker, T. Chakraborty, D. Corney, E Ferrara, I Gurevych, S Hale, E Hovy, H Ji, I Larraz, F Menczer, P Nakov, D Sahnan, G Warren, G Zagni

arxiv.org

June 1, 2025 at 7:48 AM

Reposted by Greta Warren

Join us in Copenhagen for the Pre-ACL 2025 Workshop! 🇩🇰

We’re excited to welcome researchers and practitioners in Natural Language Processing, Generative AI, and Language Technology to a one-day workshop on 26 July 2025 – just ahead of ACL 2025 in Vienna.

Learn more: www.aicentre.dk/events/pre-a...

May 28, 2025 at 3:53 PM

Reposted by Greta Warren

I am happy to share that the paper got accepted to the main proceedings of ACL 2025 in Vienna! See you there!

arxiv.org/abs/2502.14132

May 28, 2025 at 6:42 AM

Reposted by Greta Warren

🚨 Please share with your network as I will be considering applications on a rolling basis for this exciting (and fully funded💰) PhD project at @tcddublin.bsky.social on Explaining and Mitigating Bias in Advice from Large Language Models 🤖💬🔍🤨 Details - www.tcd.ie/media/tcd/sc...

www.tcd.ie

May 14, 2025 at 1:33 PM

Having an amazing time so far in Yokohama for #CHI2025! 🌸

I'll be presenting our paper, Show Me the Work: Fact-Checkers' Requirements for Explainable Automated Fact-Checking, at the Explainable AI session tomorrow! ✨

📅 Monday, April 28 | 4:56 PM

📍G303

🔗 programs.sigchi.org/chi/2025/pro...

April 27, 2025 at 5:21 AM

Reposted by Greta Warren

🎓 Fully funded PhD in interpretable NLP at the University of Copenhagen & @aicentre.dk with @iaugenstein.bsky.social and me, @copenlu.bsky.social

📆 Application deadline: 15 May 2025

👀 Reasons to apply: www.copenlu.com/post/why-ucph/

🔗 Apply here: candidate.hr-manager.net/ApplicationI...

#NLProc #XAI

Interested in joining us at the University of Copenhagen? | CopeNLU

The University of Copenhagen is a great place if you're both interested in high-quality NLP research and a high quality of life.

www.copenlu.com

April 11, 2025 at 9:10 AM

Reposted by Greta Warren

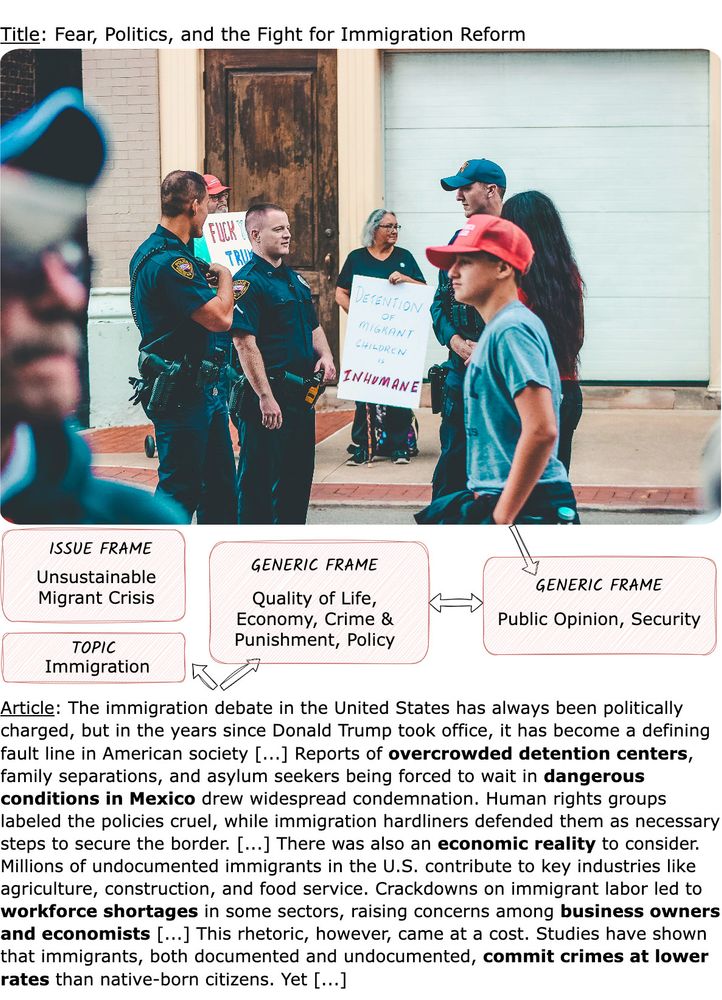

🚨New pre-print 🚨

News articles often convey different things in text vs. image. Recent work in computational framing analysis has analysed the article text but the corresponding images in those articles have been overlooked.

We propose multi-modal framing analysis of news: arxiv.org/abs/2503.20960

April 7, 2025 at 9:20 AM

Reposted by Greta Warren

🚨 Preprint alert 🚨

𝐂𝐚𝐧 𝐂𝐨𝐦𝐦𝐮𝐧𝐢𝐭𝐲 𝐍𝐨𝐭𝐞𝐬 𝐑𝐞𝐩𝐥𝐚𝐜𝐞 𝐏𝐫𝐨𝐟𝐞𝐬𝐬𝐢𝐨𝐧𝐚𝐥 𝐅𝐚𝐜𝐭-𝐂𝐡𝐞𝐜𝐤𝐞𝐫𝐬?

(arxiv.org/abs/2502.14132)

Fact-checking agencies have come under intense scrutiny in recent months regarding their role in combating misinformation on social media.

February 21, 2025 at 10:30 AM

Reposted by Greta Warren

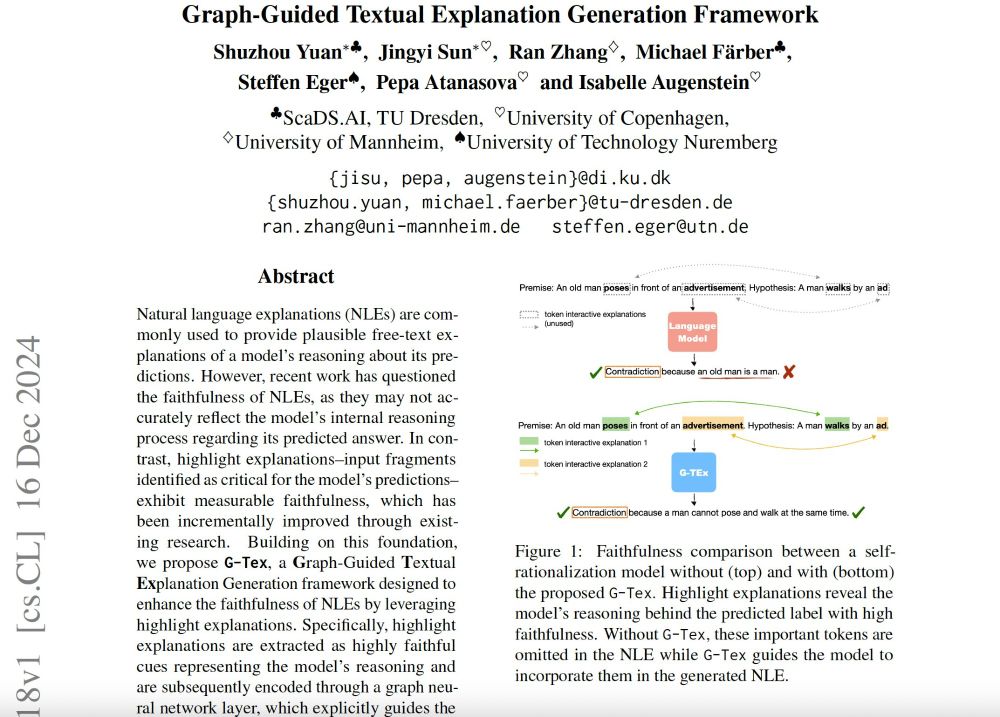

Check out our new work on making natural language explanations more faithful to a model’s reasoning. We combine GNNs + highlight explanations to improve faithfulness!

Read more: arxiv.org/pdf/2412.12318

@copenlu.bsky.social

February 17, 2025 at 8:33 PM

How can explainable AI empower fact-checkers to tackle misinformation? 📰🤖

We interviewed fact-checkers & identified explanation needs & design implications for #NLP, #HCI, and #XAI.

Excited to present this work with @iaugenstein.bsky.social & Irina Shklovski at #chi2025!

arxiv.org/abs/2502.09083

February 14, 2025 at 9:05 AM
