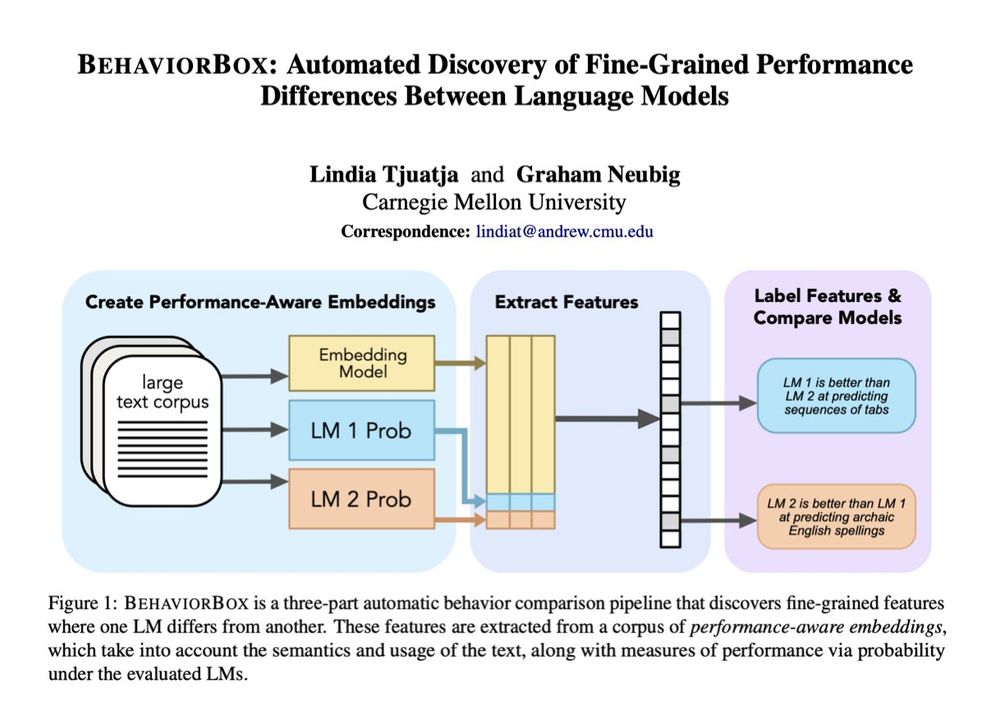

We examine this from first principles, performing unsupervised discovery of "abilities" that one model has and the other does not.

Results show interesting differences between model classes, sizes and pre-/post-training.

🧵1/9

Tired of coding agents wasting time and API credits, only to output broken code? What if they asked first instead of guessing? 🚀

(New work led by Sanidhya Vijay: www.linkedin.com/in/sanidhya-...)

Slides: phontron.com/class/anlp-f...

Videos: youtube.com/playlist?lis...

Hope this is useful to people 😀

@uwnlp.bsky.social & Ai2

With open models & 45M-paper datastores, it outperforms proprietary systems & matches human experts.

Try out our demo!

openscholar.allen.ai

🌟 In our new paper, we rethink how we should be controlling for these factors 🧵: