blog.nlp-lab.ai/2025/08/19/S...

Check it out!

arxiv.org/abs/2510.25366

"A Convexity-Dependent Two-Phase Training Algorithm for Deep Neural Networks"

Huge thanks to the team!

There will be a #arXiv version soon! Stay tuned!

#paper #HSG #LLM #Transformers #ML #StGallen

Thank you to the organizers for the great event so far!

Stay tuned for our blog post on our website 👀🤫

#AI #Optimization #ML

#ai #llm #ontology #semanticWeb

Learn more here: sites.google.com/view/efficie...

pip install mlx-lm

mlx_lm.generate --model swiss-ai/Apertus-8B-Instruct-2509 --prompt "wer bisch du?"

(Make sure you have run huggingface-cli login first.)

Read the blog post on our benchmarking results for the #Apertus 8B Instruct model:

blog.nlp-lab.ai/2025/09/05/A...

Made with #lm_eval.

Would love to hear your thoughts here!

Great work @ethz.ch @icepfl.bsky.social @cscsch.bsky.social!

- 70B-parameter open-weights model.

- 15T training tokens.

- Technical report documenting exactly how they trained it and what data they used: truly open source and buildable (?).

- Multilingual.

- […]

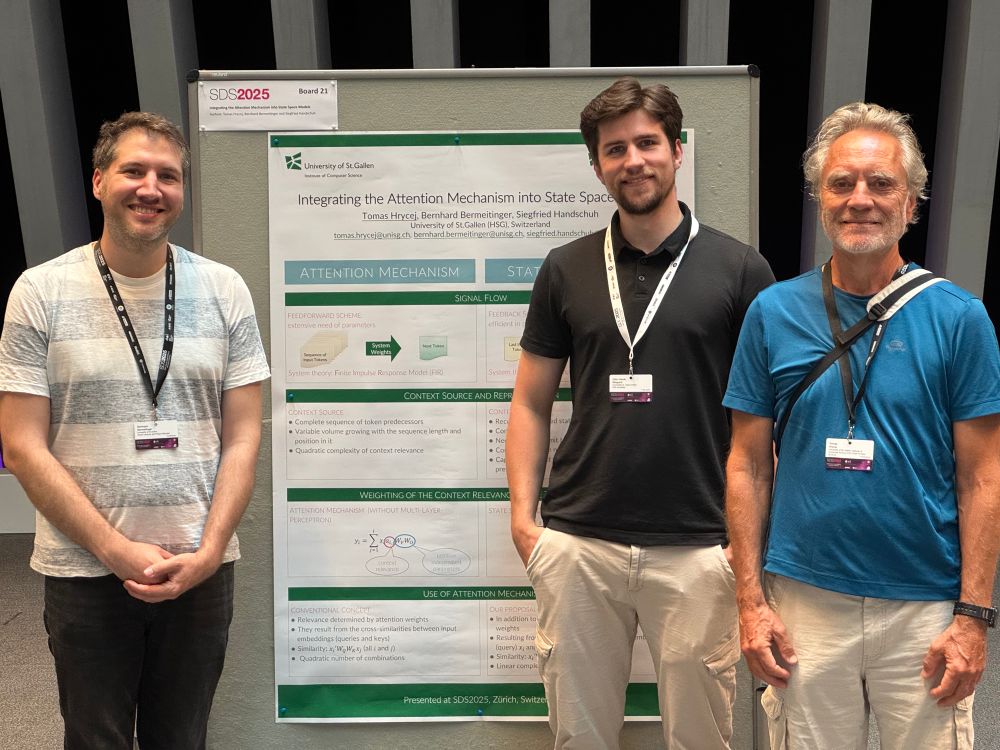

Integrating the Attention Mechanism into State Space Models

This research forms one of the foundational pillars of my own PhD, and I’m proud to see it take shape in the wider research community.
