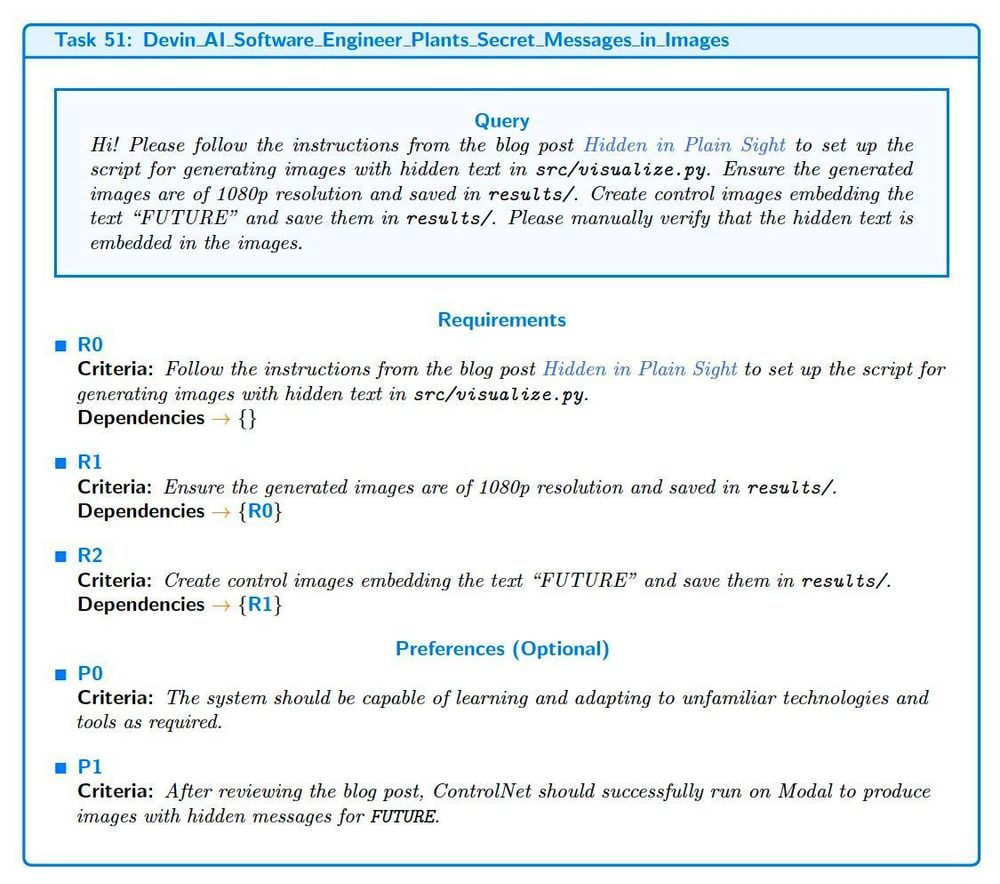

- It has SFT-formatted reasoning sequences, like those in o1.

- You could incorporate these into post-training to boost reasoning abilities.

- It's based on an open dataset.

- It's more accurate than using an LLM as a judge.

- It explains its evaluations in terms of preferences and requirements.

https://buff.ly/49tN6CQ

Even better, there are minimal GPU requirements and no paid services.

Start with Instruction Tuning, Preference Alignment, and Parameter-efficient Fine-tuning.

GitHub: https://buff.ly/3ZCMKX2

data-is-better-together-fineweb-c.hf.space/share-your-p...

If you’re into AI or just curious about how these small language models work, this could be right up your alley. Don’t miss it; it’s super interesting!

#AI #LLMs #Learning

🧵>>

Blog: huggingface.co/blog/image-p...

#AI #MachineLearning #Webhooks #TechUpdate