Reviewers will be more biased than a crowd. A small panel is a high-variance, high-bias estimator, and that can harm research.
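
A toy simulation can make the estimator framing concrete. It is a sketch under assumptions that are not in the post. Each rater carries a fixed Gaussian offset (their personal bias) plus independent per-judgement noise, and a "crowd" is simply a large random sample from the same rater pool. `panel_stats` and all of its parameters are hypothetical.

```python
import math
import random
import statistics

def panel_stats(k, pool_size=1000, panels=2000, sigma_bias=1.0, sigma_noise=1.0, seed=0):
    """Toy model of a k-rater panel (hypothetical, Gaussian assumptions).

    Returns (spread of the panel's systematic offset across randomly drawn panels,
             per-paper noise std of the panel's mean score).
    """
    rng = random.Random(seed)
    # Each rater in the pool has a fixed personal offset: their bias.
    pool = [rng.gauss(0.0, sigma_bias) for _ in range(pool_size)]
    # A panel's systematic error is the mean offset of the k raters it happens to draw.
    panel_offsets = [statistics.fmean(rng.sample(pool, k)) for _ in range(panels)]
    bias_spread = statistics.pstdev(panel_offsets)
    # Independent per-judgement noise averages down as 1/sqrt(k).
    noise_std = sigma_noise / math.sqrt(k)
    return bias_spread, noise_std

for k in (3, 100):
    bias, noise = panel_stats(k)
    print(f"k={k:>3}: systematic offset spread ~ {bias:.3f}, per-paper noise std = {noise:.3f}")
```

In this model the offset a panel happens to draw does not average out across the papers it scores, so it behaves as bias for all of them; its spread shrinks roughly as 1/sqrt(k). The model ignores biases shared by the whole pool, which a crowd would not remove either.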

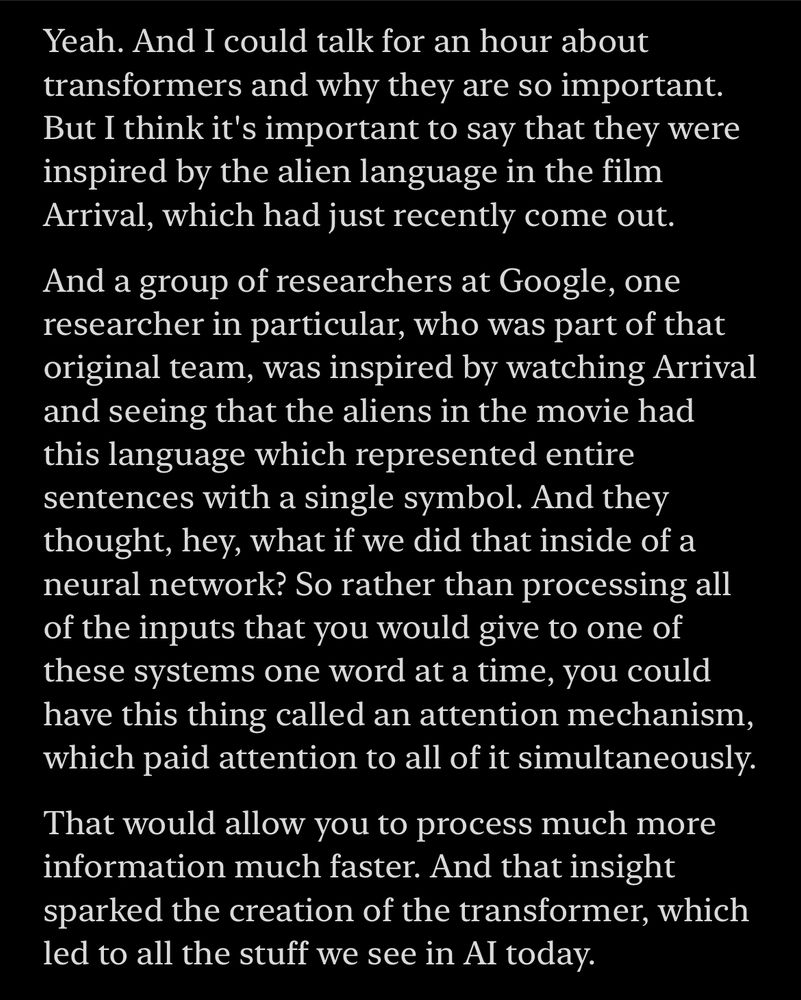

For a reasonable N of 2048, these are the computed frequencies (the values passed to cos(x) and sin(x)), in fp32 above and bf16 below.

Given how short the period of simple trig functions is (2π), this difference is catastrophic for large values.
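
A minimal sketch of the effect, assuming the "frequencies" are RoPE-style angles pos × base^(-i/d). The post does not say which scheme produced the plot, and `base = 10000` and `head_dim = 64` are assumed values. The sketch emulates fp32 and bf16 by rounding every intermediate in pure Python; real kernels may order or fuse these roundings differently. `to_fp32`, `to_bf16` and `max_angle_error` are hypothetical helpers.

```python
import math
import struct

def to_fp32(x: float) -> float:
    """Round a Python float (fp64) to the nearest fp32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def to_bf16(x: float) -> float:
    """Round to bfloat16 (round-to-nearest-even) by keeping the top 16 bits of the fp32 pattern.

    Note: x is first rounded to fp32, so this is a double rounding fp64 -> fp32 -> bf16.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # rounding bias for round-to-nearest-even
    bits = ((bits >> 16) << 16) & 0xFFFFFFFF
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def max_angle_error(rounder, n_positions=2048, head_dim=64, base=10000.0):
    """Largest |angle error| over all positions and frequencies when each step is rounded."""
    worst = 0.0
    for i in range(0, head_dim, 2):
        inv_freq = base ** (-i / head_dim)
        for pos in range(n_positions):
            exact = pos * inv_freq
            approx = rounder(rounder(float(pos)) * rounder(inv_freq))
            worst = max(worst, abs(approx - exact))
    return worst

print(f"fp32 worst angle error: {max_angle_error(to_fp32):.2e} rad")
print(f"bf16 worst angle error: {max_angle_error(to_bf16):.2f} rad")
```

Near position 2047, adjacent bf16 values are 8 apart, more than one full 2π period, so rounding the angle can move cos and sin by a large fraction of a period.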

Previous record: 5.03 minutes

Changelog:

- FlexAttention blocksize warmup

- hyperparameter tweaks
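
One plausible reading of "blocksize warmup", sketched under assumptions: the sliding attention window, counted in blocks, grows linearly over training. The schedule shape, `window_blocks`, `in_window`, the block counts, and the 128-token block size are all hypothetical and are not taken from the record's code.

```python
BLOCK_TOKENS = 128  # hypothetical tokens per attention block

def window_blocks(step: int, total_steps: int, min_blocks: int = 1, max_blocks: int = 16) -> int:
    """Hypothetical linear warmup: the window grows from min_blocks to max_blocks blocks."""
    frac = min(max(step / max(total_steps, 1), 0.0), 1.0)
    return min_blocks + round(frac * (max_blocks - min_blocks))

def in_window(q_idx: int, kv_idx: int, window_tokens: int) -> bool:
    """Causal sliding-window rule: a query sees itself and the previous window_tokens - 1 keys."""
    return kv_idx <= q_idx and q_idx - kv_idx < window_tokens

for step in (0, 250, 500, 1000):
    blocks = window_blocks(step, total_steps=1000)
    print(f"step {step:>4}: {blocks:>2} blocks = {blocks * BLOCK_TOKENS} tokens")
```

A small early window keeps attention cheap while the model mostly needs local context; the rule in `in_window` is the kind of predicate a block mask would be built from.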

So you can attend to a local neighbourhood plus registers or a text condition.

It lets you reduce partial attention results into a single result using the logsumexp (LSE) each one comes with (e.g. as provided by xformers APIs).

github.com/SHI-Labs/NAT...
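
The reduction relies on a standard identity. If two attention calls over disjoint key sets return outputs o_a, o_b with logsumexps L_a, L_b, the combined result is L = log(e^L_a + e^L_b) and o = e^(L_a - L) o_a + e^(L_b - L) o_b. Below is a plain-Python sketch for a single query; `attend` and `merge` are hypothetical helpers, not the NATTEN or xformers APIs.

```python
import math

def attend(q, keys, values):
    """Softmax attention for one query; returns (output vector, logsumexp of the scores)."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    m = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    out = [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(len(values[0]))]
    return out, m + math.log(total)

def merge(out_a, lse_a, out_b, lse_b):
    """Combine two partial attention results computed over disjoint key sets."""
    lse = max(lse_a, lse_b) + math.log1p(math.exp(-abs(lse_a - lse_b)))  # stable logaddexp
    wa, wb = math.exp(lse_a - lse), math.exp(lse_b - lse)
    return [wa * a + wb * b for a, b in zip(out_a, out_b)], lse

# Hypothetical example: a query attends to neighbourhood keys and, separately, to register keys.
q = [0.3, -1.2, 0.5, 0.9]
nbhd_k = [[0.1, 0.2, 0.3, 0.4], [1.0, -0.5, 0.2, 0.0], [0.3, 0.3, -0.7, 1.1]]
nbhd_v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
reg_k = [[-0.2, 0.8, 0.1, 0.0], [0.9, 0.9, 0.9, 0.9]]
reg_v = [[2.0, -1.0], [0.3, 0.7]]
merged_out, merged_lse = merge(*attend(q, nbhd_k, nbhd_v), *attend(q, reg_k, reg_v))
full_out, full_lse = attend(q, nbhd_k + reg_k, nbhd_v + reg_v)
print(merged_out, full_out)
```

Because this merge is associative, a neighbourhood-attention result and a register or text-condition result can come from separate kernels and be combined afterwards.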

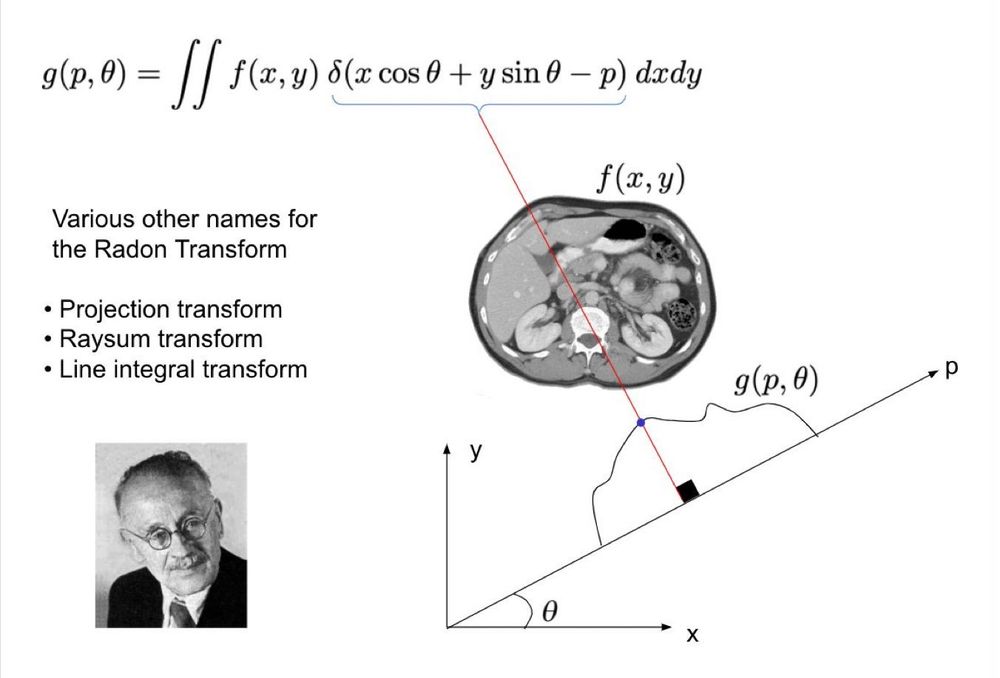

But the RT (Radon transform) isn't just for CT scans. It's a sort of generalization of marginals in probability.

RT g(p, θ): shoot a ray at angle θ + 90° with offset p, and measure the line integral of f(x, y) along that ray.

1/n
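
Written out, the Radon transform is g(p, θ) = ∬ f(x, y) δ(x cos θ + y sin θ − p) dx dy, i.e. f integrated over the line whose normal points at angle θ. If f is a probability density, g(·, θ) is the density of the projection x cos θ + y sin θ, so θ = 0 gives the marginal of x and θ = 90° the marginal of y. The sketch below is a crude discrete version (hypothetical `radon_projection`, nearest-bin assignment); library implementations interpolate more carefully.

```python
import math
from collections import defaultdict

def radon_projection(f, theta, bin_width=1.0):
    """Discrete Radon projection of a 2-D table f[y][x] at angle theta (radians).

    Each cell's mass goes to the bin of p = x*cos(theta) + y*sin(theta), i.e. to the
    line with normal angle theta and offset p; masses in the same bin are summed.
    """
    g = defaultdict(float)
    for y, row in enumerate(f):
        for x, mass in enumerate(row):
            p = x * math.cos(theta) + y * math.sin(theta)
            g[round(p / bin_width)] += mass
    return dict(sorted(g.items()))

f = [[0.1, 0.2], [0.3, 0.4]]  # f[y][x]: a tiny 2x2 probability table
print(radon_projection(f, 0.0))          # bins over x: the x-marginal (column sums)
print(radon_projection(f, math.pi / 2))  # bins over y: the y-marginal (row sums)
print(radon_projection(f, 0.7))          # an oblique projection: still sums to 1
```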

github.com/ethansmith20...

dev-discuss.pytorch.org/t/fsdp-cudac...
