Emilio Ferrara

@emilioferrara.bsky.social

Prof of Computer Science at USC

AI, social media, society, networks, data, and HUMANS LABS http://www.emilio.ferrara.name

Pinned

Emilio Ferrara

@emilioferrara.bsky.social

· Jun 22

Information Suppression in Large Language Models: Auditing, Quantifying, and Characterizing Censorship in DeepSeek

This study examines information suppression mechanisms in DeepSeek, an open-source large language model (LLM) developed in China. We propose an auditing framework and use it to analyze the model's res...

arxiv.org

🤖Thrilled to share our latest work☄️

Have you ever wondered what LLMs know but are not saying?

We built an auditing framework to study information suppression in LLMs, and used it to quantify and characterize censorship in DeepSeek.

Read more:

arxiv.org/abs/2506.12349

Reposted by Emilio Ferrara

🚨 New preprint 🚨

Can AI agents coordinate influence campaigns without human guidance? And how does coordination arise among AI agents? In our latest research, we simulate LLM-powered AI agents acting like users on an online platform, some benign, some running an influence operation

November 3, 2025 at 7:46 PM

Reposted by Emilio Ferrara

New paper + interactive dashboard on the 2024 election information ecosystem.

Building on discourse networks work with @hanshanley.bsky.social @luceriluc.bsky.social @emilioferrara.bsky.social—this lets us visualize the online landscape as a unified system, rather than isolating each platform.

October 20, 2025 at 6:33 PM

Thrilled to share that our latest paper "Information Suppression in Large Language Models" is now published in Information Sciences!

To read more, see: www.sciencedirect.com/science/arti...

great work w/ @siyizhou.bsky.social

October 16, 2025 at 10:44 PM

Reposted by Emilio Ferrara

Peiran Qiu, Siyi Zhou, Emilio Ferrara: Information Suppression in Large Language Models: Auditing, Quantifying, and Characterizing Censorship in DeepSeek https://arxiv.org/abs/2506.12349 https://arxiv.org/pdf/2506.12349 https://arxiv.org/html/2506.12349

June 17, 2025 at 9:05 AM

Reposted by Emilio Ferrara

Amin Banayeeanzade, Ala N. Tak, Fatemeh Bahrani, Anahita Bolourani, Leonardo Blas, Emilio Ferrara, Jonathan Gratch, Sai Praneeth Karimireddy

Psychological Steering in LLMs: An Evaluation of Effectiveness and Trustworthiness

https://arxiv.org/abs/2510.04484

October 7, 2025 at 9:43 AM

Reposted by Emilio Ferrara

Big news from #ICWSM2025!

"The Susceptibility Paradox in Online Social Influence” by @luceriluc.bsky.social, @jinyiye.bsky.social, Julie Jiang & @emilioferrara.bsky.social was named a Top 5 Paper & won Best Paper Honorable Mention!

👏 Congrats to all!

June 26, 2025 at 9:39 PM

Reposted by Emilio Ferrara

So satisfying to have some evidence that Elon Musk is wildly promoting himself on X.

Researchers made 120 sock puppet accounts to see whose content is getting pushed on users. #FAccT2025

@jinyiye.bsky.social @luceriluc.bsky.social @emilioferrara.bsky.social

doi.org/10.1145/3715...

June 25, 2025 at 8:31 AM

Reposted by Emilio Ferrara

A Multimodal TikTok Dataset of #Ecuador's 2024 Political Crisis and Organized Crime Discourse

by Gabriela Pinto, @emilioferrara.bsky.social USC

workshop-proceedings.icwsm.org/abstract.php...

@icwsm.bsky.social #dataforvulnerable25

Proceedings of the ICWSM Workshops

workshop-proceedings.icwsm.org

June 23, 2025 at 10:12 AM

🤖Thrilled to share our latest work☄️

Have you ever wondered what LLMs know but are not saying?

We built an auditing framework to study information suppression in LLMs, and used it to quantify and characterize censorship in DeepSeek.

Read more:

arxiv.org/abs/2506.12349

Information Suppression in Large Language Models: Auditing, Quantifying, and Characterizing Censorship in DeepSeek

This study examines information suppression mechanisms in DeepSeek, an open-source large language model (LLM) developed in China. We propose an auditing framework and use it to analyze the model's res...

arxiv.org

June 22, 2025 at 11:52 PM

Reposted by Emilio Ferrara

I'll be at #ICWSM 2025 next week to present our paper about Bluesky Starter Packs.

For the occasion, I've created a Starter Pack with all of this year's organizers, speakers, and authors I could find on Bluesky!

Link: go.bsky.app/GDkQ3y7

Let me know if I missed anyone!

June 21, 2025 at 11:31 AM

Reposted by Emilio Ferrara

Paper here:

arxiv.org/abs/2505.21729

Bridging the Narrative Divide: Cross-Platform Discourse Networks in Fragmented Ecosystems

Political discourse has grown increasingly fragmented across different social platforms, making it challenging to trace how narratives spread and evolve within such a fragmented information ecosystem....

arxiv.org

June 22, 2025 at 6:45 PM

Reposted by Emilio Ferrara

🚨 𝐖𝐡𝐚𝐭 𝐡𝐚𝐩𝐩𝐞𝐧𝐬 𝐰𝐡𝐞𝐧 𝐭𝐡𝐞 𝐜𝐫𝐨𝐰𝐝 𝐛𝐞𝐜𝐨𝐦𝐞𝐬 𝐭𝐡𝐞 𝐟𝐚𝐜𝐭-𝐜𝐡𝐞𝐜𝐤𝐞𝐫?

New paper: "Community Moderation and the New Epistemology of Fact Checking on Social Media"

with I Augenstein, M Bakker, T. Chakraborty, D. Corney, E Ferrara, I Gurevych, S Hale, E Hovy, H Ji, I Larraz, F Menczer, P Nakov, D Sahnan, G Warren, G Zagni

arxiv.org

June 1, 2025 at 7:48 AM

May 19, 2025 at 8:40 PM

Reposted by Emilio Ferrara

What does coordinated inauthentic behavior look like on TikTok?

We introduce a new framework for detecting coordination in video-first platforms, uncovering influence campaigns using synthetic voices, split-screen tactics, and cross-account duplication.

📄https://arxiv.org/abs/2505.10867

May 19, 2025 at 3:42 PM

Reposted by Emilio Ferrara

"Limited effectiveness of LLM-based data augmentation for COVID-19 misinformation stance detection" by @euncheolchoi.bsky.social @emilioferrara.bsky.social et al, presented by the awesome Chur at The Web Conference 2025

arxiv.org/abs/2503.02328

May 1, 2025 at 5:06 AM

Reposted by Emilio Ferrara

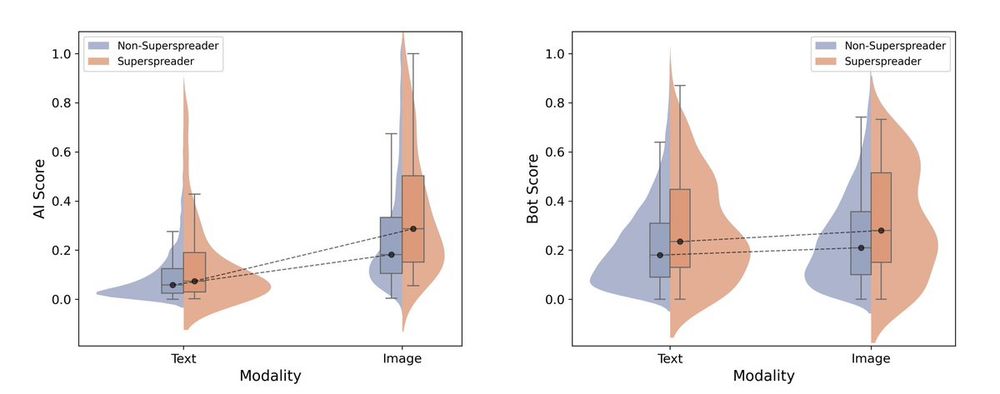

🚀 First study on multimodal AI-generated content (AIGC) on social media! TLDR: AI-generated images are 10× more prevalent than AI-generated text! Just 3% of text spreaders and 10% of image spreaders drive 80% of AIGC diffusion, with premium & bot accounts playing a key role🤖📢

February 21, 2025 at 6:24 AM

Reposted by Emilio Ferrara

🤩Cool collaboration w/ @jinyiye.bsky.social @emilioferrara.bsky.social @luceriluc.bsky.social

🔍Read more: arxiv.org/abs/2502.11248

📊Resources available: github.com/angelayejiny...

Prevalence, Sharing Patterns, and Spreaders of Multimodal AI-Generated Content on X during the 2024 U.S. Presidential Election

While concerns about the risks of AI-generated content (AIGC) to the integrity of social media discussions have been raised, little is known about its scale and the actors responsible for its dissemin...

arxiv.org

February 21, 2025 at 6:27 AM

How does DeepSeek censorship work?

Here is a practical example: I asked it to discuss my work (I have studied online censorship by various countries).

DeepSeek at first starts to compose an accurate answer, even mentioning China’s online censorship efforts.

January 30, 2025 at 6:09 PM

Reposted by Emilio Ferrara

Good day to boost this paper on the contrasting effects of lax content moderation (like on X and what is coming at Meta) and how they drive toxic content; by a team including @luceriluc.bsky.social and @emilioferrara.bsky.social arxiv.org/abs/2412.157...

Safe Spaces or Toxic Places? Content Moderation and Social Dynamics of Online Eating Disorder Communities

Social media platforms have become critical spaces for discussing mental health concerns, including eating disorders. While these platforms can provide valuable support networks, they may also amplify...

arxiv.org

January 8, 2025 at 12:07 AM

Reposted by Emilio Ferrara

i was annoyed at having many chrome tabs with PDF papers having uninformative titles, so i created a small chrome extension to fix it.

i've been using it for a while now, works well.

today i put it on github. enjoy.

github.com/yoavg/pdf-ta...

January 5, 2025 at 10:22 PM

When I was a grad student, I looked up to the giants of my discipline and never thought a day like this would come for me. Happy to celebrate a personal milestone on this holiday! Academia and research are great. Thanks all!

December 25, 2024 at 12:07 AM

Reposted by Emilio Ferrara

"IOHunter: Graph Foundation Model to Uncover Online Information Operations" goes to AAAI'25!

This is the result of an incredible collaboration with @luceriluc.bsky.social @frafabbri.bsky.social and @emilioferrara.bsky.social

Read the entire thread for a summary and the link to the preprint.

December 23, 2024 at 2:02 PM

Reposted by Emilio Ferrara

Fascinating new look at source selection in news from Spangher, @emilioferrara.bsky.social et al. doi.org/10.48550/arX...

December 18, 2024 at 3:13 PM

Are your friends more susceptible to influence than you? Our new work in #ICWSM2025 uncovers The Susceptibility Paradox in online social influence.

w/ @luceriluc.bsky.social, Julie Jiang, @emilioferrara.bsky.social

Explore our findings: arxiv.org/abs/2406.11553

December 12, 2024 at 6:39 PM