Everything changes... turns out the age-old rule that lgwr writes out the log buffer when it's 1/3 full no longer applies in recent Oracle versions.

Observations below from 19.26 (with RAC on Exadata). 👇

Have you ever struggled to trace SQL back to the app? Use setClientInfo(...) to add meaningful metadata to your JDBC queries. It makes tracing and perf debugging way easier.

New blog post with real code & tips:

🔗 martincarstenbach.com/2025/10/16/j...

How many do you think are described in the database reference manual?

None.

The deferral can involve spinning in a tight loop up to 25 times (maximum hard-coded in kcrfw_defer_write).
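A toy Python model of that cap, just to make the bound concrete. `defer_write` and `should_keep_deferring` are hypothetical names. Only the limit of 25 comes from kcrfw_defer_write; what LGWR actually checks on each iteration isn't covered here.

```python
from typing import Callable

# Hard-coded spin cap mentioned for kcrfw_defer_write.
MAX_DEFER_SPINS = 25


def defer_write(should_keep_deferring: Callable[[], bool],
                max_spins: int = MAX_DEFER_SPINS) -> int:
    """Hypothetical model of a bounded deferral: spin in a tight loop while
    the caller-supplied condition says to keep deferring, but never more
    than max_spins iterations. Returns the number of spins performed."""
    spins = 0
    while spins < max_spins and should_keep_deferring():
        spins += 1
    return spins
```

Even if the condition never clears (`defer_write(lambda: True)`), the loop stops after 25 spins and the write goes ahead.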

Manually enabling and disabling adaptive lgwr evaluation trace for pipelined / overlapped redo writes:

xtop - Top for Wall-Clock Time. It uses eBPF/xcapture v3 and gives you "x-ray vision" into Linux system activity.

It will be available next Tuesday, 19 Aug, at 1pm EDT, when I'll also run a live demo webinar!

tanelpoder.com/posts/xtop-t...

Exadata:

CPU_COUNT <= 256: 16

CPU_COUNT > 256: CPU_COUNT/16

Non-Exadata:

CPU_COUNT <= 32: 2

CPU_COUNT > 32: CPU_COUNT/16

Max limit: 256
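The table boils down to a small function. The posts don't say which parameter it sizes, so `table_value` is a hypothetical name, and treating CPU_COUNT/16 as integer (floor) division is an assumption.

```python
def table_value(cpu_count: int, exadata: bool) -> int:
    """Evaluate the Exadata / non-Exadata table above.

    Assumption: CPU_COUNT/16 is floor division (the table doesn't say).
    """
    if cpu_count < 1:
        raise ValueError("cpu_count must be positive")
    # (threshold, value at or below threshold) for each platform
    threshold, low_value = (256, 16) if exadata else (32, 2)
    if cpu_count <= threshold:
        value = low_value
    else:
        value = cpu_count // 16
    return min(value, 256)  # "Max limit: 256"
```

With floor division the two branches meet at the threshold (Exadata: 256 and 257 both give 16; non-Exadata: 32 and 33 both give 2), so the value doesn't jump when CPU_COUNT crosses it.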

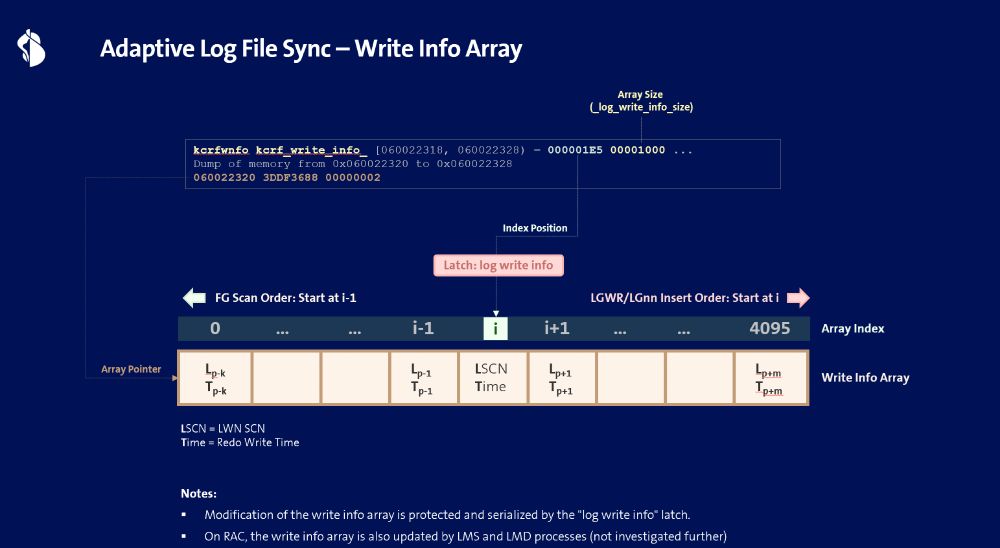

The "redo synch time overhead" in Oracle is the difference between a FG's log file sync (LFS) wait end time and LGWR's redo write completion time.

LGWR and LG workers track the redo write completion times in the "write info array" in the SGA.
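A sketch of that arithmetic. The layout of the write info array isn't described here, so `WriteInfo`, its fields, and the lookup rule (the first completed write whose redo end position reaches the FG's target) are assumptions for illustration.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List


@dataclass
class WriteInfo:
    """Hypothetical write info array entry: the redo position a completed
    write covered up to, and its completion time in microseconds."""
    end_position: int
    completion_us: int


def redo_synch_overhead_us(write_info: List[WriteInfo],
                           target_position: int,
                           lfs_wait_end_us: int) -> int:
    """FG's log file sync wait end time minus the completion time of the
    first write covering the FG's redo. Assumes entries are sorted by
    end_position."""
    positions = [w.end_position for w in write_info]
    i = bisect_left(positions, target_position)
    if i == len(write_info):
        raise LookupError("no completed write covers this redo yet")
    return lfs_wait_end_us - write_info[i].completion_us
```

For example, with writes completing at 1,000 µs (up to position 100) and 1,800 µs (up to position 250), an FG waiting for position 180 whose log file sync wait ends at 2,050 µs has 250 µs of overhead.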

gdb: I have the control

log parallelism, adaptive log file sync, fast sync, adaptive scalable lgwr, pipelined log writes, ...

Wondering if anybody actually knows how all these features work and interact with each other 🤔

In Adaptive Scalable mode, lgwr can dynamically assign multiple lg workers to process a redo write in parallel when these conditions are met:

- redo write size > KSFD_MAXIO (usually 1 MB)

- _max_log_write_parallelism > 1

- active public redo strands > 1
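The three conditions as a Python predicate. The function and argument names are hypothetical, and 1 MB is taken as 1,048,576 bytes, since KSFD_MAXIO is only described as "usually 1 MB".

```python
# Assumed default: KSFD_MAXIO is "usually 1 MB".
KSFD_MAXIO_DEFAULT = 1024 * 1024


def can_parallelize_redo_write(write_size: int,
                               max_log_write_parallelism: int,
                               active_public_strands: int,
                               ksfd_maxio: int = KSFD_MAXIO_DEFAULT) -> bool:
    """True only when all three conditions hold at the same time."""
    return (write_size > ksfd_maxio                # strictly larger than KSFD_MAXIO
            and max_log_write_parallelism > 1      # _max_log_write_parallelism
            and active_public_strands > 1)         # active public redo strands
```

Note the strict comparison: a write of exactly KSFD_MAXIO bytes stays with a single writer.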

On Exadata with pmemlog, Fast Log File Sync dynamically tunes the log file sync sleep duration to balance responsiveness vs. CPU overhead (spinning after wakeup).

Oracle tracks three wait variants via different session stat counters:

1. Sleep

2. Spin

3. Backoff Sleeps
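A toy illustration of the trade-off, not Oracle's algorithm: sleep too short and you spin (burning CPU) after wakeup; sleep too long and commits wait longer than needed. The class, thresholds, update steps, and counter keys are all made up. Only the three wait variants and the idea of tuning the sleep duration come from the post.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ToySyncTuner:
    # All values and rules below are hypothetical.
    sleep_us: int = 100
    min_sleep_us: int = 10
    max_sleep_us: int = 1_000
    spin_budget_us: int = 50  # how long we tolerate spinning after wakeup
    stats: Dict[str, int] = field(
        default_factory=lambda: {"sleep": 0, "spin": 0, "backoff_sleep": 0})

    def wait(self, write_done_after_us: int) -> str:
        """Classify one simulated wait. write_done_after_us is when the redo
        write completed, measured from the start of the wait."""
        if write_done_after_us <= self.sleep_us:
            # Write finished before wakeup: try a shorter sleep next time.
            variant = "sleep"
            self.sleep_us = max(self.min_sleep_us, self.sleep_us - 10)
        elif write_done_after_us <= self.sleep_us + self.spin_budget_us:
            # Woke up early and spun until completion: lengthen the sleep.
            variant = "spin"
            self.sleep_us = min(self.max_sleep_us, self.sleep_us + 10)
        else:
            # Spin budget exceeded: back off with a much longer sleep.
            variant = "backoff_sleep"
            self.sleep_us = min(self.max_sleep_us, self.sleep_us * 2)
        self.stats[variant] += 1
        return variant
```

Each variant bumps its own counter, mirroring the idea of separate session stats per wait type, while the sleep duration drifts toward the point where little spinning is needed.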

It lets you enable and disable adaptive scalable lgwr, fast log file sync, and log parallelism. Highly experimental, of course!

Example 👇
