I live in Amsterdam. Right now working at the Dutch Chamber of Commerce (KVK). Also founder of a boutique consulting firm.

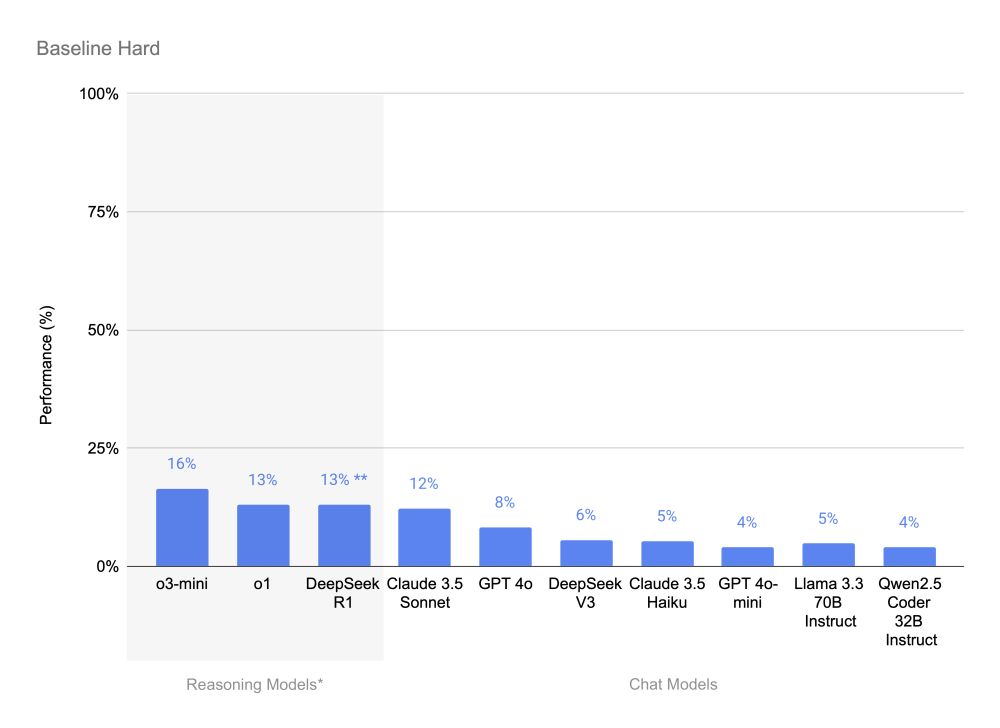

So naturally, some people put it to the test — hours after the 2025 US Math Olympiad problems were released.

The result: They all sucked!

Sat in front of your code.

Silently judging it.

A tool is someone who keeps saying '2025 is the year of the agents'

huggingface.co/blog/dabstep

The moat:

But, also. Dude.

There's no way OpenAI can make this argument without looking very, very silly.

https://www.ft.com/content/a0dfedd1-5255-4fa9-8ccc-1fe01de87ea6

Last I checked the tech industry, we celebrate small teams pushing the industry forward, coming up with novel ways to build software more efficiently. And sharing it.

Yet now there's a class of folks who think this is some bad thing?

Here are my notes on the new models, plus how I ran DeepSeek-R1-Distill-Llama-8B on my Mac using Ollama and LLM

simonwillison.net/2025/Jan/20/...
