Workshop Page: varworkshop.github.io

👉Apply here:

1) Embodied AI:

qualcomm.wd12.myworkdayjobs.com/External/job...

2) Multi-modal LLMs:

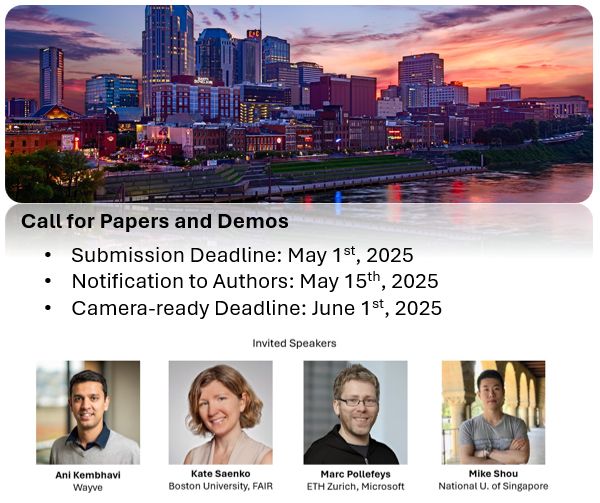

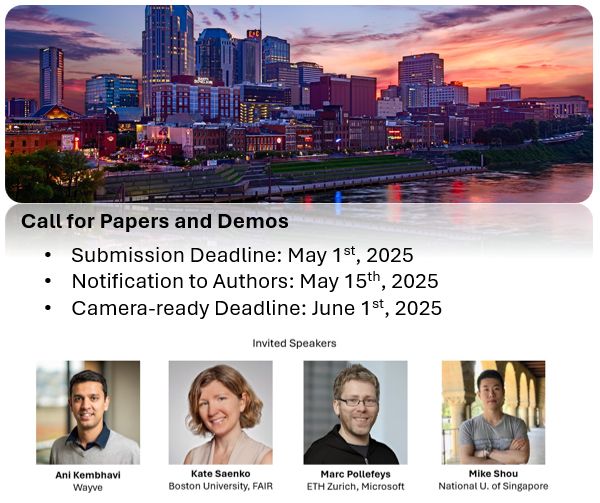

👉Details: varworkshop.github.io/calls/

Details: varworkshop.github.io/challenges/

🚨🚨🚨The winning teams will receive a prize and a contributed talk.

P.S. GPT-4o does not do too well.

Link: varworkshop.github.io/calls/

arXiv: arxiv.org/abs/2501.09757

TL;DR: We use noise injection to capture both epistemic and aleatoric uncertainty!

GitHub Repo: github.com/Qualcomm-AI-...

Dataset Page: www.qualcomm.com/developer/so...
