alexluedtke.com

openreview.net/pdf/cfeab45d...

Application review starts Dec 1. Details here:

academicpositions.harvard.edu/postings/15365

For example: what would faces look like if they were all smiling?

arxiv.org/abs/2509.16842

Challenges in Statistics: A Dozen Challenges in Causality and Causal Inference

https://arxiv.org/abs/2508.17099

The paper is titled "A New Proof of Sub-Gaussian Norm Concentration Inequality" (arxiv.org/abs/2503.14347), led by Zishun Liu and Yongxin Chen at Georgia Tech.

Tried it on a 50-page draft of a causal ML paper. Of its top 10 comments, 4 concerned minor technical issues I'd missed (notation error, misapplication of definition, etc.). In my experience, vanilla chatbots wouldn't have caught these.

Refine leverages the best current AI models to draw your attention to potential errors and clarity issues in research paper drafts.

1/

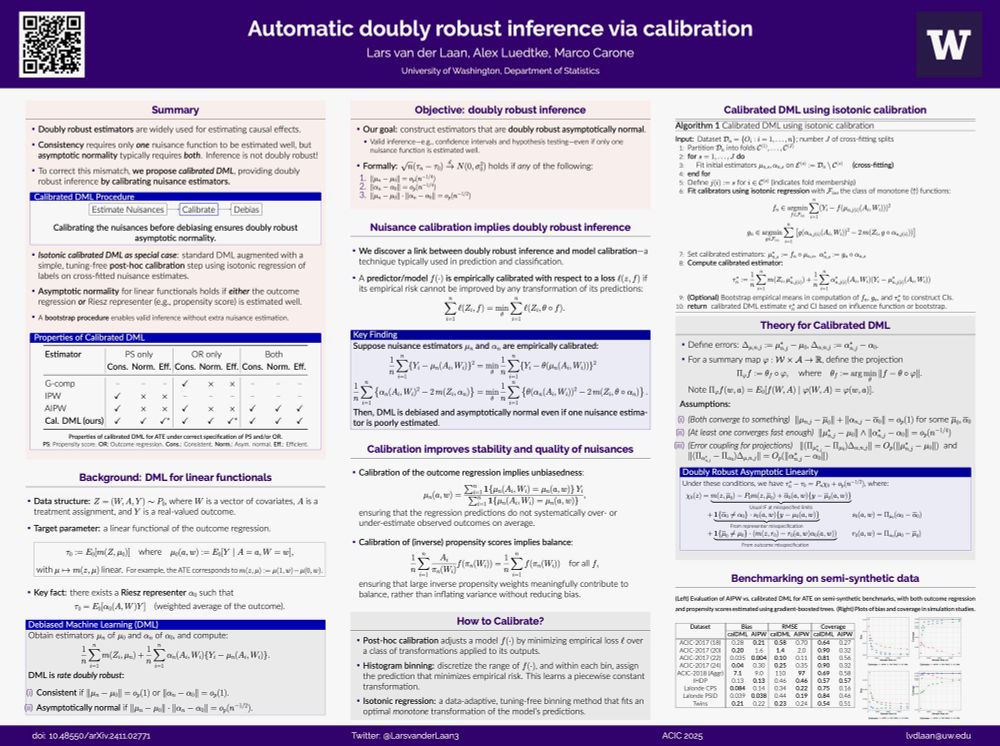

Calibrating nuisance estimates in DML protects against model misspecification and slow convergence.

Just one line of code is all it takes.

We study dataset compression through coreset selection - finding a small, weighted subset of observations that preserves information with respect to some divergence.

arxiv.org/abs/2504.20194

It's deadly to the US economy.

The US is a world leader in tech due to the ecosystem that NIH and NSF propel. It drives innovation for tech transfer, creates a highly skilled sci/tech workforce, and fosters academic/industry cross-fertilization.

Their principal role is to support a scientific ecosystem in the United States that includes everything from education and training to infrastructure and communication.

Automatic Debiased Machine Learning for Smooth Functionals of Nonparametric M-Estimands

https://arxiv.org/abs/2501.11868

donskerclass.github.io/post/papers-...

Led by amazing postdoc Alex Levis: www.awlevis.com/about/

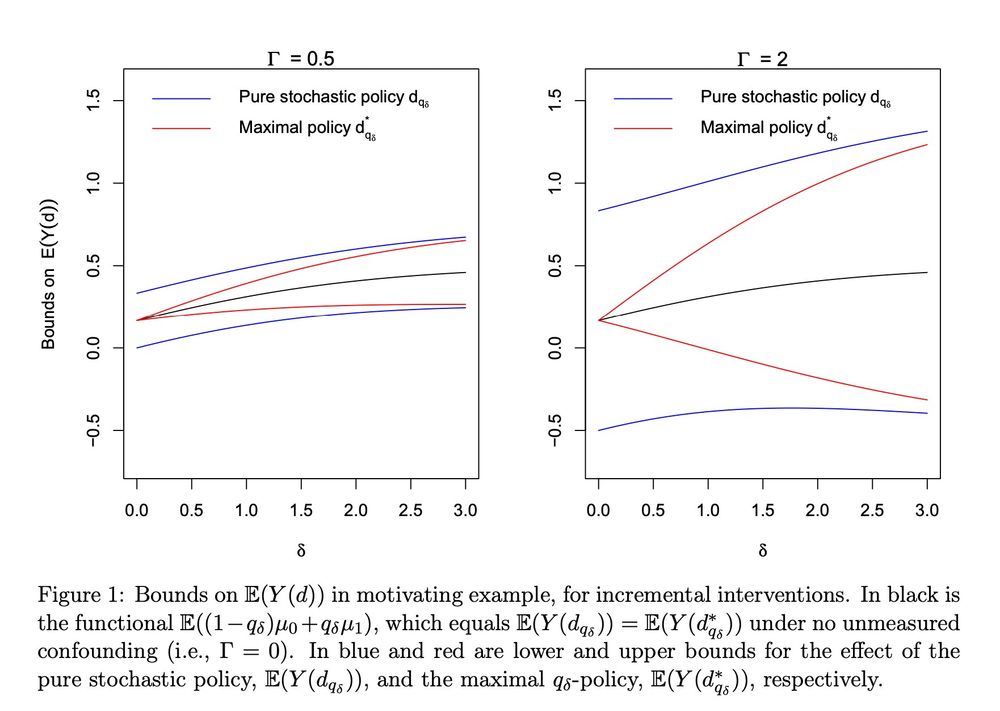

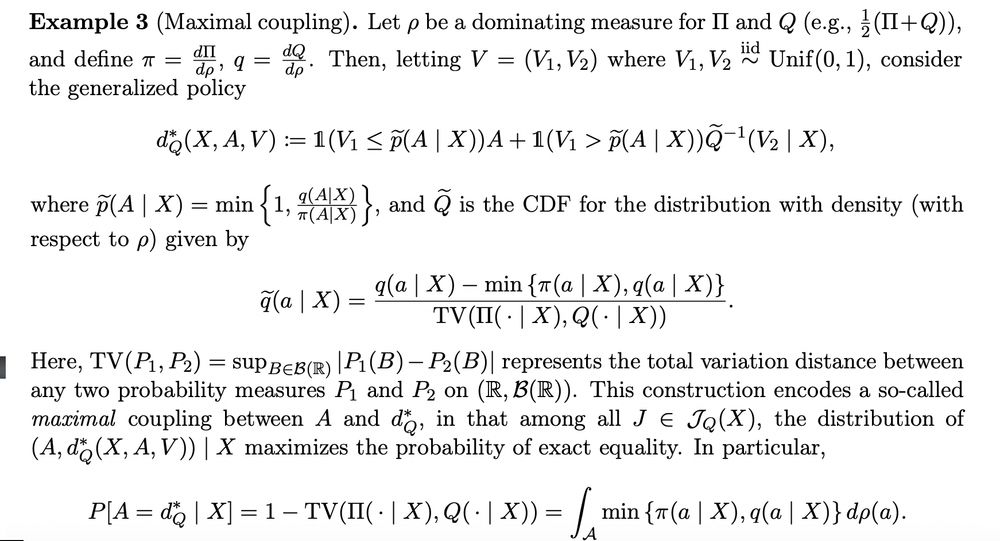

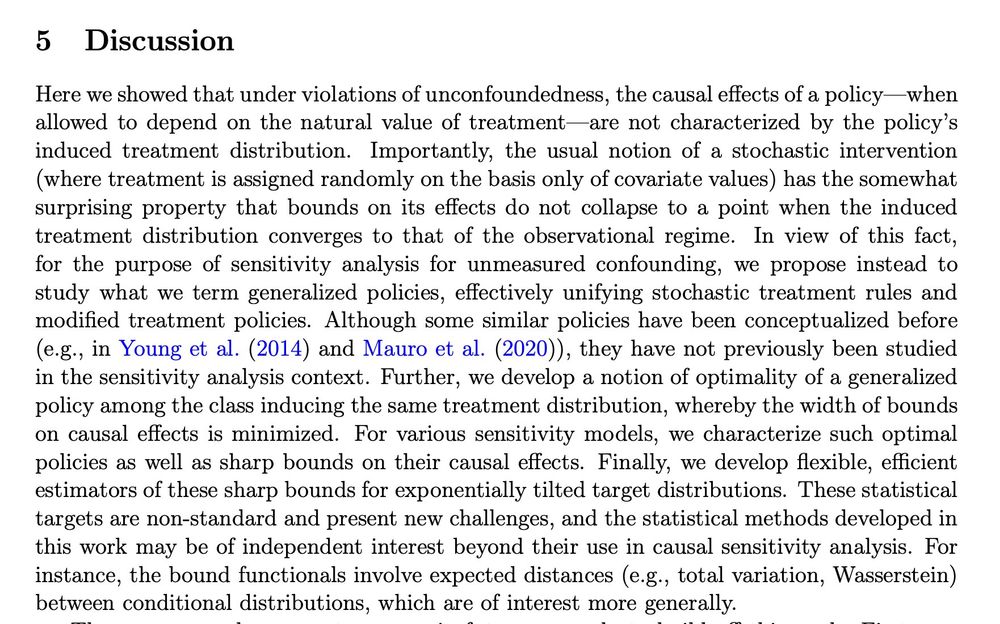

We show causal effects of new "soft" interventions are less sensitive to unmeasured confounding

& study which effects are *least* sensitive to confounding -> makes new connections to optimal transport

In town and interested in causal ML? Would love to grab coffee and chat.

link: arxiv.org/abs/2403.14606

Basically: "autodiff - it's everywhere! what is it, and how do you use it?" seems like a good resource for anyone interested in data science, machine learning, "ai," neural nets, etc

#blueskai #stats #mlsky

stat.uw.edu/pre-applicat...

arxiv.org/abs/2405.08675

tldr: automatic differentiation can be used to derive efficient influence functions and construct efficient estimators.
