@alexdimakis.bsky.social @manoliskellis.bsky.social

www.greeksin.ai

1. Outperforms DeepSeekR1-32B in reasoning.

2. Fully open source, open weights and open data (1M samples).

3. Post-trained only with SFT. RL post-training will likely further improve performance.

github.com/open-thought...

We announce Open Thoughts, an effort to create open reasoning datasets. Using our data, we trained Open Thinker 7B, an open-data model whose performance is very close to the DeepSeekR1-7B distill. (1/n)

t.co/WO5UV2LZQM

The case for small specialized AI models.

Current thinking is that AGI is coming and that one gigantic model will be able to solve everything. Today's agents are mostly prompts on one big model, with prompt engineering used to execute complex processes. (1/n)

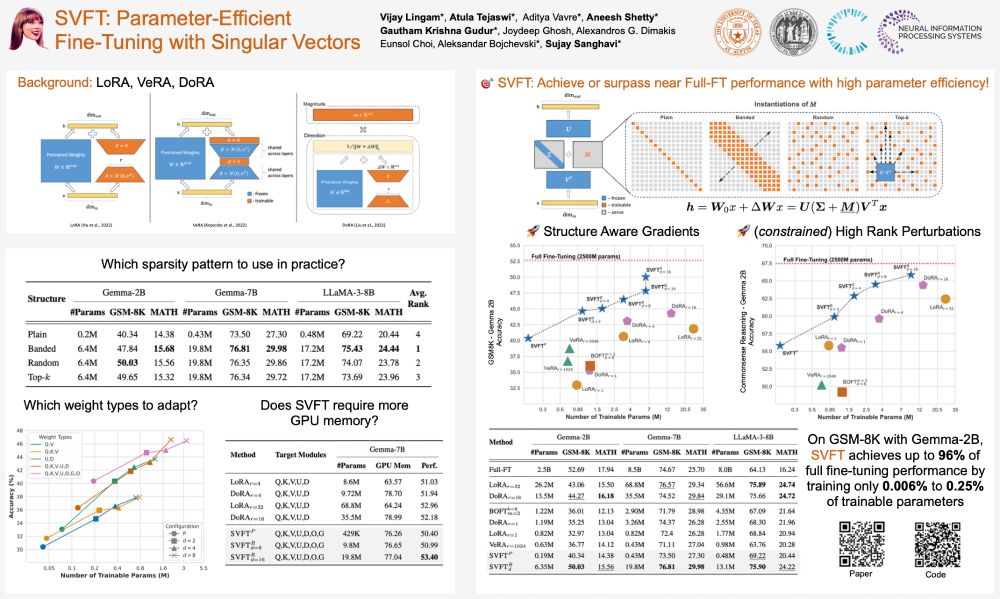

📌Where: East Exhibit Hall A-C #2207, Poster Session 4 East

⏲️When: Thu 12 Dec, 4:30 PM - 7:30 PM PST

#AI #MachineLearning #PEFT #NeurIPS24